November 11, 2021 at 12:42 am

debin.meng

Subscriber

Hi Lumerical Team

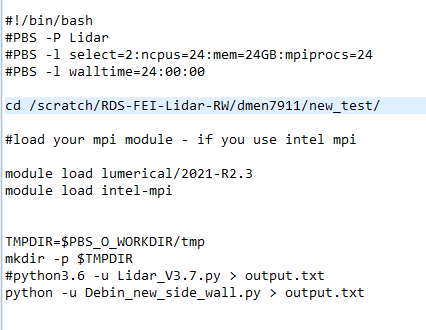

I tried to run the Lumerical Python API on our HPC cluster. The workflow is:

1. Python runs on the HPC and creates a pattern.
2. The Lumerical Python API reads the pattern and creates the simulation file through an .lsf script.
3. The created simulation file is run on the HPC.
4. Python reads the results.

For this workflow, we assigned 4 nodes to the job, each with 24 CPUs and 24 MPI processes (mpiprocs), which is 96 in total. However, the simulation only uses 8 processes, so it takes a long time to run. Also, only one "lum_fdtd_solve" is occupied during the run.

In the other case, where we create the simulation file on a local desktop and only use lum_fdtd_solve to solve it, the job uses the whole assigned capacity.

Could you help me with this?
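The resource arithmetic in the question can be checked with a small helper. This is a sketch, assuming the job is requested with a PBS `select` statement of the form used later in this thread (`select=N:ncpus=24:mpiprocs=24`) and that `mpiprocs` defaults to 1 per chunk when omitted; the function name `total_mpi_processes` is hypothetical. Four chunks of 24 MPI processes give 96 ranks, so a run that uses only 8 is using one twelfth of the request.

```python
def total_mpi_processes(select_spec: str) -> int:
    """Return the total MPI ranks requested by a PBS select statement.

    Hypothetical helper. Handles specs such as "select=4:ncpus=24:mpiprocs=24"
    and multi-chunk specs joined with '+', summing chunk by chunk.
    """
    spec = select_spec.strip()
    if spec.startswith("select="):
        spec = spec[len("select="):]
    total = 0
    for chunk in spec.split("+"):
        fields = chunk.split(":")
        if fields[0].isdigit():
            # Leading integer is the number of chunks (here: nodes)
            count, fields = int(fields[0]), fields[1:]
        else:
            count = 1  # a chunk without a leading count means one chunk
        resources = dict(f.split("=", 1) for f in fields)
        # Assumption: mpiprocs defaults to 1 per chunk when not given
        mpiprocs = int(resources.get("mpiprocs", 1))
        total += count * mpiprocs
    return total


if __name__ == "__main__":
    print(total_mpi_processes("select=4:ncpus=24:mpiprocs=24"))
```

Comparing this number with the rank count the solver actually reports is a quick way to see whether the engine was launched with the scheduler's allocation or with some other resource configuration.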

November 12, 2021 at 5:20 pm

Lito

Ansys Employee

@Debin,

Can you share how you are running the job? Paste the command(s) you used to run the simulation on your cluster. Do not send any attachments as we are not allowed to download them. Thanks!

November 13, 2021 at 12:55 am

November 13, 2021 at 1:29 am

Lito

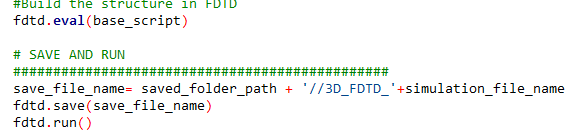

Ansys Employee

The Automation/Python API is part of our scripting environment, so it takes its resource configuration from the FDTD CAD when running a simulation. That is, it uses the resource configuration set in the CAD/GUI, which is stored in your user preference file "FDTD Solutions.ini". It will not use the resources set in your submission script. To use the resources from your submission script, run the "simulation.fsp" file directly with something like:

mpirun /path/to/Lumerical/installation/bin/fdtd-engine-impi-lcl -t 1 simulationfile.fsp

Otherwise, set your resources in the CAD/GUI and run the Python script from the FDTD CAD/GUI. See this article for details: Lumerical job scheduler integration configuration (Slurm, Torque, LSF, SGE)
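If the Python workflow should stay in control, one option consistent with running the .fsp file directly is to let lumapi save the file and then launch the engine from Python with `subprocess`, so that mpirun picks up the allocation from the submission script. This is a sketch, assuming the placeholder engine path from the reply (replace it with your installation path), that `mpirun` is on `PATH` inside the job, and that your MPI is integrated with the scheduler so no `-np` is needed; `build_engine_command` and `run_engine` are hypothetical names.

```python
import shlex
import subprocess
from pathlib import Path

# Placeholder path from the reply; replace with your installation path.
ENGINE = "/path/to/Lumerical/installation/bin/fdtd-engine-impi-lcl"


def build_engine_command(fsp_file: str, engine: str = ENGINE) -> list[str]:
    """Return the argument list for running one .fsp file under mpirun.

    Mirrors the command in the reply: mpirun <engine> -t 1 <file>.
    No -np is passed, leaving the rank count to the MPI launcher's
    scheduler integration (an assumption about your MPI setup).
    """
    return ["mpirun", engine, "-t", "1", str(Path(fsp_file))]


def run_engine(fsp_file: str) -> None:
    """Launch the solver; raises CalledProcessError on a non-zero exit."""
    subprocess.run(build_engine_command(fsp_file), check=True)


if __name__ == "__main__":
    # Print the command instead of running it, as a dry run.
    print(shlex.join(build_engine_command("simulationfile.fsp")))
```

After `run_engine` returns, the results could be read back by loading the same .fsp file in a lumapi session, keeping the create, solve, and read steps of the original workflow.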

November 13, 2021 at 2:35 am

debin.meng

Subscriber

Hi Lito

Thank you for your reply. Unfortunately, we don't have CAD/GUI capability on our HPC. We use PyVirtualDisplay so that lumapi can create the .fsp file on the HPC and run it. Is there any other way to make this workflow run on the HPC and assign the correct number of nodes and MPI processes to the job?

November 15, 2021 at 10:44 pm

Lito

Ansys Employee

Since you are using a virtual display and scripts on your cluster, you can also set your resources from the script. The settings are saved to the "FDTD Solutions.ini" file in your user's .config/Lumerical folder.
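Setting resources from the script could look like the sketch below: build Lumerical script commands from the desired process count and pass them to the running session (for example with the lumapi session's `eval` method). This assumes the `setresource` script command with the signature `setresource(solver, resource_number, property, value)` and the property names "processes" and "threads"; verify both against the `setresource` documentation for your release. The helper name `resource_script` is hypothetical.

```python
def resource_script(processes: int, threads: int = 1, solver: str = "FDTD",
                    resource: int = 1) -> str:
    """Build Lumerical script lines that update one resource entry.

    Assumption: setresource(solver, resource_number, property, value) with
    the property names "processes" and "threads"; check them for your version.
    """
    if processes < 1 or threads < 1:
        raise ValueError("processes and threads must be positive")
    lines = [
        f'setresource("{solver}", {resource}, "processes", {processes});',
        f'setresource("{solver}", {resource}, "threads", {threads});',
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    # For 2 nodes x 24 MPI processes requested in the submission script
    print(resource_script(48))
```

Generating the commands from the submission script's numbers, rather than hard-coding them, keeps the saved "FDTD Solutions.ini" entry in step with whatever the job actually requested.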

November 15, 2021 at 10:56 pm

debin.meng

Subscriber

Hi Lito

Thank you for your reply. Each of our nodes has 24 CPUs and 24 MPI processes (mpiprocs). If we assign 2 or more nodes, the simulation is terminated partway through and never actually starts running. I am not sure how to solve this problem.

Best Regards

Debin Meng

November 16, 2021 at 12:17 am

Lito

Ansys Employee

How did you set your resources? Have you tried running on 1 node using all of its available cores?

November 16, 2021 at 12:48 am

debin.meng

Subscriber

Hi Lito

This is our FDTD.ini:

[General]

SaveBackupFile=true

backgroundB=@Variant(\0\0\0\x87\0\0\0\0)

backgroundG=@Variant(\0\0\0\x87\0\0\0\0)

backgroundR=@Variant(\0\0\0\x87\0\0\0\0)

geometry8.26=@Byte(\x1\xd9\xd0\xcb\0\x3\0\0\0\0\a\x80\0\0\0\x17\0\0\xe\xff\0\0\x4\xf\0\0\a\x80\0\0\0\x17\0\0\xe\xff\0\0\x4\xf\0\0\0\0\0\0\0\0\xf\0\0\0\a\x80\0\0\0\x17\0\0\xe\xff\0\0\x4\xf)

lastexpirewarning=@DateTime(\0\0\0\x10\0\0\0\0\0\0%\x87\x89\0\xe3\xa0\x42\0)

mainWindowVersion8.26=@Byte(\0\0\0\xff\0\0\0\0\xfd\0\0\0\x3\0\0\0\0\0\0\0\xce\0\0\x2\xb3\xfc\x2\0\0\0\x2\xfb\0\0\0\x18\0\x64\0o\0\x63\0k\0T\0r\0\x65\0\x65\0V\0i\0\x65\0w\x1\0\0\0N\0\0\x1\xd3\0\0\0\x92\0\xff\xff\xff\xfb\0\0\0\x1c\0\x64\0o\0\x63\0k\0R\0\x65\0s\0u\0l\0t\0V\0i\0\x65\0w\x1\0\0\x2'\0\0\0\xda\0\0\0[\0\xff\xff\xff\0\0\0\x1\0\0\x2?\0\0\x2\xb3\xfc\x2\0\0\0\x1\xfc\0\0\0N\0\0\x2\xb3\0\0\0\xc7\x1\0\0\x1a\xfa\0\0\0\0\x2\0\0\0\x3\xfb\0\0\0 \0\x64\0o\0\x63\0k\0S\0\x63\0r\0i\0p\0t\0\x45\0\x64\0i\0t\0o\0r\x1\0\0\0\0\xff\xff\xff\xff\0\0\0\xac\0\xff\xff\xff\xfb\0\0\0(\0\x64\0o\0\x63\0k\0\x43\0o\0m\0p\0o\0n\0\x65\0n\0t\0L\0i\0\x62\0r\0\x61\0r\0y\x1\0\0\0\0\xff\xff\xff\xff\0\0\0\x13\0\xff\xff\xff\xfb\0\0\0\x30\0O\0p\0t\0i\0m\0i\0z\0\x61\0t\0i\0o\0n\0 \0\x44\0o\0\x63\0k\0 \0W\0i\0\x64\0g\0\x65\0t\x1\0\0\0\0\xff\xff\xff\xff\0\0\0}\0\xff\xff\xff\0\0\0\x3\0\0\aX\0\0\0\xd5\xfc\x1\0\0\0\x2\xfb\0\0\0 \0\x64\0o\0\x63\0k\0S\0\x63\0r\0i\0p\0t\0P\0r\0o\0m\0p\0t\x1\0\0\0(\0\0\x3\xcb\0\0\0y\0\a\xff\xff\xfb\0\0\0.\0\x64\0o\0\x63\0k\0S\0\x63\0r\0i\0p\0t\0W\0o\0r\0k\0s\0p\0\x61\0\x63\0\x65\0V\0i\0\x65\0w\x1\0\0\x3\xf9\0\0\x3\x87\0\0\0J\0\xff\xff\xff\0\0\x4?\0\0\x2\xb3\0\0\0\x4\0\0\0\x4\0\0\0\b\0\0\0\b\xfc\0\0\0\x2\0\0\0\0\0\0\0\x4\0\0\0\x16\0\x65\0\x64\0i\0t\0T\0o\0o\0l\0\x62\0\x61\0r\x3\0\0\0\0\xff\xff\xff\xff\0\0\0\0\0\0\0\0\0\0\0\x18\0m\0o\0u\0s\0\x65\0T\0o\0o\0l\0\x62\0\x61\0r\x3\0\0\0\xb0\xff\xff\xff\xff\0\0\0\0\0\0\0\0\0\0\0\x16\0v\0i\0\x65\0w\0T\0o\0o\0l\0\x62\0\x61\0r\x3\0\0\x1\x87\xff\xff\xff\xff\0\0\0\0\0\0\0\0\0\0\0\x18\0\x61\0l\0i\0g\0n\0T\0o\0o\0l\0\x62\0\x61\0r\x3\0\0\x1\xd7\xff\xff\xff\xff\0\0\0\0\0\0\0\0\0\0\0\x2\0\0\0\x3\0\0\0\x16\0m\0\x61\0i\0n\0T\0o\0o\0l\0\x62\0\x61\0r\x1\0\0\0\0\xff\xff\xff\xff\0\0\0\0\0\0\0\0\0\0\0\x1e\0s\0i\0m\0u\0l\0\x61\0t\0\x65\0T\0o\0o\0l\0\x62\0\x61\0r\x1\0\0\x3\xdd\xff\xff\xff\xff\0\0\0\0\0\0\0\0\0\0\0\x12\0s\0\x65\0\x61\0r\0\x63\0h\0\x42\0\x61\0r\x1\0\0\x4\xb2\xff\xff\xff\xff\0\0\0\0\0\0\0\0)

perspectiveView=true

pwd=/scratch/RDS-FEI-Lidar-RW/dmen7911/new_test

updateCheckDate=@Variant(\0\0\0\xe\0%\x87\x90)

viewFilenameInTitlebar=true

viewMode=0

xyView=true

xzView=true

yzView=true

[jobmanager]

FDTD=Local Host localhost 24 1 1 true FDTD fdtd-engine-mpich2nem true true Remote: MPICH2 /usr/local/lumerical/2021-R2.3/mpich2/nemesis/bin/mpiexec /usr/local/lumerical/2021-R2.3/bin/fdtd-engine-mpich2nem false false false false false #!/bin/sh\nmodule load intel-mpi\nmodule load lumerical/2021-R2.3\nmpirun fdtd-engine-impi-lcl -logall -remote {PROJECT_FILE_PATH}

We are using the PBS job scheduler to submit and run jobs on the HPC.
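The last part of the [jobmanager] entry above is the submission script stored for the resource, with line breaks saved as literal `\n` escapes. A sketch that extracts and unescapes it for reading, assuming only what is visible in the line above: the script starts at the first "#!" and uses the `{PROJECT_FILE_PATH}` placeholder. The meaning of the other fields is not interpreted here, and `embedded_script` is a hypothetical helper.

```python
from typing import Optional


def embedded_script(jobmanager_value: str, project_file: Optional[str] = None) -> str:
    """Return the submission script stored at the end of a [jobmanager] entry.

    Assumes the script begins at the first "#!" and that line breaks are
    stored as a literal backslash followed by "n".
    """
    start = jobmanager_value.find("#!")
    if start == -1:
        raise ValueError("no embedded script found")
    script = jobmanager_value[start:].replace("\\n", "\n")
    if project_file is not None:
        # {PROJECT_FILE_PATH} is the placeholder visible in the stored script
        script = script.replace("{PROJECT_FILE_PATH}", project_file)
    return script


if __name__ == "__main__":
    entry = (r"Local Host localhost 24 1 1 true FDTD #!/bin/sh\nmodule load intel-mpi"
             r"\nmodule load lumerical/2021-R2.3"
             r"\nmpirun fdtd-engine-impi-lcl -logall -remote {PROJECT_FILE_PATH}")
    print(embedded_script(entry, "simulationfile.fsp"))
```

Printing the script for the entry above shows the `module load lumerical/2021-R2.3` and `mpirun fdtd-engine-impi-lcl` lines that this resource uses.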

November 16, 2021 at 2:39 am

Lito

Ansys Employee

Try upgrading to the latest release, 2021 R2.4; we have seen some issues with 2021 R2.3 on Linux without a GUI or X server. Run the Python script through the GUI, and set the resources as shown in this article in our KB when using a job scheduler. Also check whether you can run a "simulation.fsp" file directly with the job scheduler on more than 1 node:

#PBS -l select=2:ncpus=24:mem=24GB:mpiprocs=24

cd /path/to/simulation/folder

module load lumerical/2021-R2.4

module load intel-mpi

mpirun /path/to/Lumerical/installation/bin/fdtd-engine-impi-lcl -t 1 your_simulationfile.fsp
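To confirm that a job really received more than one node before the engine starts, the allocation can be read from the node file PBS writes for each job, whose path is in the `PBS_NODEFILE` environment variable. This is a sketch, assuming the usual PBS layout of one hostname per line, repeated once per MPI slot; `ranks_per_host` is a hypothetical helper.

```python
import os
from collections import Counter
from typing import Optional


def ranks_per_host(nodefile: Optional[str] = None) -> Counter:
    """Count MPI slots per host listed in the PBS node file.

    Assumes one hostname per line, repeated once per MPI slot.
    Falls back to $PBS_NODEFILE when no path is given.
    """
    path = nodefile or os.environ["PBS_NODEFILE"]
    with open(path) as fh:
        hosts = [line.strip() for line in fh if line.strip()]
    return Counter(hosts)


if __name__ == "__main__" and "PBS_NODEFILE" in os.environ:
    counts = ranks_per_host()
    print(f"{len(counts)} node(s), {sum(counts.values())} MPI slot(s)")
    for host, n in sorted(counts.items()):
        print(f"  {host}: {n}")
```

For the `select=2:ncpus=24:mpiprocs=24` request above, a healthy allocation would show 2 nodes and 48 slots; running this at the top of the job script separates scheduler problems from engine problems.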

November 16, 2021 at 2:54 am

debin.meng

Subscriber

Hi Lito

We are able to assign more than 1 node to the job by directly uploading the simulation file to HPC and running it by submitting the PBS job scheduler-based script. However, if we use the Python-assisted workflow and assign more than one node to the job, the simulation is terminated. Also, since we use the PBS job scheduler, I am not sure how we could make the process work even if we had a graphical node on the HPC: the resource settings only seem to support the Torque, LSF, Slurm, and SGE job schedulers.

Best Regards

Debin Meng

November 17, 2021 at 12:08 am

Lito

Ansys Employee

@Debin,

> We are able to assign more than 1 node to the job by directly uploading the simulation file to HPC and running it by submitting the PBS job scheduler-based script.

Per your message above, are you using 2021 R2.3 when you run simulations directly on the HPC/cluster with the job submission script on more than 1 node?

> We use PyVirtualDisplay so that lumapi can create the .fsp file on the HPC and run it.

If you are already using a virtual display, you can set the resources in your Python script. Run the Python script from the job scheduler submission script, and the Python script will set the resources that Lumerical uses to run any simulation file on the cluster, matching the specs requested in your job submission script.

November 17, 2021 at 6:32 am

debin.meng

Subscriber

Hi Lito

We are using R2.3 at the moment. We will ask the HPC team to upgrade the version for us. Thank you.

The topic ‘Lumerical Python API doesn’t Use the full capacity of the assigned CPU in HPC’ is closed to new replies.


