TAGGED: -LS-DYNA-run-time, electromagnetism, hpc, mpp


December 2, 2024 at 3:04 pm

rajesh.pamarthi2711

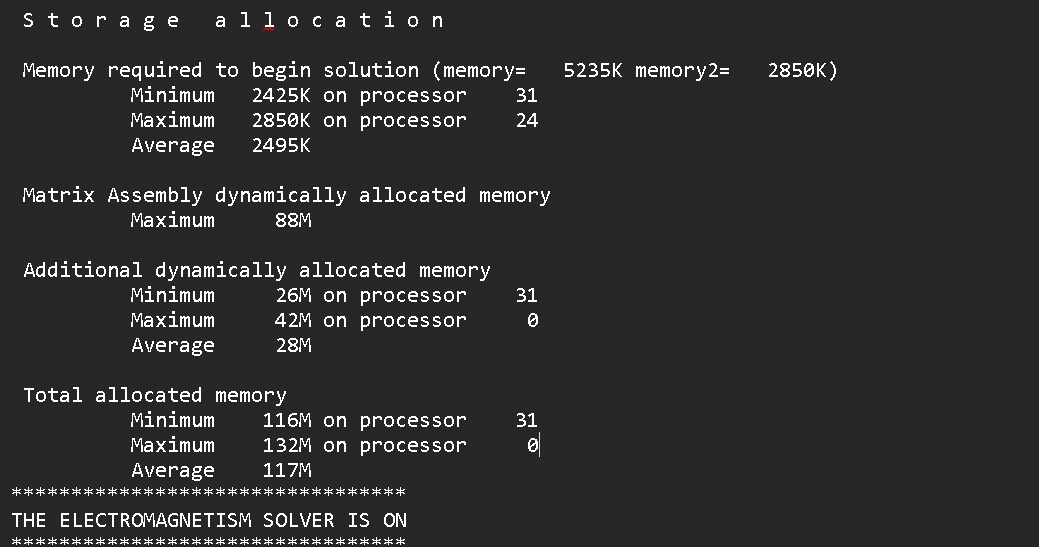

Subscriber

Hi,

I am trying to run a simulation of my .k file on an HPC cluster. My .k file has a total of 240952 elements, and the following software is available on my HPC system:

    ansyscl
    easybuild
    libmppdyna_d__avx2_ifort190_intelmpi.so
    libmppdyna_s__avx2_ifort190_intelmpi.so
    ls-dyna_mpp_d_R14_1_0_x64_centos79_ifort190_avx2_intelmpi-2018.l2a
    ls-dyna_mpp_d_R14_1_0_x64_centos79_ifort190_avx2_intelmpi-2018_sharelib
    ls-dyna_mpp_d_R14_1_0_x64_centos79_ifort190_avx2_intelmpi-2018_sharelib.tgz
    ls-dyna_mpp_s_R14_1_0_x64_centos79_ifort190_avx2_intelmpi-2018.l2a
    ls-dyna_mpp_s_R14_1_0_x64_centos79_ifort190_avx2_intelmpi-2018_sharelib
    ls-dyna_mpp_s_R14_1_0_x64_centos79_ifort190_avx2_intelmpi-2018_sharelib.tgz
    ls-dyna_smp_d_R14_1_0_x64_centos79_ifort190_sse2
    ls-dyna_smp_d_R14_1_0_x64_centos79_ifort190_sse2.l2a
    ls-dyna_smp_d_R14_1_0_x64_centos79_ifort190_sse2.tgz
    ls-dyna_smp_s_R14_1_0_x64_centos79_ifort190_sse2
    ls-dyna_smp_s_R14_1_0_x64_centos79_ifort190_sse2.l2a
    mpp-dyna
    mpp-dyna-d
    mpp-dyna-s
    smp-dyna
    smp-dyna-d
    smp-dyna-s

I am submitting an sbatch job from the command line with the following script:

    #!/bin/bash
    #SBATCH --time=01:00:00 # walltime
    #SBATCH --nodes=1 # use 1 node
    #SBATCH --ntasks=32 # number of processor cores (i.e. tasks)
    #SBATCH --mem-per-cpu=4000M # memory per CPU core
    #SBATCH --output=output.log
    #SBATCH --error=error.log
    module load GCC/13.2.0 OpenMPI/4.1.6 intel-compilers/2023.2.1 impi/2021.10.0 Python/3.11.5 SciPy-bundle/2023.11 matplotlib/3.8.2 LS-DYNA/14.1.0
    export I_MPI_PIN_DOMAIN=core
    srun mpp-dyna i=/home/pro/main.k memory=120000000

With the above settings (ntasks=32), I get a status.out file stating that the run will take 285 hours to complete. If I use 1 node and about 60 tasks, it says the simulation will complete in 206 hours. If I set the number of nodes to more than 2, the simulation gets stuck at a message after some time, but I do not get any errors (more nodes, more tasks: the simulation gets stuck).

I have tried many different settings and options, but I could not find the right tuning parameters for a fast simulation. Sometimes my d3hsp file is stuck at:

    S t o r a g e   a l l o c a t i o n
    Memory required to begin solution (memory= 5235K memory2= 2850K)
      Minimum 2425K on processor 31
      Maximum 2850K on processor 24
      Average 2495K
    Matrix Assembly dynamically allocated memory
      Maximum 88M
    Additional dynamically allocated memory
      Minimum 26M on processor 31
      Maximum 42M on processor 0
      Average 28M
    Total allocated memory
      Minimum 116M on processor 31
      Maximum 132M on processor 0
      Average 117M

After the above information, the following banner has to appear and the EM solver has to start, but it fails to start:

    *********************************
    THE ELECTROMAGNETISM SOLVER IS ON
    *********************************

Can you please help me with this?

-

December 27, 2024 at 9:12 pm

Reno Genest

Ansys Employee

Hello Rajesh,

Have you tried with the latest MPP R15.0.2 LS-DYNA solver?

LS-DYNA (user=user) Download Page

Username: user

Password: computer

Also, have you tried with the latest Intel MPI 2021.13 as recommended by Intel?

Also, note that the time estimate in the d3hsp or mesXXXX files is not completely accurate. You should let the runs finish to get the actual time each simulation takes.

What is the problem with LS-RUN?

Let me know how it goes.

Reno.

-

December 30, 2024 at 8:37 pm

rajesh.pamarthi2711

Subscriber

Hi Reno,

Yes, I tried with the latest R15 solver and MPI 2021.13 as well:

f'/software/rapids/r24.04/impi/2021.9.0-intel-compilers-2023.1.0/mpi/2021.9.0/bin/mpirun -genv I_MPI_PIN_DOMAIN=core -np 147 /software/rapids/r24.04/LS-DYNA/15.0.2-intel-2023a/ls-dyna_mpp_d_R15_0_2_x64_centos79_ifort190_avx2_intelmpi-2018_sharelib i={new_file_path} memory=128M'

It is still not speeding up. Do you recommend any other approaches?

-

December 30, 2024 at 10:59 pm

Reno Genest

Ansys Employee

Hello Rajesh,

The model needs to be large enough to use 147 cores. As a rule of thumb, we like to have 5-10k elements per core. So, for your model with 240 952 elements, this means running on 24-48 cores should be ideal. Running with more cores may degrade performance because the communication between the cores becomes the bottleneck.
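The rule of thumb above is simple arithmetic, and a minimal sketch can make it easy to check for other core counts. The function name `core_range` and its parameters are hypothetical and chosen for illustration only; the element count and the 5-10k bounds come from this thread.

```python
def core_range(n_elements, min_per_core=5_000, max_per_core=10_000):
    """Return (fewest, most) cores suggested by an elements-per-core rule of thumb.

    Hypothetical helper: the 5k-10k defaults are the rule of thumb quoted in
    this thread, not a hard limit of the solver.
    """
    fewest = n_elements // max_per_core  # cores needed at 10k elements per core
    most = n_elements // min_per_core    # cores needed at 5k elements per core
    return max(fewest, 1), max(most, 1)


n_elements = 240_952  # element count reported for the model in this thread
low, high = core_range(n_elements)
print(f"suggested core range: {low}-{high}")  # suggested core range: 24-48

# Elements per core for the core counts that were tried in this thread.
for cores in (24, 32, 60, 147):
    print(f"{cores:>4} cores -> about {n_elements // cores} elements per core")
```

With 147 cores, each core gets well under 5k elements, which is consistent with the advice that communication is likely to dominate at that core count.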

Also, from your command line above, it looks like you are using Intel MPI 2021.9 and not 2021.13. Please try with 2021.13.

Could you post the Timing Information at the end of the d3hsp file? What part of the simulation takes most of the %CPU Clock time?

Also, is this a pure EM model or is it coupled with structural and thermal? If it is a pure EM solve, you can use rigid material models for the structural solver and set NCYLFEM to a high value on *EM_CONTROL.

Do you have wires modeled? If so, have you tried modeling wires with beam elements instead of solid elements? This should speed up the calculation.

If you are a commercial customer, please create a support case on the Ansys Customer Support Space (ACSS) and we will be able to look at your model and help you better:

customer.ansys.com

Let me know how it goes.

Reno.

-

December 31, 2024 at 5:38 pm

Reno Genest

Ansys Employee

Hello Rajesh,

I checked with one of the EM developers and he said that the EM solver should scale with more MPP cores, but it is problem dependent. The rule of 5-10k elements per core still applies. To better help you, we would have to look at the model. If you are a commercial customer, please create a support case on ACSS at customer.ansys.com. If you are an academic customer, you can contact your Ansys account manager for other ways to get support.

Also, the developer said: “If it is resistive heating solver, then scalability should be good. For Eddy current problems, the main cost will be the BEM system which uses dense matrices. The BEM solve also scales quite well, but matrix assembly and solving times vary drastically from one problem to the next so there it becomes harder to establish a general rule. In general, the choice of ncyclbem and which tolerance settings are used will play a greater role. And then, it gets more complex if EM contact is turned on.”

Could you post the Timing Information at the end of the d3hsp file with %clock time? This would help identify which part of the model is taking the most CPU time.

Lastly, from your previous post, the EM timestep is very small, so depending on the termination time, the run will need a large number of cycles and take a long time to compute. You may want to increase the time step size for a faster solve.
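To illustrate how the time step drives the run length, here is a minimal sketch that estimates the number of cycles as termination time divided by time step. It assumes a constant step and uses the values printed in the log posted later in this thread (dt 5.00E-08, termination near 6.0E-04); whether that printed dt is the EM time step or the structural one is an assumption here. The helper name `estimated_cycles` is hypothetical.

```python
def estimated_cycles(termination_time, dt):
    """Estimate the cycle count for a constant time step (hypothetical helper)."""
    return round(termination_time / dt)


termination_time = 6.0e-4  # from the log in this thread ("t 6.0000E-04")
dt = 5.0e-8                # from the log in this thread ("dt 5.00E-08"); assumed constant

# Show how a larger step reduces the number of cycles to compute.
for factor in (1, 2, 10):
    cycles = estimated_cycles(termination_time, dt * factor)
    print(f"dt x{factor:<2} -> about {cycles} cycles")
```

The baseline estimate of about 12000 cycles is close to the 12003 cycles reported in the log. How far the step can be increased without losing accuracy is problem dependent and is not something this sketch can tell you.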

Let me know if this helps or not.

Reno.

-

January 2, 2025 at 10:57 am

rajesh.pamarthi2711

Subscriber

Hello Reno,

Using

    #SBATCH --nodes=1
    #SBATCH --ntasks=24
    module load GCC/13.2.0 intel-compilers/2023.2.1 impi/2021.10.0 Python/3.11.5 SciPy-bundle/2023.12 matplotlib/3.8.2 LS-DYNA/14.1.0

the run produced the following output:

    12003 t 6.0000E-04 dt 5.00E-08 write d3plot file     12/31/24 00:15:02
    *** termination time reached ***
    12003 t 6.0005E-04 dt 5.00E-08 write d3dump01 file   12/31/24 00:15:07
    12003 t 6.0005E-04 dt 5.00E-08 flush i/o buffers     12/31/24 00:15:07
    12003 t 6.0005E-04 dt 5.00E-08 write d3plot file     12/31/24 00:15:12

    N o r m a l   t e r m i n a t i o n                  12/31/24 00:15:12

    S t o r a g e   a l l o c a t i o n
    Memory required to complete solution (memory= 5235K memory2= 2930K)
      Minimum 2431K on processor 23
      Maximum 2930K on processor 4
      Average 2525K
    Matrix Assembly dynamically allocated memory
      Maximum 210M
    Additional dynamically allocated memory
      Minimum 202M on processor 2
      Maximum 473M on processor 23
      Average 258M
    Total allocated memory
      Minimum 414M on processor 2
      Maximum 685M on processor 23
      Average 470M

    T i m i n g   i n f o r m a t i o n
                            CPU(seconds)    %CPU  Clock(seconds)  %Clock
    ----------------------------------------------------------------
    Keyword Processing ...    5.0480E+00    0.00      5.1508E+00    0.00
    MPP Decomposition ....    1.8137E+01    0.01      1.9138E+01    0.01
      Init Proc ..........    1.2349E+01    0.01      1.2409E+01    0.01
      Translation ........    5.3905E+00    0.00      6.3184E+00    0.00
    Initialization .......    7.9393E+00    0.00      8.2971E+00    0.00
    Element processing ...    1.5533E+01    0.01      1.1069E+02    0.05
      Solids .............    1.4591E+01    0.01      7.6382E+01    0.04
      E Other ............    1.1606E-01    0.00      1.0958E+01    0.01
    Binary databases .....    4.1119E+01    0.02      9.0722E+01    0.04
    ASCII database .......    1.2786E-01    0.00      1.7478E+01    0.01
    Contact algorithm ....    2.4563E+01    0.01      9.5649E+01    0.04
      Interf. ID 1            1.5629E+01    0.01      6.0890E+01    0.03
      Interf. ID 2            6.3305E+00    0.00      7.0120E+00    0.00
    Rigid Bodies .........    9.1020E+00    0.00      3.3813E+01    0.02
    EM solver ............    1.9958E+05   96.01      2.0555E+05   95.93
      Misc ...............    2.3263E+04   11.19      2.4359E+04   11.37
      System Solve .......    1.4140E+05   68.02      1.4400E+05   67.21
      FEM matrices setup .    3.6113E+03    1.74      3.9420E+03    1.84
      BEM matrices setup .    7.1746E+03    3.45      7.5619E+03    3.53
      FEMSTER to DYNA ....    1.1102E+04    5.34      1.1983E+04    5.59
      Compute fields .....    1.3031E+04    6.27      1.3704E+04    6.40
    Time step size .......    8.1400E+03    3.92      8.1801E+03    3.82
    Others ...............    2.0477E+00    0.00      2.8137E+01    0.01
      Force Sharing ......    1.8586E+00    0.00      1.1413E+01    0.01
    Misc. 1 ..............    2.7344E+00    0.00      4.5960E+01    0.02
      Update RB nodes ....    9.3052E-01    0.00      5.1470E+00    0.00
    Misc. 2 ..............    1.2236E+00    0.00      1.8888E+01    0.01
    Misc. 3 ..............    2.6780E+01    0.01      4.3281E+01    0.02
    Misc. 4 ..............    3.2197E-01    0.00      1.3296E+01    0.01
      Apply Loads ........    1.3152E-01    0.00      1.0552E+01    0.00
    ----------------------------------------------------------------
    T o t a l s               2.0788E+05  100.00      2.1426E+05  100.00

    Problem time = 6.0005E-04
    Problem cycle = 12003
    Total CPU time = 207879 seconds ( 57 hours 44 minutes 39 seconds)
    CPU time per zone cycle = 71866.657 nanoseconds
    Clock time per zone cycle= 74073.168 nanoseconds

    Parallel execution with 24 MPP proc
    NLQ used/max 64/ 64

    C P U   T i m i n g   i n f o r m a t i o n
    Processor   Hostname   CPU/Avg_CPU   CPU(seconds)
    ---------------------------------------------------------------------------
    #  0        n1680      0.99346       3.6269E+05
    #  1        n1680      1.00936       3.6849E+05
    #  2        n1680      1.00890       3.6833E+05
    #  3        n1680      1.00889       3.6832E+05
    #  4        n1680      1.00896       3.6835E+05
    #  5        n1680      1.00898       3.6835E+05
    #  6        n1680      1.00914       3.6841E+05
    #  7        n1680      1.00893       3.6834E+05
    #  8        n1680      1.00907       3.6839E+05
    #  9        n1680      1.00958       3.6857E+05
    # 10        n1680      1.00812       3.6804E+05
    # 11        n1680      1.00897       3.6835E+05
    # 12        n1680      1.00882       3.6830E+05
    # 13        n1680      0.80560       2.9411E+05
    # 14        n1680      1.00894       3.6834E+05
    # 15        n1680      1.00934       3.6849E+05
    # 16        n1680      1.00934       3.6849E+05
    # 17        n1680      1.00948       3.6854E+05
    # 18        n1680      1.00917       3.6842E+05
    # 19        n1680      1.00934       3.6848E+05
    # 20        n1680      1.00958       3.6857E+05
    # 21        n1680      1.00929       3.6847E+05
    # 22        n1680      1.00945       3.6853E+05
    # 23        n1680      1.00930       3.6847E+05
    ---------------------------------------------------------------------------
    T o t a l s                          8.7618E+06

    Start time 12/26/2024 16:47:27
    End time 12/31/2024 00:15:13
    Elapsed time 372466 seconds for 12003 cycles using 24 MPP procs
    ( 103 hours 27 minutes 46 seconds)

    N o r m a l   t e r m i n a t i o n                  12/31/24 00:15:13
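To see which part of the EM solve dominates, the timing rows can be ranked by clock time. The following is a minimal sketch, not an official LS-DYNA tool: the regular expression and the helper `parse_timing` are hypothetical and assume the row layout shown in this post (a name, a run of dots, then four numeric columns), with sub-items indented.

```python
import re

# EM-related rows copied from the timing table in this post.
# Columns: name, CPU(seconds), %CPU, Clock(seconds), %Clock.
TIMING_ROWS = """\
EM solver ............ 1.9958E+05 96.01 2.0555E+05 95.93
  Misc ............... 2.3263E+04 11.19 2.4359E+04 11.37
  System Solve ....... 1.4140E+05 68.02 1.4400E+05 67.21
  FEM matrices setup . 3.6113E+03 1.74 3.9420E+03 1.84
  BEM matrices setup . 7.1746E+03 3.45 7.5619E+03 3.53
  FEMSTER to DYNA .... 1.1102E+04 5.34 1.1983E+04 5.59
  Compute fields ..... 1.3031E+04 6.27 1.3704E+04 6.40
Time step size ....... 8.1400E+03 3.92 8.1801E+03 3.82
"""

# Assumed row layout: optional indent, name, dots, then four numbers.
ROW = re.compile(
    r"^(?P<indent>\s*)(?P<name>.+?)\s*\.+\s+"
    r"(?P<cpu>\S+)\s+(?P<pcpu>\S+)\s+(?P<clock>\S+)\s+(?P<pclock>\S+)\s*$"
)


def parse_timing(text):
    """Parse timing rows into dicts; indented rows are flagged as sub-items."""
    rows = []
    for line in text.splitlines():
        match = ROW.match(line)
        if match:
            rows.append({
                "name": match["name"],
                "sub": bool(match["indent"]),
                "clock_s": float(match["clock"]),
                "pct_clock": float(match["pclock"]),
            })
    return rows


rows = parse_timing(TIMING_ROWS)
sub_rows = [r for r in rows if r["sub"]]

# Rank the EM solver sub-items by wall-clock time, largest first.
for row in sorted(sub_rows, key=lambda r: r["clock_s"], reverse=True):
    print(f"{row['name']:<20} {row['clock_s']:>12.1f} s  {row['pct_clock']:>6.2f} %")

top = max(sub_rows, key=lambda r: r["clock_s"])
em_total = next(r for r in rows if r["name"] == "EM solver")
share_of_em = top["clock_s"] / em_total["clock_s"]
```

For the rows above, the largest sub-item is "System Solve" at 67.21 % of total clock time, so the linear system solve inside the EM solver is where most of the run time goes.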

-


© 2025 Copyright ANSYS, Inc. All rights reserved.