March 17, 2022 at 10:52 pm

saherrer

Subscriber

Hello all,

I am running an FSI case with 1 million Fluent elements and roughly 10k FEA elements. I ran the same simulation on a 16-core CPU and on a 32-core CPU and found that the solution time is exactly the same. I'd like to know whether it's possible to improve this.

I should also note that both output files reported 99% CPU usage with shared distributed machines partitioning. The 16-core case used 12/125 GB of memory, while the 32-core case used 15/85 GB. Memory allocation was left at the default settings. I used a Linux-based cluster with SLURM scripts.

March 22, 2022 at 12:34 pm

Karthik Remella

Administrator

Hello:

How are you obtaining your solution time? Can you share this data (perhaps some screenshots) with us? Since you are dealing with both Fluent and Mechanical, I'm not sure how the scaling should work. Perhaps … can provide better information about simulation scaling for System Coupling.

Karthik

March 23, 2022 at 2:18 am

saherrer

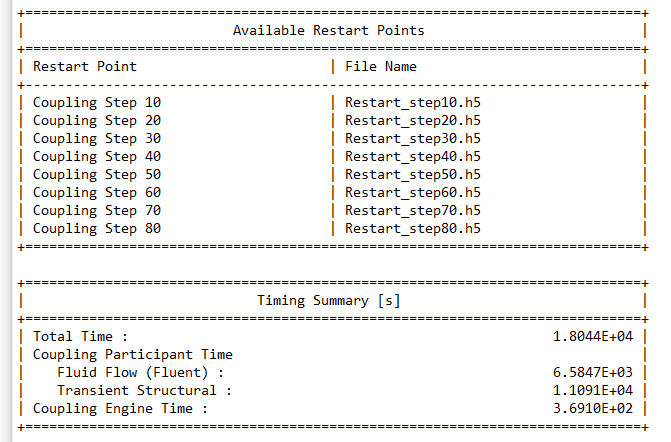

Subscriber

Yes, let me see. I am getting my solution time from the raw output of the HPC server as well as from the output file. Here is a screenshot of each.

16 core

32 core

After reviewing this, I see that it is actually Mechanical that takes up more of the time. I don't think the Mechanical solver needs so many cores for a model of its size.

Another factor could be the identical bonded membrane surface, which uses default settings and a reduced pinball size. Could it also be all the database file outputs I have turned on in Mechanical? Apologies that this has turned into a Mechanical issue.

March 23, 2022 at 2:18 pm

Steve

Ansys Employee

Which version are you using? It's best to use the latest version, 2022 R1, since we have made significant improvements to parallel performance over the last few releases.

How many cores are used for Fluent and for Mechanical in each case? You can run a Mechanical-only case to determine the optimum number of cores to use.
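A core-count scan like the one suggested above usually comes down to comparing speedup and parallel efficiency across runs. Below is a minimal Python sketch; `scaling_table` is a hypothetical helper, not part of any Ansys tool. As placeholder data it uses the MAPDL times reported later in this thread, which come from coupled runs with different Fluent splits, so they only approximate a true Mechanical-only scan.

```python
# Hypothetical helper (not an Ansys API): speedup and parallel efficiency
# for a set of runs, measured relative to the smallest core count.
def scaling_table(timings: dict[int, float]) -> list[tuple[int, float, float, float]]:
    """Return (cores, time_s, speedup, efficiency) rows, sorted by core count."""
    base_cores = min(timings)
    base_time = timings[base_cores]
    rows = []
    for cores in sorted(timings):
        t = timings[cores]
        speedup = base_time / t          # how much faster than the baseline run
        ideal = cores / base_cores       # perfect linear scaling
        rows.append((cores, t, speedup, speedup / ideal))
    return rows


# MAPDL times (s) from the coupled runs on a single 32-core node.
mapdl_times = {4: 12615.0, 8: 7385.0, 12: 5332.0}

for cores, t, s, e in scaling_table(mapdl_times):
    print(f"{cores:>3} cores  {t:>8.0f} s  speedup {s:4.2f}  efficiency {e:4.0%}")
```

On these numbers, going from 4 to 12 MAPDL cores gives roughly a 2.4x speedup (about 79% efficiency). Once efficiency starts dropping sharply, additional cores may do more good on the Fluent side.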

Is the "32 core CPU" a single compute node or is it two 16 core compute nodes?

Steve

March 23, 2022 at 5:15 pm

saherrer

Subscriber

I'm using 2021 R2. The 32-core CPU is a single compute node. Since the shared distributed machines setting is turned on, both Fluent and Mechanical are partitioned across all 32 cores.

March 24, 2022 at 8:12 pm

saherrer

Subscriber

Hi all, I have successfully reduced my solution time. I ran a few more cases with the shared allocated cores setting and got the timings below. I had also forgotten that a distributed bonded contact setting was turned on (CNCHECK,12) in Mechanical.

Single 32-core CPU; times in seconds.

| MAPDL cores | Fluent cores | MAPDL time (s) | Fluent time (s) |
|---|---|---|---|
| 12 | 20 | 5332 | 3221 |
| 8 | 24 | 7385 | 2836 |
| 4 | 28 | 12615 | 2541 |

In the first case (12 MAPDL / 20 Fluent cores), the total time was 2 hours 25 minutes, roughly half the original solution time. Thank you all for your help.
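The reported timings can be turned into a rough total-time estimate for each core split. This Python sketch assumes the two solvers take turns within each coupling step, so their times simply add up. That is an assumption, though it is consistent with the 2 hours 25 minutes reported for the first case. `hms` is a hypothetical formatting helper.

```python
# Sketch: estimate total wall time per core split, ASSUMING MAPDL and Fluent
# run one after the other, so their solve times roughly add up.
runs = [
    # (mapdl_cores, fluent_cores, mapdl_time_s, fluent_time_s) from the thread
    (12, 20, 5332, 3221),
    (8, 24, 7385, 2836),
    (4, 28, 12615, 2541),
]


def hms(seconds: int) -> str:
    """Hypothetical helper: format seconds as 'Xh MMm SSs'."""
    h, rem = divmod(seconds, 3600)
    m, s = divmod(rem, 60)
    return f"{h}h {m:02d}m {s:02d}s"


estimates = []
for mapdl_cores, fluent_cores, t_mapdl, t_fluent in runs:
    total = t_mapdl + t_fluent          # sequential assumption: times add
    mapdl_share = t_mapdl / total       # fraction of wall time spent in MAPDL
    estimates.append((total, mapdl_cores, fluent_cores))
    print(f"MAPDL {mapdl_cores:>2} / Fluent {fluent_cores:>2}: "
          f"~{hms(total)} (MAPDL share {mapdl_share:.0%})")

# Tuples compare by total time first, so min() picks the fastest split.
best_total, best_mapdl, best_fluent = min(estimates)
print(f"Best split: MAPDL {best_mapdl} / Fluent {best_fluent} cores, ~{hms(best_total)}")
```

For the 12/20 split the sum is 8553 s, about 2 h 22.5 min, so the few minutes separating it from the measured 2 h 25 min would presumably be coupling overhead (data mapping, transfers, startup) that the sketch ignores. It also shows why moving cores to Fluent backfired here: Fluent saves a few hundred seconds while MAPDL loses thousands.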

The topic ‘How to improve System Coupling in HPC Clusters?’ is closed to new replies.

© 2025 Copyright ANSYS, Inc. All rights reserved.
