TAGGED: cluster, Distributed-Memory-Parallel, fluent

November 12, 2024 at 10:37 am

drasszkusz

Subscriber

Hi all,

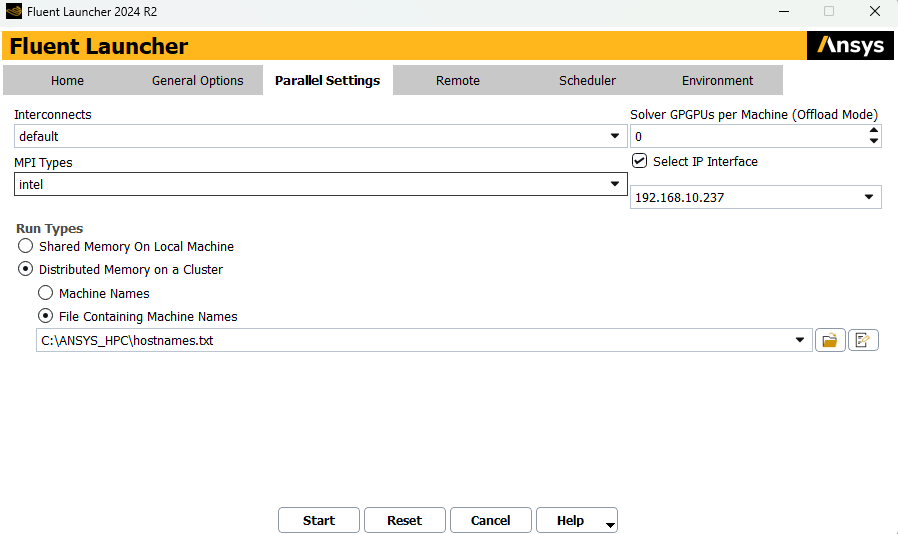

I am trying to run Ansys Fluent 2024 R2 in distributed mode on two computers.

Computer 1:

AMD Ryzen 9 7950X

64 GB RAM

NVIDIA RTX A4000

Windows 11

Computer 2:

AMD Ryzen 9 7950X

64 GB RAM

NVIDIA Quadro 4000

Windows 11

I followed the instructions in the tutorial.

Both computers have the same username and password, and the same Ansys and Intel MPI versions are installed at the same paths. I also checked the recommended Intel MPI version for this Ansys version, and the correct one (2021.8.0) is installed.

The whole C: drive is shared on both Computer 1 and Computer 2. Credentials were registered from both sides.

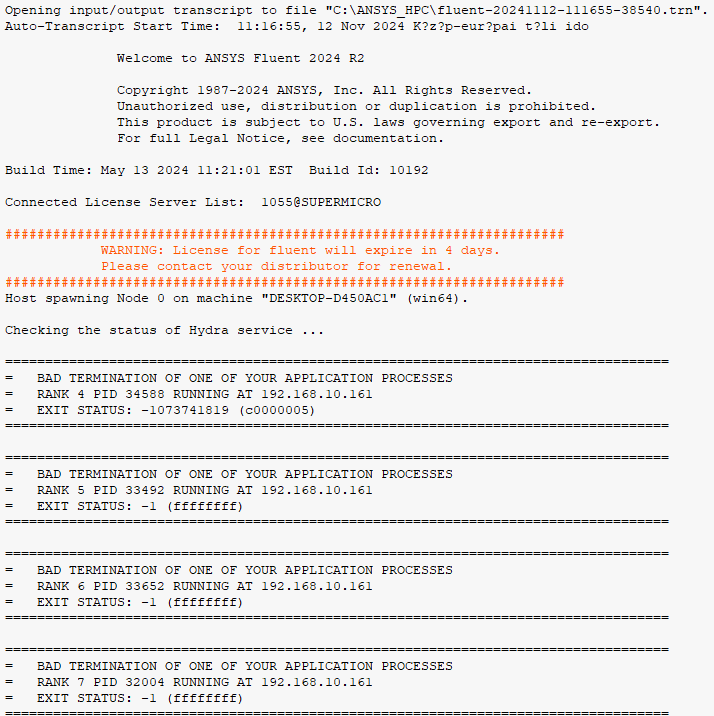

The problem is that I can't run Fluent using both computers' resources at the same time. I can run Fluent from Computer 1 using only Computer 2's resources, and vice versa, but when I specify 4 cores on Computer 1 and 4 cores on Computer 2, I get an error message (see attached photo). It does not matter how many cores I specify; the problem appears whenever I try to use both computers' resources together. Individually they work well.

Thank you in advance for any suggestions that could solve this!

-

November 26, 2024 at 2:01 pm

MangeshANSYS

Ansys Employee

Hello,

Are both machines part of the same Active Directory (i.e. on the same domain), or are they in a workgroup?

-

November 26, 2024 at 2:10 pm

drasszkusz

Subscriber

Hello Mangesh,

Both machines are in the same workgroup, and the firewalls were disabled too.

-

November 26, 2024 at 2:14 pm

MangeshANSYS

Ansys Employee

To the best of my knowledge, MPI requires Active Directory.

-

November 26, 2024 at 2:32 pm

drasszkusz

Subscriber

I think it is or was possible to run Ansys distributed without a domain. Someone did it in 2014 on Windows 7 machines: https://www.cfd-online.com/Forums/fluent/134275-tutorial-run-fluent-distributed-memory-2-windows-7-64-bit-machines.html

But I don't know if something related has changed in the new Ansys or MPI versions.

-

November 26, 2024 at 9:36 pm

MangeshANSYS

Ansys Employee

If you do not suspect authentication to be an issue, then it could be the file system.

Please check whether any disk access or permission issues are causing the error.

A web search for the error codes 1073741819 and c0000005 on the Microsoft website brought up some links. These errors may be coming from the operating system.
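The two values quoted above are probably the same code written two ways: 0xC0000005 is the Windows NTSTATUS value for an access violation, and read as a signed 32-bit integer it becomes -1073741819 (the minus sign is easy to lose when copying). A minimal Python sketch of the conversion follows; the helper names are illustrative only and are not part of any Ansys or Microsoft tool.

```python
def to_signed32(code: int) -> int:
    """Reinterpret a 32-bit value as a signed two's-complement integer."""
    code &= 0xFFFFFFFF
    # Values with the top bit set are negative in two's complement.
    return code - 0x1_0000_0000 if code & 0x8000_0000 else code


def to_unsigned32(code: int) -> int:
    """Reinterpret a (possibly negative) integer as an unsigned 32-bit value."""
    return code & 0xFFFFFFFF


# Hex form as shown in Windows error dialogs, converted to the decimal form.
print(to_signed32(0xC0000005))
# Decimal form converted back to hex.
print(hex(to_unsigned32(-1073741819)))
```

If the decimal and hex codes do match like this, searching for either one should lead to the same set of articles.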

See if the information in any of these is helpful, and consult with your system administrator before taking any actions which could lead to undesired effects.

I am listing each action, followed by the link where it was mentioned on the Microsoft forum:

Create a new user profile, as suggested in

https://answers.microsoft.com/en-us/windows/forum/all/error-1073741819-and-0xc0000005/7948d9d7-c5a7-4358-be7c-88446de90736

system restore

https://answers.microsoft.com/en-us/windows/forum/all/application-error-0xc0000005-file-system-error/967e454e-699c-408a-b838-2ab79b66c3b7

VC++ redistributables (see prerequisites in Ansys setup)

https://answers.microsoft.com/en-us/windows/forum/all/solved-error-code-1073741819-when-running-certain/76b4c0e8-c5ff-43cd-9098-0ae524b422ac

sfc /scannow

DISM.exe /Online /Cleanup-image /Restorehealth

https://answers.microsoft.com/en-us/windows/forum/all/application-error-0xc0000005/6224ae45-a251-4f21-b076-74524618d00a

and

https://answers.microsoft.com/en-us/windows/forum/all/cant-open-any-exe-files-or-windows-apps/747f103e-d91d-4a21-b730-ba75f6d4a282

-

November 27, 2024 at 8:08 am

drasszkusz

Subscriber

It can be a permission issue, as the advanced debug output shows this:

Checking the status of Hydra service ...

[mpiexec@DESKTOP-D450AC1] Launch arguments: C:\\PROGRA~1\\ANSYSI~1\\v242\\fluent\\fluent24.2.0\\multiport\\mpi\\win64\\intel2021\\bin\\hydra_bstrap_proxy.exe --upstream-host 192.168.10.237 --upstream-port 49942 --pgid 0 --launcher service --launcher-number 0 --base-path C:\\PROGRA~1\\ANSYSI~1\\v242\\fluent\\fluent24.2.0\\multiport\\mpi\\win64\\intel2021\\bin --tree-width 16 --tree-level 1 --time-left -1 --launch-type 2 --debug --service_port 0 --proxy-id 0 --node-id 0 --subtree-size 1 --upstream-fd 608 C:\\PROGRA~1\\ANSYSI~1\\v242\\fluent\\fluent24.2.0\\multiport\\mpi\\win64\\intel2021\\bin\\hydra_pmi_proxy.exe --usize -1 --auto-cleanup 1 --abort-signal 9

[proxy:0:1@DESKTOP-GBVKBOL] unable to chdir to C:\\Users\\Dani\\Documents\\ansys

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 568: cmd=init pmi_version=1 pmi_subversion=1

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=response_to_init pmi_version=1 pmi_subversion=1 rc=0

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 568: cmd=get_maxes

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=maxes kvsname_max=256 keylen_max=64 vallen_max=4096

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 568: cmd=get_appnum

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=appnum appnum=0

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 568: cmd=get_my_kvsname

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=my_kvsname kvsname=kvs_27212_0

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 568: cmd=get kvsname=kvs_27212_0 key=PMI_process_mapping

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=get_result rc=0 msg=success value=(vector,(0,2,2))

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 568: cmd=put kvsname=kvs_27212_0 key=-bcast-1-2 value=4D504943485F4E454D5F31303233325F37323335323434393235

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=put_result rc=0 msg=success

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 568: cmd=barrier_in

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 596: cmd=init pmi_version=1 pmi_subversion=1

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=response_to_init pmi_version=1 pmi_subversion=1 rc=0

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 596: cmd=get_maxes

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=maxes kvsname_max=256 keylen_max=64 vallen_max=4096

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 596: cmd=get_appnum

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=appnum appnum=0

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 596: cmd=get_my_kvsname

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=my_kvsname kvsname=kvs_27212_0

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 596: cmd=get kvsname=kvs_27212_0 key=PMI_process_mapping

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=get_result rc=0 msg=success value=(vector,(0,2,2))

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 596: cmd=barrier_in

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 572: cmd=init pmi_version=1 pmi_subversion=1

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=response_to_init pmi_version=1 pmi_subversion=1 rc=0

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 572: cmd=get_maxes

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=maxes kvsname_max=256 keylen_max=64 vallen_max=4096

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 572: cmd=get_appnum

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=appnum appnum=0

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 572: cmd=get_my_kvsname

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=my_kvsname kvsname=kvs_27212_0

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 572: cmd=get kvsname=kvs_27212_0 key=PMI_process_mapping

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=get_result rc=0 msg=success value=(vector,(0,2,2))

[0] MPI startup(): Intel(R) MPI Library, Version 2021.8 Build 20221129

[0] MPI startup(): Copyright (C) 2003-2022 Intel Corporation. All rights reserved.

[0] MPI startup(): library kind: release

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 572: cmd=put kvsname=kvs_27212_0 key=-bcast-1-0 value=4D504943485F4E454D5F32373334305F32333332373638383139

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=put_result rc=0 msg=success

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 572: cmd=barrier_in

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 624: cmd=init pmi_version=1 pmi_subversion=1

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=response_to_init pmi_version=1 pmi_subversion=1 rc=0

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 624: cmd=get_maxes

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=maxes kvsname_max=256 keylen_max=64 vallen_max=4096

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 624: cmd=get_appnum

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=appnum appnum=0

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 624: cmd=get_my_kvsname

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=my_kvsname kvsname=kvs_27212_0

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 624: cmd=get kvsname=kvs_27212_0 key=PMI_process_mapping

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=get_result rc=0 msg=success value=(vector,(0,2,2))

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 624: cmd=barrier_in

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=barrier_out

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=barrier_out

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 624: cmd=get kvsname=kvs_27212_0 key=-bcast-1-0

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=get_result rc=0 msg=success value=4D504943485F4E454D5F32373334305F32333332373638383139

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=barrier_out

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=barrier_out

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 596: cmd=get kvsname=kvs_27212_0 key=-bcast-1-2

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=get_result rc=0 msg=success value=4D504943485F4E454D5F31303233325F37323335323434393235

[0] MPI startup(): libfabric version: 1.13.2-impi

[0] MPI startup(): libfabric provider: tcp;ofi_rxm

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 572: cmd=put kvsname=kvs_27212_0 key=bc-0 value=mpi#0200C326A9FE2BE20000000000000000$

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=put_result rc=0 msg=success

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 624: cmd=put kvsname=kvs_27212_0 key=bc-1 value=mpi#0200C327A9FE2BE20000000000000000$

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=put_result rc=0 msg=success

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 572: cmd=barrier_in

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 624: cmd=barrier_in

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 596: cmd=put kvsname=kvs_27212_0 key=bc-3 value=mpi#0200EE09C0A80AA10000000000000000$

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=put_result rc=0 msg=success

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 568: cmd=put kvsname=kvs_27212_0 key=bc-2 value=mpi#0200EE08C0A80AA10000000000000000$

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=put_result rc=0 msg=success

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 596: cmd=barrier_in

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 568: cmd=barrier_in

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=barrier_out

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=barrier_out

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 624: cmd=get kvsname=kvs_27212_0 key=bc-2

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=barrier_out

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=barrier_out

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=get_result rc=0 msg=success value=mpi#0200EE08C0A80AA10000000000000000$

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 572: cmd=get kvsname=kvs_27212_0 key=bc-0

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=get_result rc=0 msg=success value=mpi#0200C326A9FE2BE20000000000000000$

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 596: cmd=get kvsname=kvs_27212_0 key=bc-2

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=get_result rc=0 msg=success value=mpi#0200EE08C0A80AA10000000000000000$

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 568: cmd=get kvsname=kvs_27212_0 key=bc-0

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=get_result rc=0 msg=success value=mpi#0200C326A9FE2BE20000000000000000$

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 596: cmd=get kvsname=kvs_27212_0 key=bc-3

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=get_result rc=0 msg=success value=mpi#0200EE09C0A80AA10000000000000000$

[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 568: cmd=get kvsname=kvs_27212_0 key=bc-1

[proxy:0:1@DESKTOP-GBVKBOL] PMI response: cmd=get_result rc=0 msg=success value=mpi#0200C327A9FE2BE20000000000000000$

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 624: cmd=get kvsname=kvs_27212_0 key=bc-3

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=get_result rc=0 msg=success value=mpi#0200EE09C0A80AA10000000000000000$

[proxy:0:0@DESKTOP-D450AC1] pmi cmd from fd 572: cmd=get kvsname=kvs_27212_0 key=bc-1

[proxy:0:0@DESKTOP-D450AC1] PMI response: cmd=get_result rc=0 msg=success value=mpi#0200C327A9FE2BE20000000000000000$

[0] MPI startup(): File "/tuning_generic_shm-ofi_tcp-ofi-rxm.dat" not found

[0] MPI startup(): Load tuning file: "/tuning_generic_shm-ofi.dat"

[0] MPI startup(): File "/tuning_generic_shm-ofi.dat" not found

[0] MPI startup(): File "/tuning_generic_shm-ofi.dat" not found

[0] MPI startup(): File "" not found

[0] MPI startup(): Unable to read tuning file for ch4 level

[0] MPI startup(): File "" not found

[0] MPI startup(): Unable to read tuning file for net level

[0] MPI startup(): File "" not found

[0] MPI startup(): Unable to read tuning file for shm level

[0] MPI startup(): Imported environment partly inaccesible. Map=0 Info=0

[1] MPI startup(): Imported environment partly inaccesible. Map=0 Info=0

[3] MPI startup(): Imported environment partly inaccesible. Map=0 Info=0

[2] MPI startup(): Imported environment partly inaccesible. Map=0 Info=0

The Windows 11 system is quite new, as it was installed at the beginning of September. I have the VC++ redistributables installed too.
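In the debug output above, the only line that reports an outright failure is the `unable to chdir` message from the proxy on DESKTOP-GBVKBOL, which suggests the working directory does not exist or is not accessible under that path on that node. As a rough sketch, a log like this can be scanned for such lines in Python; the function name and the regular expression are assumptions based only on the line format seen in this log, not on any documented Intel MPI output format.

```python
import re

# Pattern modelled on the single failing line in the log above (an assumption).
CHDIR_RE = re.compile(r"^\[proxy:\d+:\d+@([^\]]+)\] unable to chdir to (.+)$")


def find_chdir_failures(log_text):
    """Return (host, path) pairs for every 'unable to chdir' line in the log."""
    failures = []
    for line in log_text.splitlines():
        match = CHDIR_RE.match(line.strip())
        if match:
            failures.append((match.group(1), match.group(2).strip()))
    return failures


# Two lines copied from the log above; the doubled backslashes are kept as pasted.
sample = r"""[proxy:0:1@DESKTOP-GBVKBOL] unable to chdir to C:\\Users\\Dani\\Documents\\ansys
[proxy:0:1@DESKTOP-GBVKBOL] pmi cmd from fd 568: cmd=init pmi_version=1 pmi_subversion=1"""

for host, path in find_chdir_failures(sample):
    print(f"{host}: could not change into {path}")
```

Checking whether the reported folder exists on the second computer, or starting the run from a folder that both machines can reach under the same path, may help confirm or rule this out.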

I will give Active Directory a try when I have time. I just tried to avoid it, as it seemed difficult to set up without Windows Server. Thank you for the suggestions.
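One more thing the log may hint at: the `bc-0` to `bc-3` values that the ranks exchange look like hex-encoded IPv4 socket addresses. This is only a guess from the byte layout (2-byte address family, 2-byte port, 4-byte address, zero padding), not a documented format. Under that assumption, the Python sketch below decodes them; the function name is hypothetical.

```python
import ipaddress
import struct


def decode_business_card(value):
    """Decode an 'mpi#<hex>$' value, ASSUMING it holds an IPv4 sockaddr_in."""
    hex_payload = value.split("#", 1)[1].rstrip("$")
    raw = bytes.fromhex(hex_payload[:16])  # first 8 bytes: family, port, address
    family = struct.unpack_from("<H", raw, 0)[0]  # host byte order (little-endian)
    port = struct.unpack_from(">H", raw, 2)[0]    # network byte order (big-endian)
    address = ipaddress.IPv4Address(raw[4:8])
    return family, port, address


# Values copied from the log: bc-0 comes from DESKTOP-D450AC1, bc-2 from DESKTOP-GBVKBOL.
for key, value in [
    ("bc-0", "mpi#0200C326A9FE2BE20000000000000000$"),
    ("bc-2", "mpi#0200EE08C0A80AA10000000000000000$"),
]:
    family, port, address = decode_business_card(value)
    print(key, family, address, port, "link-local" if address.is_link_local else "")
```

If this reading is right, the ranks on DESKTOP-D450AC1 advertise a 169.254.x.x (link-local) address, while mpiexec was started with upstream host 192.168.10.237 and the other machine advertises a 192.168.10.x address. That could mean MPI is picking a second, unconfigured network adapter on Computer 1, which would be worth checking before setting up a domain.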


© 2025 Copyright ANSYS, Inc. All rights reserved.