May 29, 2023 at 2:47 pm

a.aissa.berraies

Subscriber

Dear all,

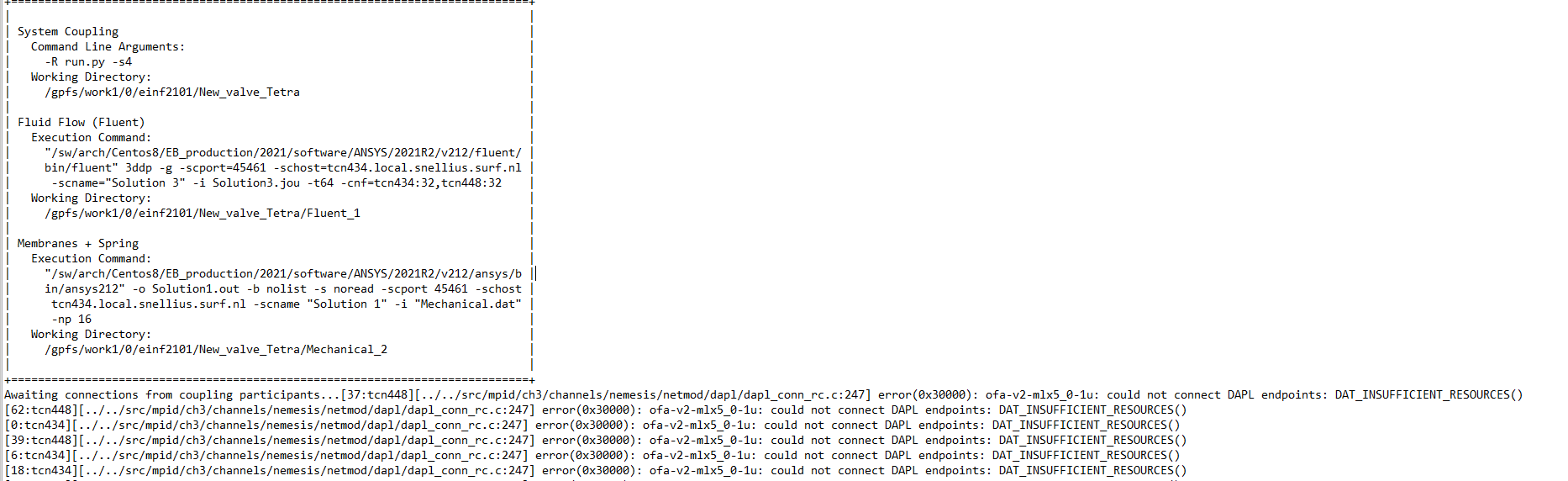

I've been running FSI simulations with Ansys System Coupling for quite some time now. I currently use the SURF Snellius supercomputer for heavy workloads, with Ansys 2021 R2. Although my simulations require extensive computational power, I suspect that the actual runtime could be reduced further. One example is a two-way, strongly coupled simulation of a microfluidic valve interacting with a soft, incompressible membrane that allows or blocks the flow.

Typically, I restrict myself to roughly 1 million PUMA-type cells on the Fluent side and about 50K SOLID285 elements in transient Mechanical. The problems also involve contact and gap modeling, and they require careful attention to avoid floating-point exceptions and divergence.

Now I have run into an issue when running the simulation on multiple nodes, and I am sharing the error from the SyC log for your consideration. When I submit the job with sbatch on multiple nodes, the Slurm job does not fail; instead, the simulation stalls.

I am not an expert on parallelization, but I think the problem is related to MPI. In fact, I remember that I had to export the following environment variable to run FSI simulations on one node with multiple cores:

```bash
export LD_PRELOAD=$EBROOTANSYS/v212/commonfiles/MPI/Intel/2018.3.222/linx64/lib/libstrtok.so
```
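A small guard around this export can make a wrong path fail loudly instead of silently. The following is a hedged Bash sketch: only the library path comes from the post, and the function name `preload_strtok` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: set LD_PRELOAD only if the libstrtok.so library from the
# Intel MPI 2018 directory inside the Ansys install actually exists.
# The relative path is copied from the original export line; the function
# name and its root-directory argument are hypothetical.

preload_strtok() {
  local ansys_root="$1"   # e.g. "$EBROOTANSYS" on an EasyBuild-managed cluster
  local lib="$ansys_root/v212/commonfiles/MPI/Intel/2018.3.222/linx64/lib/libstrtok.so"
  if [ -f "$lib" ]; then
    export LD_PRELOAD="$lib"
    echo "LD_PRELOAD set to $lib"
  else
    echo "libstrtok.so not found under $ansys_root" >&2
    return 1
  fi
}

# Usage in the batch script:
# preload_strtok "$EBROOTANSYS" || exit 1
```

One point that may matter for multi-node runs: a variable exported in the batch shell is inherited by processes that Slurm starts with `srun` (which exports the full environment by default), but processes started on other nodes over `ssh` do not automatically see it. Whether the coupled solvers start remote processes that way depends on the setup, so this is something to check rather than a diagnosis.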

The Slurm batch file itself seems fine: it is consistent with the one proposed in the Ansys Learning Hub (ALH) SyC course. The issue appears as soon as I run on multiple nodes.

I tried suggestions from other discussions, such as this one: /forum/forums/topic/fluent-2021-r1-running-on-a-linux-server-has-issues-with-intel-mpi-2018/, but the problem persists and I have not found a way to solve it.

I am looking forward to your suggestions.

Thanks a lot,

Best regards,

Ahmed

-

May 30, 2023 at 2:37 pm

Rahul

Ansys Employee

Hi Ahmed,

Please check whether your computing infrastructure is supported for the Ansys version you are using.

Our supported platforms page is at https://www.ansys.com/solutions/solutions-by-role/it-professionals/platform-support

-

May 31, 2023 at 2:08 pm

a.aissa.berraies

Subscriber

Hi,

Our computing infrastructure should be compatible with the version I am using (2021 R2); otherwise, I suppose I would not be able to run parallel jobs in the first place.

Thanks,

-

June 7, 2023 at 9:55 am

Ahmed_Aissa

Subscriber

Dear all,

Following up on my previous post (which, unfortunately, was not resolved), I would like to share additional information that might help find a workaround. For your consideration, here are the relevant lines of a batch script that I tried to run on 2 nodes:

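```bash
# Host names allocated by Slurm to this job, one per line
FLUENTNODES="$(scontrol show hostnames)"
# Unquoted echo joins the lines with spaces; tr turns the spaces into commas
FLUENTNODES=$(echo $FLUENTNODES | tr ' ' ',')
# Launch System Coupling on the listed hosts and run the run.py script
$ANSYSROOT/SystemCoupling/bin/systemcoupling --cnf="$FLUENTNODES:16" -s4 -R run.py
```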
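With these lines, `"$FLUENTNODES:16"` expands to a string such as `node1,node2:16`, so the `:16` is attached only to the last host. If `--cnf` expects one `host:cores` entry per machine (an assumption here, similar to the `host:cores` convention of Fluent's `-cnf` option; the System Coupling 2021 R2 documentation is the authority), a per-host list could be built as in this Bash sketch. The helper `build_cnf` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: turn a list of host names into "host1:cores,host2:cores,...".
# ASSUMPTION: --cnf accepts one "host:cores" entry per machine.
# The function name build_cnf is hypothetical.

build_cnf() {
  local cores="$1"   # cores per node, e.g. 16 (or $SLURM_NTASKS_PER_NODE)
  local hosts="$2"   # host names separated by spaces or newlines
  local cnf="" host
  for host in $hosts; do
    # ${cnf:+$cnf,} adds a comma only when cnf already has an entry
    cnf="${cnf:+$cnf,}$host:$cores"
  done
  printf '%s\n' "$cnf"
}

# Usage in the batch script (the remaining flags are unchanged from the post):
# CNF=$(build_cnf 16 "$(scontrol show hostnames)")
# "$ANSYSROOT/SystemCoupling/bin/systemcoupling" --cnf="$CNF" -s4 -R run.py
```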

The job runs for less than a minute: in the Slurm queue I can see the assigned nodes, but then the job fails and the log file shows the error message "RuntimeError: Compute nodes did not start properly".
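Since the error says the compute nodes did not start, one hedged first step is to confirm that Slurm itself can start a process on every allocated node, independently of Ansys. The Bash sketch below uses only standard Slurm commands (`scontrol show hostnames`, `srun`); the function name `check_nodes` is hypothetical, and a passing check only rules out one possible cause.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check (not part of the original script): before
# launching System Coupling, run `hostname` once on every allocated node
# and report any node that did not answer.
# Assumes it runs inside an sbatch allocation (SLURM_JOB_NUM_NODES is set).

check_nodes() {
  local expected reached host missing=""
  # Host names Slurm allocated to this job (one per line).
  expected=$(scontrol show hostnames)
  # Host names that actually ran a task, one task per node.
  # Some clusters print fully qualified names here, which would need trimming.
  reached=$(srun --nodes="$SLURM_JOB_NUM_NODES" --ntasks-per-node=1 hostname)
  for host in $expected; do
    # Pad with spaces so that "node1" does not match inside "node10".
    case " $(echo $reached) " in
      *" $host "*) ;;
      *) missing="$missing $host" ;;
    esac
  done
  if [ -n "$missing" ]; then
    echo "Nodes not reachable via srun:$missing" >&2
    return 1
  fi
  echo "All allocated nodes reachable: $(echo $expected | tr ' ' ',')"
}

# Usage in the batch script, before the systemcoupling line:
# check_nodes || exit 1
```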

I look forward to your help.

Best regards,

-

- The topic ‘Parallelization issues for FSI simulations using Slurm’ is closed to new replies.

© 2025 Copyright ANSYS, Inc. All rights reserved.