-

April 15, 2024 at 10:44 pm

Vighnesh Natarajan

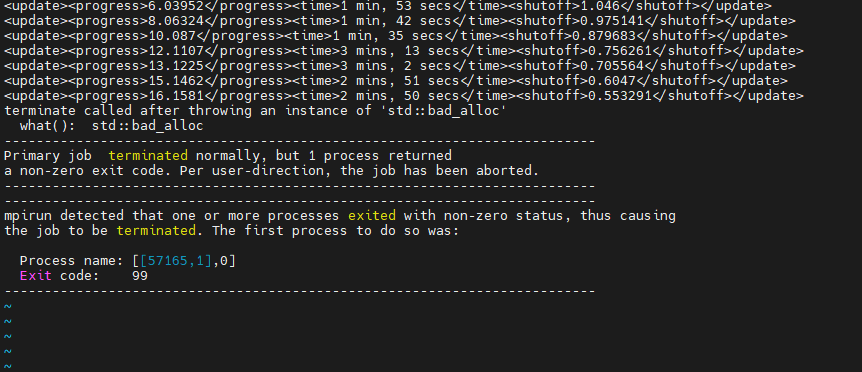

Subscriber

I run many parallel jobs on a cluster. Occasionally, for no apparent reason, a job crashes with "std::bad_alloc" and no further information. The RAM is sufficient: I have allocated 10x the RAM that the requirements ask for and, monitoring with htop, have verified that this limit is never reached. The crash does not happen when I use a single core with the "Local computer" resource setting. It happens only on parallel SLURM jobs with multiple cores, which I need in order to speed up simulations.

This crash is not reproducible: it happens at random. If I rerun the same job (same simulation, same resources in the resource manager), it sometimes runs and sometimes does not. This leads me to believe that the cause is not RAM but some other problem.

At times the issue is tolerable, with many job submissions completing without a crash. At other times it crashes quite often, which interrupts the sweeps of simulations that I run.
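Until the cause is identified, one way to keep a sweep moving is to rerun a failed simulation a bounded number of times. The following is a minimal sketch, not a fix for the crash itself: `retry` is a hypothetical helper, and it assumes that the runs are started from a shell script rather than from the GUI, and that a crashed engine run ends with a non-zero exit status.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command up to MAX_TRIES times until it exits 0.
# Usage: retry MAX_TRIES command [args...]
retry() {
    local max_tries=$1
    shift
    local attempt=1
    local status=0
    while [ "$attempt" -le "$max_tries" ]; do
        # Success ends the loop immediately.
        "$@" && return 0
        status=$?
        echo "attempt $attempt/$max_tries failed with exit status $status" >&2
        attempt=$((attempt + 1))
    done
    # Every attempt failed: report the last exit status to the caller.
    return "$status"
}
```

For example, `retry 3 ./run_one_simulation.sh` (a hypothetical script name) would try the same run at most three times before giving up, so a single random crash no longer stops the sweep.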

This is with Lumerical version 2022 R2

-

April 17, 2024 at 11:11 pm

Lito

Ansys Employee

@Vighnesh Natarajan,

I am sorry to hear that you are having issues running Lumerical simulations on your cluster. To debug/troubleshoot the issue:

- What is the operating system and version running on the cluster?

- Which Lumerical product/solver are you running? FDTD, MODE, CHARGE, HEAT, or INTERCONNECT?

- How are you running the simulation job? Are you using a job scheduler submission script and running this from the Terminal on the cluster head/login node?

- What is the job scheduler on your cluster - if you are using one?

- Please paste the execution line from the submission script, or the command you run on the cluster if you are not submitting to a job scheduler.

(e.g. running FDTD with bundled MPICH2)

/install_path/lumerical/v222/mpich2/nemesis/bin/mpiexec -n 32 /install_path/lumerical/v222/bin/fdtd-engine-mpich2nem -t 1 /path_to/simulationfile.fsp

-

April 17, 2024 at 11:26 pm

Vighnesh Natarajan

Subscriber

Hello,

- The operating system is Ubuntu 20.04.6 LTS (Focal Fossa). I log in via ssh and, using X11, can open a GUI window.

- This issue comes up with FDTD.

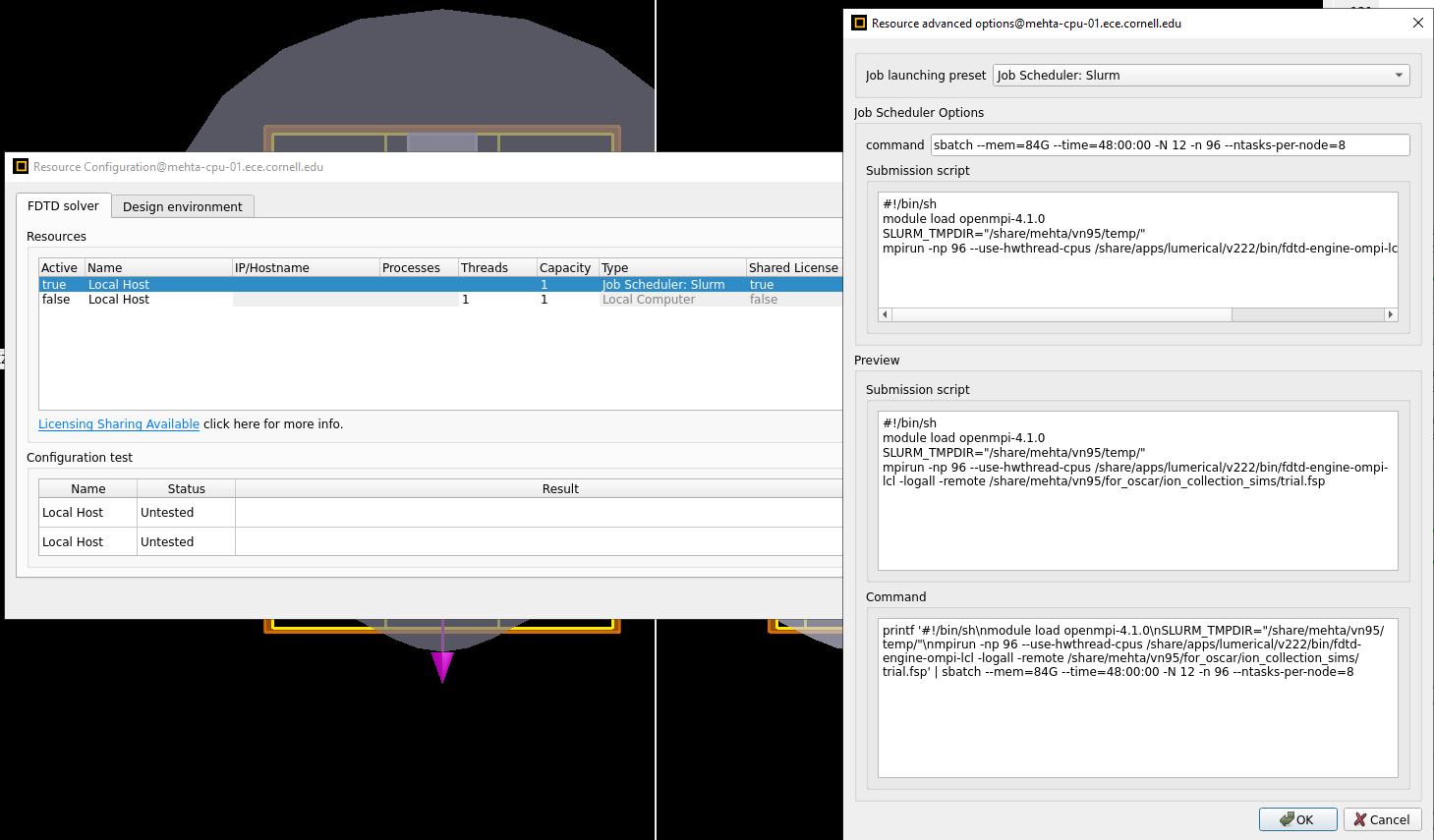

- The issue occurs only when I use SLURM as the job scheduler. In the resource manager in the GUI, I use SLURM as the job launcher and have set up a batch script that runs the job. An example:

- The sbatch command is "sbatch --mem=84G --time=48:00:00 -N 12 -n 96 --ntasks-per-node=8".

- This invokes "mpirun -np 96 --use-hwthread-cpus /share/apps/lumerical/v222/bin/fdtd-engine-ompi-lcl -logall -remote {PROJECT_FILE_PATH}".

- SLURM is the only way I can submit large batch jobs to the cluster across multiple nodes. If I set the resource manager to "Local computer", it uses the mpich2nem solver and never crashes, but it uses only one core: the core from which I launched the GUI in the remote ssh session.
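One check that fits this setup is to record the limits in effect inside the batch job, since they can differ from what an interactive login shell reports, and the sbatch documentation defines `--mem` as a per-node value (shared here by 8 tasks per node). The sketch below is only a diagnostic aid under those assumptions: `report_limits` is a hypothetical name, and nothing in this thread confirms that limits are the cause of the crash.

```shell
#!/usr/bin/env bash
# Hypothetical diagnostic: print the host name and the resource limits that
# the current shell, and therefore any engine it launches, runs under.
report_limits() {
    echo "host=$(uname -n)"
    # bash reports these limits in units of 1024 bytes, or "unlimited".
    echo "virtual_memory_kb=$(ulimit -v)"
    echo "locked_memory_kb=$(ulimit -l)"
    echo "stack_kb=$(ulimit -s)"
    # Set by SLURM inside a job; printed as "unset" anywhere else.
    echo "slurm_job_id=${SLURM_JOB_ID:-unset}"
    echo "slurm_mem_per_node=${SLURM_MEM_PER_NODE:-unset}"
}
```

Calling `report_limits` in the batch script just before the mpirun line writes the values to the job's .out file, next to any "std::bad_alloc" message. It reports only the node on which the batch script itself runs, so comparing a crashed run with a successful one is more informative than a single reading.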

Hope this information is useful in helping diagnose the problem.

-

April 19, 2024 at 9:25 pm

Lito

Ansys Employee

@Vighnesh Natarajan,

- Are you running the job from the Lumerical CAD/GUI on the master/login node on the cluster? Or are you "sending the job to the cluster" from your local computer?

- Which version of Lumerical are you using? e.g. latest release, 2024 R1.2.

- Send a screenshot of your "Resources advanced options". (similar to the image below)

See this KB for the job integration details on the cluster. >>Lumerical job scheduler integration (Slurm, Torque, LSF, SGE) – Ansys Optics

-

April 20, 2024 at 5:43 pm

Vighnesh Natarajan

Subscriber

Hello,

- I run the job from a GUI launched on a login node of the cluster. The resource manager in the GUI is set to use SLURM, which submits a batch job to the cluster.

- Lumerical version is 2022 R2 (from the about page under help in the GUI)

- I have attached an image below. OpenMPI is already loaded, version 4.1.0. I have seen that KB article and I think I am following the right guidelines: the script I use does run the job. The main mystery is that the job occasionally crashes with the error "std::bad_alloc"; as stated earlier, the job runs 90% of the time and fails 10%. The memory allocated for the job is at least 10x what it needs (what I observe is described in more detail in the first message of this post), and the same job does not crash on a single core on the local computer, although it would take forever, so this is definitely not a RAM issue. I am unable to figure out why there is a bad_alloc or where it is happening. I have also attached below an image of the error thrown in the .out file of a job.

-

April 22, 2024 at 7:41 pm

Lito

Ansys Employee

@Vighnesh Natarajan,

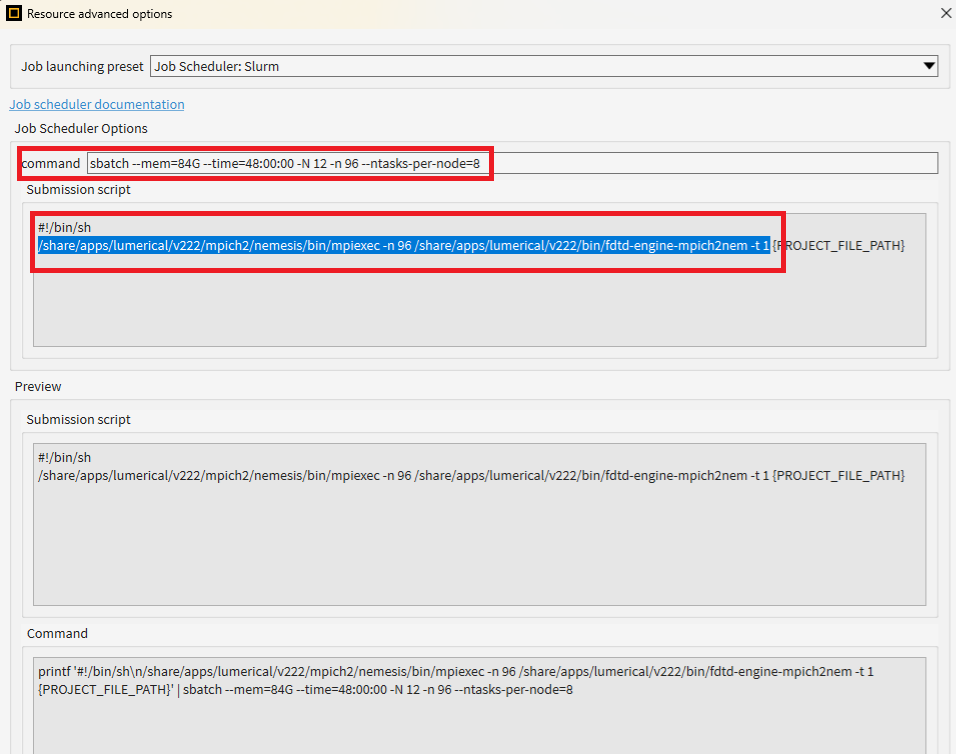

Try using 'mpiexec' with OpenMPI instead of 'mpirun', as shown in the example in our KB.

>>Running simulations with MPI on Linux – Ansys Optics

(using the install path for OpenMPI shown)

/usr/lib64/openmpi/bin/mpiexec -n 96 /share/apps/lumerical/v222/bin/fdtd-engine-ompi-lcl -t 1 {PROJECT_FILE_PATH}

Have you tried running with the Lumerical bundled MPICH2? Does it have the same issue?

/share/apps/lumerical/v222/mpich2/nemesis/bin/mpiexec -n 96 /share/apps/lumerical/v222/bin/fdtd-engine-mpich2nem -t 1 {PROJECT_FILE_PATH}

-

April 22, 2024 at 9:06 pm

Vighnesh Natarajan

Subscriber

Hello,

Thank you very much for your suggestions. I may have tried mpiexec, but I do not recall. I have not yet tried the Lumerical bundled MPICH2. I will try both suggestions and report back here on how they work.
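The two launch lines suggested above differ only in the launcher and the engine binary, so trying both can be scripted. The following is a minimal sketch that assumes the install paths quoted in this thread; `fdtd_launch_cmd` and the variant names `openmpi` and `mpich2` are hypothetical, and the function only prints a command line rather than running it.

```shell
#!/usr/bin/env bash
# Paths as quoted in the thread; adjust them for a different install.
LUMERICAL_ROOT=/share/apps/lumerical/v222
OPENMPI_MPIEXEC=/usr/lib64/openmpi/bin/mpiexec

# Hypothetical helper: print the launch line for one of the two variants.
# Usage: fdtd_launch_cmd VARIANT NPROCS PROJECT_FILE
fdtd_launch_cmd() {
    local variant=$1 nprocs=$2 project=$3
    case "$variant" in
        openmpi)
            # System OpenMPI launcher with the OpenMPI build of the engine.
            echo "$OPENMPI_MPIEXEC -n $nprocs $LUMERICAL_ROOT/bin/fdtd-engine-ompi-lcl -t 1 $project"
            ;;
        mpich2)
            # Bundled MPICH2 launcher with the matching engine build.
            echo "$LUMERICAL_ROOT/mpich2/nemesis/bin/mpiexec -n $nprocs $LUMERICAL_ROOT/bin/fdtd-engine-mpich2nem -t 1 $project"
            ;;
        *)
            echo "unknown variant: $variant" >&2
            return 1
            ;;
    esac
}
```

Printing the line first, for example with `fdtd_launch_cmd mpich2 96 {PROJECT_FILE_PATH}`, makes it easy to paste the exact command into the batch script in the resource manager and to note which variant a crashed job used.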

-

- The topic ‘Lumerical cluster job crashes arbitrarily’ is closed to new replies.


© 2025 Copyright ANSYS, Inc. All rights reserved.