TAGGED: batch-hpc


August 21, 2023 at 6:46 pm

ziqii.liu

Subscriber

Hi everyone,

I am attempting to run Ansys HFSS on the Scinet supercomputer, which comprises 4 nodes, each with 40 cores available for running Slurm jobs. My goal is to simulate a large domain using HFSS's domain decomposition feature, which solves different domains simultaneously on separate nodes. I intend to split the simulation into 4 tasks and distribute them across the nodes. I am familiar with distributing tasks on a single node, but I am unsure how to distribute tasks across multiple nodes.
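A minimal sketch of a Slurm resource request matching the layout described above (4 nodes, 40 cores each, one task per node), assuming the cluster allows whole-node allocations. The job name `hfss_dd` and the helper `core_count` are hypothetical; the core-count arithmetic mirrors the `CoreCount=$((SLURM_JOB_NUM_NODES * SLURM_CPUS_ON_NODE))` line in the moderator's example script. Whether HFSS runs better with one task per node or several (the moderator's example uses `-N 3 -n 12`) is a tuning choice this sketch does not settle.

```bash
#!/bin/bash
# Hypothetical request for 4 nodes x 40 cores, one Slurm task per node.
# Adjust the counts to what your allocation actually provides.
#SBATCH -N 4                  # 4 nodes
#SBATCH --ntasks-per-node=1   # one task per node
#SBATCH --cpus-per-task=40    # give that task all 40 cores of its node
#SBATCH -J hfss_dd            # hypothetical job name

# Total cores in the allocation, computed like the moderator's CoreCount line.
# Falls back to 0 when run outside a Slurm job (variables unset).
core_count() {
  echo $(( ${SLURM_JOB_NUM_NODES:-0} * ${SLURM_CPUS_ON_NODE:-0} ))
}

# Value that would be passed as: -machinelist numcores=<value>
# (4 * 40 = 160 in such a job, assuming SLURM_CPUS_ON_NODE reports 40).
echo "numcores=$(core_count)"
```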

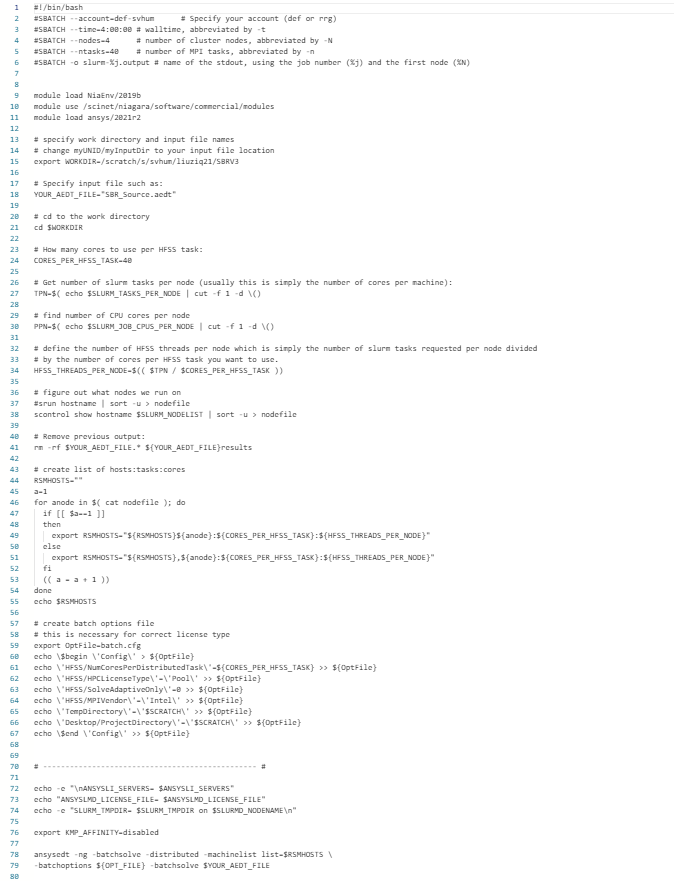

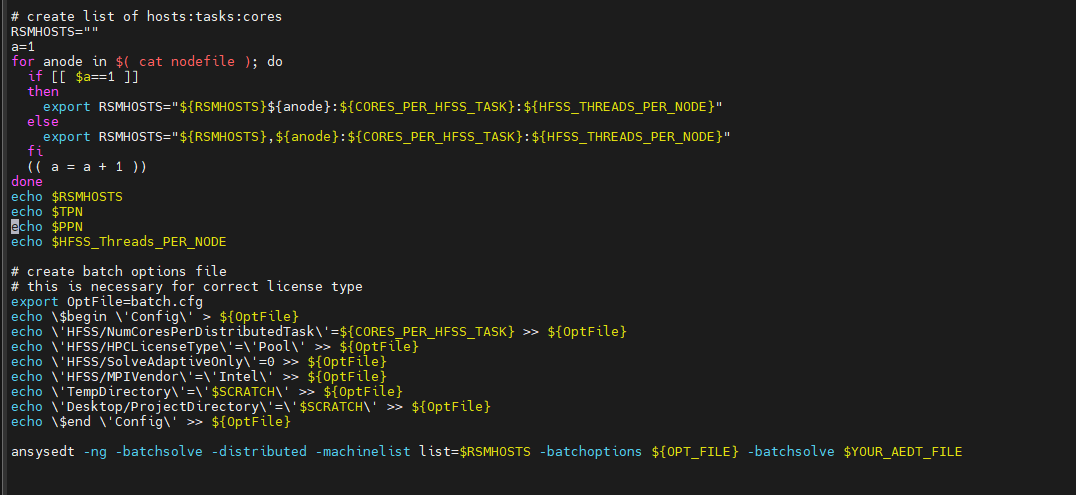

Below is the code I am currently using. To submit the Slurm job, I use the command `sbatch sample.sh`. Could someone kindly help me with this? I am not sure where I went wrong.

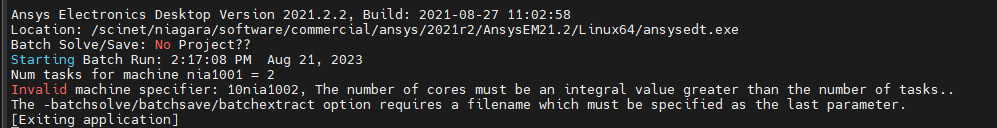

It gives me the following error:


August 25, 2023 at 2:45 pm

randyk

Forum Moderator

Hi Ziqi,

What is the OS version? `cat /etc/*release`

What is the SLURM version? `sinfo -V`
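The SLURM version matters because the 2021R2 wrapper fix in the notes hinges on whether SLURM is at least 20.11, the release in which srun gained `--overlap`. A small sketch of that check, written against the `slurm X.Y.Z` string that `sinfo -V` prints; the function name `supports_overlap` is hypothetical, and unlike the stock wrapper's major-version-only test, it also compares the minor version.

```bash
#!/bin/bash
# Succeeds (exit status 0) if a SLURM version string is 20.11 or newer,
# i.e. if srun should understand '--overlap'. Accepts "slurm 20.11.8"
# (as printed by `sinfo -V`) or a bare "20.11.8".
supports_overlap() {
  local ver="${1#slurm }"     # drop an optional leading "slurm "
  local major="${ver%%.*}"    # "20.11.8" -> "20"
  local rest="${ver#*.}"      # "20.11.8" -> "11.8"
  local minor="${rest%%.*}"   # "11.8"    -> "11"
  [ "$rest" = "$ver" ] && minor=0   # no dot at all: treat minor as 0
  # test's -gt/-ge compare in base 10, so "08" is read as 8, not as octal.
  [ "$major" -gt 20 ] || { [ "$major" -eq 20 ] && [ "$minor" -ge 11 ]; }
}

# Usage on a cluster login node:
#   if supports_overlap "$(sinfo -V)"; then echo "srun --overlap is available"; fi
```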

Note that AnsysEM2021R2 was the first release to officially support SLURM. There were a few bugs that needed workarounds. I would consider migrating to a current version of AEDT.

Here are my notes regarding AEDT2021R2 on SLURM:

If you get a case with AEDT2021R2 and SLURM 20.x, run through this first.

Important: the customer must be running AEDT 2021R2 and SLURM 20.x for the following steps.

Gather the following to confirm the scheduler version and network info:

```
$ cat /etc/*release
$ sinfo -V
$ ifconfig
```

Enabling tight integration requires editing slurm_srun_wrapper.sh and setting the batch option 'RemoteSpawnCommand'.

1. (TFS447753) Edit slurm_srun_wrapper.sh. Archive/copy .../AnsysEM21.2/Linux64/schedulers/scripts/utils/slurm_srun_wrapper.sh to slurm_srun_wrapper.sh.ORIG, then edit .../AnsysEM21.2/Linux64/schedulers/scripts/utils/slurm_srun_wrapper.sh and insert the following at line 28:

```bash
host=$(echo "${host}" | cut -d'.' -f1)
```

For example (the inserted lines are marked `=>`):

```bash
if [[ -n "$ANSYSEM_SLURM_JOB_ID" ]]
then
export SLURM_JOB_ID="${ANSYSEM_SLURM_JOB_ID}"
echo "set SLURM_JOB_ID=${SLURM_JOB_ID}" >> "$DEBUG_FILE"
fi
=> # tfs447753
=> host=$(echo "${host}" | cut -d'.' -f1)
verStr=`scontrol --version`
```

2. (TFS554680) If the SLURM version is 20.0-20.10: the generic SLURM scheduler integration does not check the SLURM minor version. The srun '--overlap' option was introduced in SLURM 20.11, but slurm_srun_wrapper.sh only checks whether the version is >= 20 and then applies the srun '--overlap' option. Edit .../AnsysEM21.2/Linux64/schedulers/scripts/utils/slurm_srun_wrapper.sh and comment out the appropriate srun and if/else/fi lines at the bottom of the file:

```bash
# if [ "${ver[0]}" -ge 20 ];then # SLURM ver >= 20.**.**
#     echo "srun --overcommit --overlap --export=ALL -n 1 -N 1 --cpu-bind=none --mem-per-cpu=0 -w ${host} $@" >> "$DEBUG_FILE"
#     srun --overcommit --overlap --export=ALL -n 1 -N 1 --cpu-bind=none --mem-per-cpu=0 -w $host "$@"
# else
    echo "srun --overcommit --export=ALL -n 1 -N 1 --cpu-bind=none --mem-per-cpu=0 -w ${host} $@" >> "$DEBUG_FILE"
    srun --overcommit --export=ALL -n 1 -N 1 --cpu-bind=none --mem-per-cpu=0 -w $host "$@"
# fi
```

This should enable the customer to run manual jobs for adaptive meshing, and then auto jobs for frequency sweeps.

3. After making the slurm_srun_wrapper.sh change, run the following as root or the installation owner to set the defaults to tight integration (this will create/modify //AnsysEM21.2/Linux64/config/default.XML):

```bash
cd /opt/AnsysEM/AnsysEM21.2/Linux64
./UpdateRegistry -set -ProductName ElectronicsDesktop2021.2 -RegistryKey "Desktop/Settings/ProjectOptions/ProductImprovementOptStatus" -RegistryValue 0 -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2021.2 -RegistryKey "HFSS/MPIVendor" -RegistryValue "Intel" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2021.2 -RegistryKey "HFSS 3D Layout Design/MPIVendor" -RegistryValue "Intel" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2021.2 -RegistryKey "Maxwell 2D/MPIVendor" -RegistryValue "Intel" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2021.2 -RegistryKey "Maxwell 3D/MPIVendor" -RegistryValue "Intel" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2021.2 -RegistryKey "Q3D Extractor/MPIVendor" -RegistryValue "Intel" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2021.2 -RegistryKey "Icepak/MPIVendor" -RegistryValue "Intel" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2021.2 -RegistryKey "HFSS/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2021.2 -RegistryKey "HFSS 3D Layout Design/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2021.2 -RegistryKey "Maxwell 3D/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2021.2 -RegistryKey "Maxwell 2D/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2021.2 -RegistryKey "Q3D Extractor/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2021.2 -RegistryKey "Icepak/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2021.2 -RegistryKey "Desktop/Settings/ProjectOptions/ProductImprovementOptStatus" -RegistryValue 0 -RegistryLevel install
# ./UpdateRegistry -set -ProductName ElectronicsDesktop2021.2 -RegistryKey "Desktop/Settings/ProjectOptions/AnsysEMPreferredSubnetAddress" -RegistryValue "192.168.1.0/24" -RegistryLevel install
```

4. An example batch script. Create $HOME/anstest/job.sh with the following contents (adjust the site-specific values, such as the node/task counts, paths, and project/design names):

```bash
#!/bin/bash
#SBATCH -N 3 # allocate 3 nodes
#SBATCH -n 12 # 12 tasks total
##SBATCH --exclusive # no other jobs on the nodes while job is running
#SBATCH -J AnsysEMTest # sensible name for the job

# Set job folder, project, and design (design is optional)
JobFolder=$(pwd)
ProjName=OptimTee-DiscreteSweep-FineMesh.aedt
DsnName="TeeModel:Nominal"

# Executable path and SLURM custom integration variables
AppFolder=/opt/AnsysEM/AnsysEM21.2/Linux64

# Set up environment for srun
export ANSYSEM_GENERIC_MPI_WRAPPER=${AppFolder}/schedulers/scripts/utils/slurm_srun_wrapper.sh
export ANSYSEM_COMMON_PREFIX=${AppFolder}/common
export ANSYSEM_TASKS_PER_NODE=${SLURM_TASKS_PER_NODE}

# Set up srun
srun_cmd="srun --overcommit --export=ALL -n 1 --cpu-bind=none --mem-per-cpu=0 --overlap "
# Note: the srun '--overlap' option was introduced in SLURM 20.11; remove it on older versions

# MPI timeout defaults to 30 min (for cloud); 120 or 240 seconds is suggested for on-premises clusters
export MPI_TIMEOUT_SECONDS=120

# System networking environment variables: HPC-system dependent, should not be edited by users!
# export ANSOFT_MPI_INTERCONNECT=ib
# export ANSOFT_MPI_INTERCONNECT_VARIANT=ofed

# Skip dependency check
# export ANS_NODEPCHECK=1

# Autocompute total cores from node allocation
CoreCount=$((SLURM_JOB_NUM_NODES * SLURM_CPUS_ON_NODE))

# Run job
${srun_cmd} ${AppFolder}/ansysedt -ng -monitor -waitforlicense -useelectronicsppe=1 -distributed -auto -machinelist numcores=$CoreCount -batchoptions "" -batchsolve ${DsnName} ${JobFolder}/${ProjName}
```

Then run it:

```
$ dos2unix $HOME/anstest/job.sh
$ chmod +x $HOME/anstest/job.sh
$ sbatch $HOME/anstest/job.sh
```

When complete, send the resulting logs: $HOME/anstest/OptimTee-DiscreteSweep-FineMesh.aedt.batchinfo/*.log

5. If the customer is using Windows-to-Linux submission: ansoftrsmservice cannot run as root. Make sure to set ANSYSEM_GENERIC_EXEC_PATH before starting ansoftrsmservice. In ansoftrsmservice.cfg, the content should be:

```
$begin 'Scheduler'
'SchedulerName'='generic'
'ConfigString'='{"Proxy":"slurm", "Data":""}'
$end 'Scheduler'
```
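The `./UpdateRegistry` commands in step 3 differ only in the product area and key. A minimal sketch that issues the same install-level settings in a loop (the repeated ProductImprovementOptStatus command is sent once), assuming it is run from the `Linux64` install directory as root or the installation owner. The function name and the `PRODUCT` and `UPDATE_REGISTRY` variables are hypothetical conveniences, not Ansys settings; only the `UpdateRegistry` options already shown in the notes are used.

```bash
#!/bin/bash
# Apply the tight-integration registry defaults from the notes in a loop.
# PRODUCT:         value for -ProductName (hypothetical variable; e.g.
#                  ElectronicsDesktop2022.2 for a 2022R2 install)
# UPDATE_REGISTRY: command to run; set it to "echo" for a dry run that only
#                  prints the arguments (hypothetical variable)
apply_registry_defaults() {
  local product="${PRODUCT:-ElectronicsDesktop2021.2}"
  local updater="${UPDATE_REGISTRY:-./UpdateRegistry}"
  local app

  # Same as the ProductImprovementOptStatus command in the notes.
  "$updater" -set -ProductName "$product" \
    -RegistryKey "Desktop/Settings/ProjectOptions/ProductImprovementOptStatus" \
    -RegistryValue 0 -RegistryLevel install || return

  # Intel MPI and scheduler-based remote spawn for each product area;
  # stop at the first command that fails.
  for app in "HFSS" "HFSS 3D Layout Design" "Maxwell 2D" "Maxwell 3D" \
             "Q3D Extractor" "Icepak"; do
    "$updater" -set -ProductName "$product" -RegistryKey "$app/MPIVendor" \
      -RegistryValue "Intel" -RegistryLevel install || return
    "$updater" -set -ProductName "$product" -RegistryKey "$app/RemoteSpawnCommand" \
      -RegistryValue "scheduler" -RegistryLevel install || return
  done
}

# Dry run first, then the real thing (from the Linux64 install directory):
#   UPDATE_REGISTRY=echo apply_registry_defaults
#   apply_registry_defaults
```

The commented-out AnsysEMPreferredSubnetAddress setting is deliberately left out, since the notes treat it as optional and site-specific.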

August 25, 2023 at 2:48 pm

randyk

Forum Moderator

Hi Ziqi,

As indicated, many SLURM integration issues were resolved in the 2022R2 release.

If you are able to migrate to AnsysEM2022R2 or newer, the following guidance should help.

If you get a case with AEDT2022R2 and SLURM 20.x, run through this first.

Important: the customer must be running AEDT 2022R2 and SLURM 20.x for the following steps.

Gather the following to confirm the scheduler version and network info:

```
$ cat /etc/*release
$ sinfo -V
$ ifconfig
```

Enabling tight integration requires setting the batch option 'RemoteSpawnCommand'.

1. Run the following as root or the installation owner to set the defaults to tight integration (this will create/modify //v222/Linux64/config/default.XML):

```bash
cd //v222/Linux64
./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Desktop/Settings/ProjectOptions/ProductImprovementOptStatus" -RegistryValue 0 -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "HFSS/MPIVendor" -RegistryValue "Intel" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "HFSS 3D Layout Design/MPIVendor" -RegistryValue "Intel" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Maxwell 2D/MPIVendor" -RegistryValue "Intel" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Maxwell 3D/MPIVendor" -RegistryValue "Intel" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Q3D Extractor/MPIVendor" -RegistryValue "Intel" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Icepak/MPIVendor" -RegistryValue "Intel" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "HFSS/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "HFSS 3D Layout Design/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Maxwell 3D/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Maxwell 2D/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Q3D Extractor/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Icepak/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install
./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Desktop/Settings/ProjectOptions/ProductImprovementOptStatus" -RegistryValue 0 -RegistryLevel install
# ./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Desktop/Settings/ProjectOptions/AnsysEMPreferredSubnetAddress" -RegistryValue "192.168.1.0/24" -RegistryLevel install
```

2. An example batch script. Create $HOME/anstest/job.sh with the following contents (adjust the site-specific values, such as the node/task counts, paths, and project/design names):

```bash
#!/bin/bash
#SBATCH -N 3 # allocate 3 nodes
#SBATCH -n 12 # 12 tasks total
##SBATCH --exclusive # no other jobs on the nodes while job is running
#SBATCH -J AnsysEMTest # sensible name for the job

# Set job folder, project, and design (design is optional)
JobFolder=$(pwd)
ProjName=OptimTee-DiscreteSweep-FineMesh.aedt
DsnName="TeeModel:Nominal"

# Executable path and SLURM custom integration variables
AppFolder=//AnsysEM/v222/Linux64

# Set up environment for srun
export ANSYSEM_GENERIC_MPI_WRAPPER=${AppFolder}/schedulers/scripts/utils/slurm_srun_wrapper.sh
export ANSYSEM_COMMON_PREFIX=${AppFolder}/common
export ANSYSEM_TASKS_PER_NODE=${SLURM_TASKS_PER_NODE}

# Set up srun
srun_cmd="srun --overcommit --export=ALL -n 1 --cpu-bind=none --mem-per-cpu=0 --overlap "
# Note: the srun '--overlap' option was introduced in SLURM 20.11; remove it on older versions

# MPI timeout defaults to 30 min (for cloud); 120 or 240 seconds is suggested for on-premises clusters
export MPI_TIMEOUT_SECONDS=120

# System networking environment variables: HPC-system dependent, should not be edited by users!
# export ANSOFT_MPI_INTERCONNECT=ib
# export ANSOFT_MPI_INTERCONNECT_VARIANT=ofed

# Skip dependency check
# export ANS_NODEPCHECK=1

# Autocompute total cores from node allocation
CoreCount=$((SLURM_JOB_NUM_NODES * SLURM_CPUS_ON_NODE))

# Copy example project to $JobFolder
cp ${AppFolder}/schedulers/diagnostics/Projects/HFSS/${ProjName} ${JobFolder}/${ProjName}

# Run job
${srun_cmd} ${AppFolder}/ansysedt -ng -monitor -waitforlicense -useelectronicsppe=1 -distributed -auto -machinelist numcores=$CoreCount -batchoptions "" -batchsolve ${DsnName} ${JobFolder}/${ProjName}
```

Then run it:

```
$ dos2unix $HOME/anstest/job.sh
$ chmod +x $HOME/anstest/job.sh
$ sbatch $HOME/anstest/job.sh
```

When complete, send the resulting logs: $HOME/anstest/OptimTee-DiscreteSweep-FineMesh.aedt.batchinfo/*.log

================================================

If the customer is using Windows-to-Linux submission

================================================

1. The ansoftrsmservice cannot run as root. Make sure to set ANSYSEM_GENERIC_EXEC_PATH before starting ansoftrsmservice: edit /path/rsm/Linux64/ansoftrsmservice and add as line 2:

```bash
export ANSYSEM_GENERIC_EXEC_PATH=//v222/Linux64/common/mono/Linux64/bin://v222/Linux64/common/IronPython
```

2. Patch slurm_srun_wrapper.sh. File: //AnsysEM/rsm/Linux64/schedulers/scripts/utils/slurm_srun_wrapper.sh. Replace line 20:

```bash
slurm_host=$(${mono} ${ipy} ${script} --replacehost ${host})
```

with:

```bash
slurm_host=$(echo "${host}" | cut -d'.' -f1)
```

3. In ansoftrsmservice.cfg, the content should be:

```
$begin 'Scheduler'
'SchedulerName'='generic'
'ConfigString'='{"Proxy":"slurm", "Data":""}'
$end 'Scheduler'
```
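Both the 2021R2 wrapper patch (TFS447753) and the Windows-to-Linux wrapper patch reduce the host name to the part before the first dot, presumably so that `srun -w` receives the short node name that SLURM knows the node by. A small sketch of what `cut -d'.' -f1` does, with a pure-shell parameter-expansion equivalent for comparison; the function names and the host name `node0042.cluster.example.org` are hypothetical.

```bash
#!/bin/bash
# Same transformation as the patched line in slurm_srun_wrapper.sh:
# keep only the text before the first '.' of a host name.
short_host() {
  echo "$1" | cut -d'.' -f1
}

# Equivalent using shell parameter expansion (remove the longest '.*' suffix),
# which avoids the pipeline through cut.
short_host_builtin() {
  printf '%s\n' "${1%%.*}"
}

short_host "node0042.cluster.example.org"          # node0042
short_host_builtin "node0042.cluster.example.org"  # node0042
```

A name without any dot passes through unchanged in both versions, since `cut` prints lines lacking the delimiter as-is and the expansion finds nothing to remove.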


- The topic ‘Distribute HFSS tasks on multiple nodes’ is closed to new replies.


© 2026 Copyright ANSYS, Inc. All rights reserved.