-

November 4, 2022 at 4:49 pm

Martin Cuma

Subscriber

Hello,

I am doing user support for our University's HPC center, running Ansys products on our HPC clusters under the SLURM scheduler.

We installed 2022 R2 on Rocky Linux 8.5 (equivalent to CentOS 8.5), and things work alright, except for launching the Mechanical distributed solver from the IDE.

I select Distributed in the Solve menu section, and when I press Solve, it stops at the "creating mathematical model" stage with the following message:

An error occurred while starting the solver module. Please refer to the Troubleshooting section in the Ansys Mechanical Users Guide for more information.

I can't find any more information on the cause of the error, and would appreciate any pointers. For example, is there an environment variable or option that gives more verbose output about what the IDE does prior to the solver launch, and where would the log files be located?

I have verified that the MPI works alright, e.g.:

mpitest222 -np 2

latency = 2.5785 microseconds

...

mpitest222 -mpi openmpi -np 2

latency = 1.1032 microseconds

Also, running Mechanical from the command line works alright, e.g.:

ansys222 -dis -b -np 4 -i input_crankshaft.dat

Thus I am fairly stuck trying to figure out what happens when the IDE launches the solver, e.g. what command it uses, what the error message is, etc. Perhaps there is a verbose option or environment variable that can provide more information?
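For reference, the working command-line run could be wrapped in a SLURM batch script along these lines. This is only a minimal sketch: the job name, resource requests, and output file name are assumptions, and only the `ansys222` flags come from the command above (`-o` is the output-file flag that also appears in the solver launch line later in this thread).

```shell
#!/bin/bash
#SBATCH --job-name=crankshaft     # hypothetical job name
#SBATCH --nodes=1
#SBATCH --ntasks=4
#SBATCH --time=01:00:00           # hypothetical wall time

# Use the task count SLURM granted; fall back to 4 outside a job.
NP="${SLURM_NTASKS:-4}"
INPUT="input_crankshaft.dat"      # input deck from the command above
OUTPUT="${INPUT%.dat}.out"        # assumed output file name

echo "Running: ansys222 -dis -b -np $NP -i $INPUT -o $OUTPUT"

# Only launch if the solver wrapper is actually on PATH.
if command -v ansys222 >/dev/null 2>&1; then
    ansys222 -dis -b -np "$NP" -i "$INPUT" -o "$OUTPUT"
else
    echo "ansys222 not found on PATH; set up the Ansys environment first" >&2
fi
```

Capturing the solver output in a file this way gives something to compare against once the output of the IDE-launched run is located.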

Thanks,

Martin

-

November 4, 2022 at 10:46 pm

Reno Genest

Ansys Employee

Hello Martin,

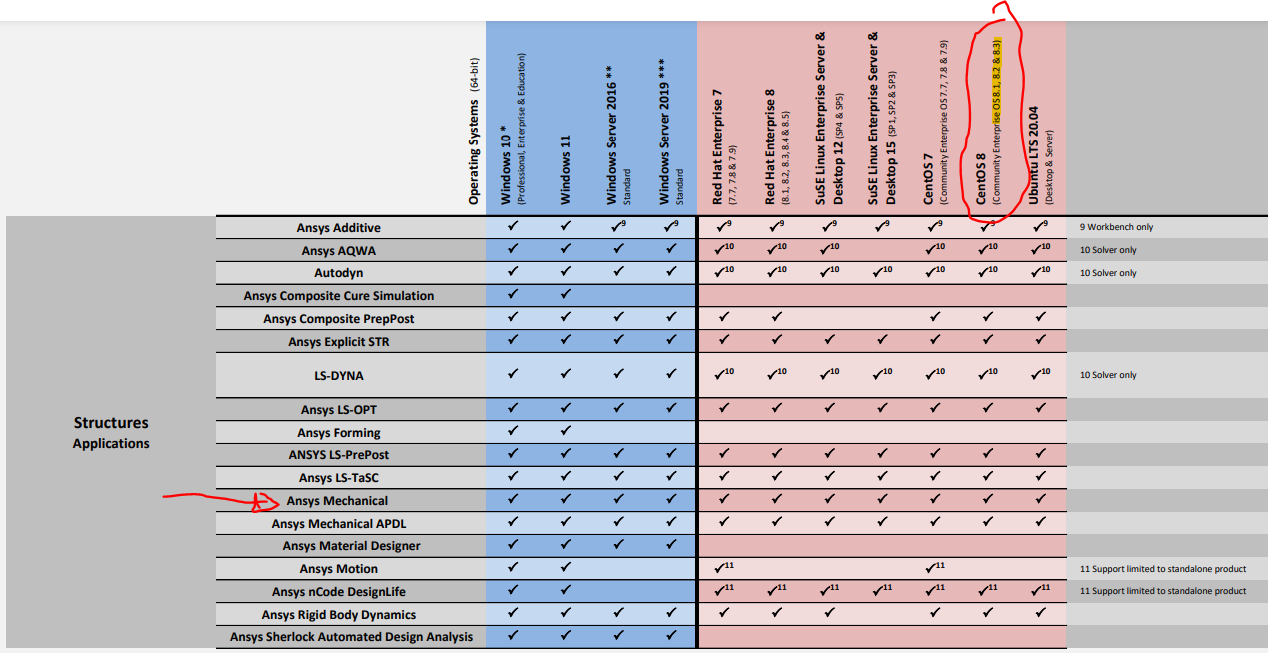

Which version of Ansys are you using? Note that the current version of Ansys (2022 R2) does not support Rocky Linux (any version) or CentOS 8.5. The supported CentOS versions are 8.1, 8.2, and 8.3:

https://www.ansys.com/content/dam/it-solutions/platform-support/ansys-2022r2-platform-support-by-application-product.pdf

This means that Ansys 2022 R2 has not been QA tested on Rocky Linux or CentOS 8.5, which can lead to issues.

Let me check with a colleague to see if we can troubleshoot further.

Reno.

-

November 4, 2022 at 10:53 pm

Martin Cuma

Subscriber

Hi Reno,

Thanks for the reply. We run Ansys 2022 R2, so indeed the Rocky Linux 8.5 that we run is not supported. When I installed Ansys 2022 R2, I modified the installer shell script to accept Rocky 8.5 by simply adding the "rocky" OS ID alongside the "centos" ID. I don't recall having changed any versions, but I do understand that if you have not QA'd on CentOS 8.5 (Rocky 8 should be binary compatible with CentOS 8), then we may be out of luck on 8.5 until you start supporting it.

Are there any plans to support newer CentOS 8 / RHEL 8 releases in a future Ansys release, and when is it scheduled to be released?

Still, I was hoping that there is some verbose mode that I could use to see the issue. I suspect it's something silly that we could fix locally, like incorrect parameters to the MPI launcher.

Thanks,

Martin

-

November 4, 2022 at 11:03 pm

Reno Genest

Ansys Employee

Hello Martin,

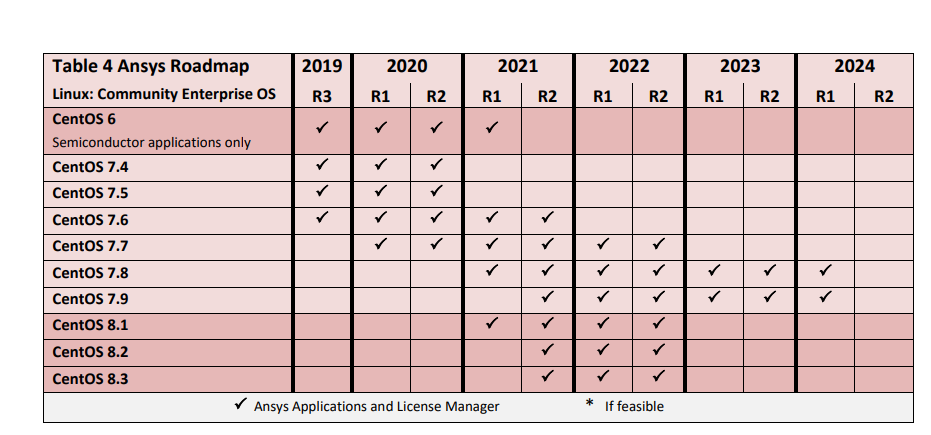

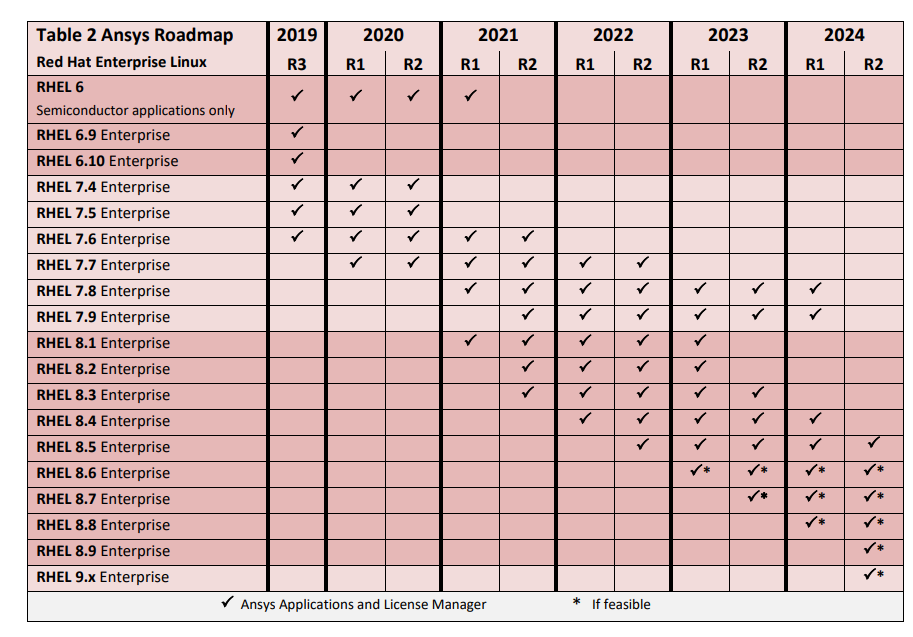

It seems like the plan is to reduce support for CentOS and expand support for Red Hat and SUSE:

https://www.ansys.com/content/dam/it-solutions/platform-support/previous-releases/ansys-platform-support-strategy-plans-august-2022.pdf

If you can run with commands, there might be a way to modify one of the files in the Ansys installation directory to make this work.

Reno.

-

November 4, 2022 at 11:14 pm

Martin Cuma

Subscriber

I see, thanks for sending the roadmap. I see that RHEL 8.5 is supported, so that's likely why I was able to install after hacking "rocky" into the install script. RHEL and Rocky Linux should be binary compatible. As feedback for your roadmap discussions: it may be good to include Rocky Linux in your support in lieu of CentOS, as most of the HPC community that used CentOS is moving to Rocky. It's cost prohibitive for us to license RHEL at this point (we used to run RHEL but moved to CentOS after they started charging per-node license fees).

I can run the commands, so I am curious what I can do in the Ansys installation directory to make this work. I suspect it's a bit like looking for a needle in a haystack, so to help narrow it down: do you know if there's code somewhere in the Ansys Mechanical installation that runs the commands that get executed after one hits the "Solve" button in the Mechanical GUI? That may be a good start.

Thanks.

-

November 4, 2022 at 11:29 pm

Reno Genest

Ansys Employee

Hello Martin,

Thank you for the feedback; we do see more customers trying to run Ansys or LS-DYNA on Rocky Linux.

The question about files to modify would be for our installation support engineers, but because your version of Linux is not supported, they would be reluctant to answer.

I use Ansys on Windows and on my machine, the ANSYS222 executable is located here:

C:\Program Files\ANSYS Inc\v222\ansys\bin\winx64

It should be similar on Linux. There you will find some files that could be modified.

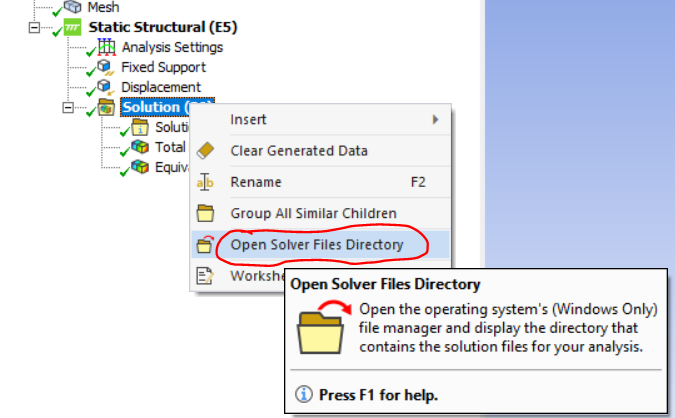

Before you do that, after clicking "Solve" in the Mechanical interface and getting the error message, if you right click on the Solution branch and choose "Open Solver Files Directory", do you see anything in that directory that could help us troubleshoot the problem? There might be a file with a meaningful error message.

Reno.

-

November 7, 2022 at 5:08 pm

Martin Cuma

Subscriber

Hi Reno,

Thanks for your help. I tried clicking "Open Solver Files Directory", but that option only works on Windows; there's no response on Linux (and the User's Guide says so too). I agree that it would be very helpful to know where the solver files are; I have spent some time looking for them without success. If you or one of your colleagues has a rough idea where they would be, it'd be very helpful. Perhaps this is already documented somewhere and I just can't find it?

For example, if my project is in

/scratch/general/vast/u0101881/ansys/fatigue_steel_HPC2_files

I have the following:

$ ls

dp0 dpall session_files user_files

It looks like the bulk of the files is in the dp0 directory, but it'd be helpful to see a document that explains the contents of this directory.

Thanks,

Martin

-

November 7, 2022 at 5:20 pm

Reno Genest

Ansys Employee

Hello Martin,

I don't have Ansys installed on Linux, but you seem to be on the right track. On Windows, the solver files are in dp0\SYS\MECH.

You will find more information in the help:

https://ansyshelp.ansys.com/account/secured?returnurl=/Views/Secured/corp/v222/en/wb2_help/wb2h_projfiledir.html
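On Linux, one way to look for the equivalent location is to search the project's dp0 directory directly. The following is a minimal sketch, not a documented procedure: the function name is hypothetical, and the file patterns are assumptions (a `solve.out` output file, which is the name used in the solver launch line later in this thread, and `*.err` error files).

```shell
# Print candidate solver output files under a Workbench project directory.
# $1 is the project "_files" directory that contains dp0.
find_solver_files() {
    project_dir="$1"
    # The patterns are assumptions; adjust them to what the solver writes.
    find "$project_dir/dp0" -type f \
        \( -name 'solve.out' -o -name '*.err' \) 2>/dev/null
}
```

For the project mentioned above, this would be called as `find_solver_files /scratch/general/vast/u0101881/ansys/fatigue_steel_HPC2_files`.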

Reno.

-

November 8, 2022 at 5:33 pm

Martin Cuma

Subscriber

Hi Reno,

I am making some progress, thanks to having found the solver output files. I'll update that in a minute, but, first, one of the models one user simulates seems to have some inconsistencies so it prints a lot of warnings, and does not print the actual crash error message since:

The number of ERROR and WARNING messages exceeds 10000.

Use the /NERR command to increase the number of messages.

The ANSYS run is terminated by this error.

After raising the /NERR limit, I get:

The unconverged solution (identified as time 15 substep 999999) is

output for analysis debug purposes. Results should not be used for

any other purpose.

So, that's telling me that the user needs to modify their model to improve the convergence, right?
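Before raising the /NERR limit, it can help to see how many warnings and errors the solver output actually contains. A minimal sketch, assuming the messages in the output file are flagged with `*** WARNING ***` and `*** ERROR ***` markers; the function name is hypothetical.

```shell
# Count warning and error markers in a solver output file.
# $1 is the path to the output file (for example solve.out).
count_messages() {
    out_file="$1"
    # grep -c prints 0 but exits non-zero when nothing matches,
    # so "|| true" keeps the function safe under "set -e".
    warnings=$(grep -cF '*** WARNING ***' "$out_file" || true)
    errors=$(grep -cF '*** ERROR ***' "$out_file" || true)
    echo "warnings=$warnings errors=$errors"
}
```

A very large warning count with few errors would suggest that the warnings are hiding the real failure, which matches what happened here.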

Thanks,

Martin

-

November 8, 2022 at 5:40 pm

Martin Cuma

Subscriber

And here's the promised further information on the crashes, now with a model that I know converges (the "Crankshaft" example that I got from Rescale, but I figure it's one of your examples).

I get the following error when I run on a node with AMD Zen1 CPUs:

OMP: Error #100: Fatal system error detected.

OMP: System error #22: Invalid argument

forrtl: error (76): Abort trap signal

Image PC Routine Line Source

libifcoremt.so.5 00007F14872F1555 for__signal_handl Unknown Unknown

libpthread-2.28.s 00007F144EDD9CE0 Unknown Unknown Unknown

libc-2.28.so 00007F144C5C0A4F gsignal Unknown Unknown

libc-2.28.so 00007F144C593DB5 abort Unknown Unknown

libiomp5.so 00007F1484DA4B23 Unknown Unknown Unknown

libiomp5.so 00007F1484D8FD17 Unknown Unknown Unknown

libiomp5.so 00007F1484D310A8 Unknown Unknown Unknown

libiomp5.so 00007F1484DE5E57 Unknown Unknown Unknown

libiomp5.so 00007F1484D2962D Unknown Unknown Unknown

libiomp5.so 00007F1484D1F119 Unknown Unknown Unknown

libiomp5.so 00007F1484D1E68B Unknown Unknown Unknown

libiomp5.so 00007F1484DA3B1F Unknown Unknown Unknown

libiomp5.so 00007F1484D8698E omp_get_num_procs Unknown Unknown

libansOpenMP.so 00007F146B886CEC ppinit_ Unknown Unknown

libansys.so 00007F147287D7EC smpstart_ Unknown Unknown

ansys.e 00000000004113F0 Unknown Unknown Unknown

ansys.e 000000000040EE28 MAIN__ Unknown Unknown

ansys.e 000000000040ED22 main Unknown Unknown

libc-2.28.so 00007F144C5ACCA3 __libc_start_main Unknown Unknown

ansys.e 000000000040EC39 Unknown Unknown Unknown

/uufs/chpc.utah.edu/sys/installdir/ansys/22.2/v222/ansys/bin/ansysdis222: line 77: 2638867 Aborted (core dumped) /uufs/chpc.utah.edu/sys/installdir/ansys/22.2/v222/ansys/bin/linx64/ansys.e -b nolist -s noread -i "dummy.dat" -o "solve.out" -dis -p ansys

Sounds like an issue with the Intel OpenMP library, but since it only occurs with the distributed solver, not the shared memory one, I suspect it may be due to some interaction with the Intel MPI that drives the distributed run. We have had quite a few issues with Intel MPI on Rocky 8: some versions work and some don't, and in the versions that work we need to set FI_FABRICS=verbs explicitly.

I am wondering if there's a way to tell the Mechanical IDE to use OpenMPI instead of Intel MPI. I know there's a command line option for that but I can't find if the IDE can set it.

The same example runs fine on nodes with Intel CPUs.

So, I think we are more or less good. I'll instruct the user to stay on the Intel nodes and to fix their model to improve convergence, and I reiterate my encouragement to support Rocky Linux in future Ansys releases, focusing on both Intel and AMD CPUs.
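To keep jobs off the AMD nodes, a job script could check the CPU vendor before launching the distributed solver. A minimal sketch, assuming a Linux `/proc/cpuinfo` with a `vendor_id` field; the function names are hypothetical, and the optional file argument exists only so the check can be tried against a saved copy of cpuinfo.

```shell
# Print the CPU vendor string of the current node.
cpu_vendor() {
    cpuinfo="${1:-/proc/cpuinfo}"
    # vendor_id is "GenuineIntel" on Intel and "AuthenticAMD" on AMD CPUs.
    awk -F': ' '/^vendor_id/ { print $2; exit }' "$cpuinfo" 2>/dev/null
}

# Return 0 on Intel nodes and non-zero anywhere else.
check_node() {
    case "$(cpu_vendor "$1")" in
        GenuineIntel) return 0 ;;
        AuthenticAMD) echo "AMD node: distributed solver crashed here" >&2
                      return 1 ;;
        *)            echo "Unknown CPU vendor" >&2
                      return 1 ;;
    esac
}
```

In a batch script this could be used as `check_node || exit 1` before the solver launch.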

-

November 8, 2022 at 5:52 pm

Reno Genest

Ansys Employee

Hello Martin,

The Ansys installation does not come with OpenMPI, so trying to run OpenMPI from inside the Mechanical GUI may be difficult. I recommend running Intel MPI. If you want to run Open MPI, please run outside the Ansys installation directory. Use LS-DYNA command lines in the Linux terminal and download the appropriate LS-DYNA solvers:

LSDYNA » Downloader (ansys.com)

username: user

password: computer

Here are the commands I use on Linux to run LS-DYNA:

SMP:

/data2/rgenest/lsdyna/SMP_DEV_dp/lsdyna_d_sse2_linux86_64 i=/data2/rgenest/runs/Test/input.k ncpu=-4 memory=20m

MPP Intel MPI:

/data2/rgenest/intel/oneapi/mpi/2021.2.0/bin/mpiexec -np 4 /data2/rgenest/lsdyna/MPP_DEV_IMPI_dp/mppdyna_d_sse2_linux86_64_intelmmpi i=/data2/rgenest/runs/Test/input.k memory=20m

MPP Platform MPI:

/data2/rgenest/bin/ibm/platform_mpi/bin/mpirun -np 4 /data2/rgenest/lsdyna/MPP_DEV_PMPI_dp/mppdyna_d_sse2_linux86_64_platformmpi i=/data2/rgenest/runs/Test/input.k memory=20m

MPP Open MPI:

/opt/openmpi-4.0.0/bin/mpirun -np 4 /data2/rgenest/lsdyna/ls-dyna_mpp_d_R13_0_0_x64_centos610_ifort190_sse2_openmpi4/ls-dyna_mpp_d_R13_0_0_x64_centos610_ifort190_sse2_openmpi4.0.0 i=/data2/rgenest/runs/Test/input.k memory=20m

Note that I point to the specific path of the appropriate mpirun or mpiexec file to avoid confusion when many MPIs are installed on the machine.
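To see which launchers a bare `mpirun` or `mpiexec` could resolve to on a given machine, the PATH can be scanned in search order. A minimal sketch for a POSIX shell; the function name is hypothetical.

```shell
# List every mpirun and mpiexec found on PATH, in search order.
list_launchers() {
    for name in mpirun mpiexec; do
        old_ifs=$IFS
        IFS=:                      # split PATH on colons
        for dir in $PATH; do
            [ -x "$dir/$name" ] && echo "$dir/$name"
        done
        IFS=$old_ifs
    done
}
```

The first line printed for each name is the one a bare command would run; any further lines are other installations that the full path avoids picking up by accident.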

You could also use LS-RUN to run LS-DYNA on Linux and keep these commands in memory for easy use:

Index of /anonymous/outgoing/lsprepost/LS-Run (lstc.com)

Here is a video tutorial:

https://www.youtube.com/watch?v=ymkTJCefm30

Reno.

-

November 8, 2022 at 5:53 pm

Reno Genest

Ansys Employee

Hello Martin,

If you have further questions about implicit LS-DYNA, please start a new thread as the topic is different.

Thanks.

Reno.

-

- The topic ‘Ansys Mechanical distributed solver does not start from the IDE.’ is closed to new replies.


© 2026 Copyright ANSYS, Inc. All rights reserved.