TAGGED: #fluent-#ansys, ansys-running-slow, hpc-cluster

-

October 28, 2022 at 7:37 pm

mkhademi

Subscriber

Hello,

I have a question about how to speed up solutions on our campus HPC cluster. I ran several Fluent cases on my own workstation with 48 CPU cores and then transferred them to the HPC. Running the same cases with 128 CPU cores on the HPC is even slower than on my workstation. I contacted our administrator, who put forward a possible explanation:

"Fluent is a vendor supplied binary; like most proprietary software packages it is not compiled locally and is given to us basically as a "black box". It uses MPI for parallelization, but relies on its own MPI libraries shipped with the rest of the package (in general, MPI enabled packages want to run with the same MPI used for compilation). We have found that a number of proprietary packages appear to have been compiled with Intel compilers, and therefore (as the Intel compilers only optimize well for Intel CPUs, and are suspected of not optimizing well at all for AMD processors) do not perform well on AMD based clusters like the one on campus. But as we do not control the compilation of the package, there is little we can do about it."

I am also using OpenMPI for parallelization on the HPC. Do you think the AMD-based cluster could be the main reason for this issue? Can you suggest some general guidelines? For example, when preparing the case and data files, should I create them with the same version of Ansys as the one on the cluster? Does it make any difference if I have Fluent read the mesh on the cluster, build the case there, and run it, instead of preparing it on a Windows PC?

Thank you so much,

Mahdi.

-

October 31, 2022 at 11:43 am

Rob

Forum Moderator

How many cells are in the model? Are the cores all "real" as opposed to virtual, and is the data transfer "stuff" up to the task of passing all of the data? We've seen some hardware where running on around half of the cores gave far better performance as the data handling was lacking.

-

March 7, 2023 at 12:49 am

mkhademi

Subscriber

Hi Rob,

Sorry for getting back to this question so late, and I appreciate your response. I gave up on Fluent on the HPC because the runs remained very slow, and I don't know whether the cores on the HPC are virtual or real. Now I am going to use Rocky DEM on the cluster coupled with Fluent, so I think I should talk to the IT staff again about Fluent running slowly. I will ask them about your question.

The Ansys version on the cluster is 21.2, the Linux system is Red Hat, and I have to use OpenMPI for parallel runs. Do you think switching to 23.1 would solve the issue? Does it matter which version of OpenMPI I use? Is it better to use the OpenMPI distributed with the Fluent package? And lastly, how can I get some diagnostic data while running the simulations that would help you identify any problem? I read somewhere that "show affinity" may help with that.

Thank you so much.

-

March 7, 2023 at 7:40 am

mkhademi

Subscriber

Also, do you think it is important to load a specific version of OpenMPI with Ansys 21.2? There are multiple versions on the cluster.

-

March 7, 2023 at 12:06 pm

Rob

Forum Moderator

If you're running coupled Fluent and Rocky you'll benefit from 2023R1, as the coupling was new in 2021R2 and has since been improved. You also want to check CPU load when running Fluent; you've not mentioned cell count, or what the operating system is.

No idea about the mpi settings - I rely on our system being set up. I'll get one of the install group to comment.

-

March 7, 2023 at 1:51 pm

MangeshANSYS

Ansys Employee

Hello,

1. Were you able to try Ansys 2023 R1? Is the performance gap still seen when running on these two different hardware setups?

If you are still seeing differences with 2023 R1:

2. What is the processor make and model on your workstation, and how many cores do you use? What is the processor make and model on the cluster, and how many cores are used when running on the cluster?

3. Can you please run a few iterations on the workstation and also on the cluster, and then post the portions of the two transcripts showing the slowdown? Additionally, you can use these commands in the Fluent GUI to generate information on the hardware: (proc-stats), (sys-stats), (show-affinity). You can test these commands by running a simple test on your workstation. When running Fluent in batch on the cluster, you may need to use the corresponding TUI commands or a journal file.

4. Can you please also find out more information on the cluster from the cluster resources or the cluster administrator: exact operating system version and patch level, recommended interconnect, the file system to be used for HPC and whether you are using the recommended file system, the scheduler used, etc.

-

March 7, 2023 at 11:04 pm

mkhademi

Subscriber

Thank you so much for your replies, Rob and Mangesh,

I got some information from our IT administration. Here is the information they provided:

1) The cluster is running RHEL 8.6. The nodes have dual AMD EPYC 7763 64-Core Processor with 512 GiB of RAM

2) The compute nodes have HDR100 Infiniband interconnects

3) The scratch filesystem is BeeGFS 7.3.0

4) Scheduler is Slurm 22.05.07

I ran a simulation on both my laptop and the cluster, using 8 cores on each. Following your instructions, I collected this information after running a common unsteady case for 100 iterations:

The cluster gave me this information:

> (proc-stats)

------------------------------------------------------------------------------

| Virtual Mem Usage (GB) | Resident Mem Usage(GB) |

ID | Current Peak | Current Peak | Page Faults

------------------------------------------------------------------------------

host | 0.591854 0.592873 | 0.174324 0.174324 | 591

n0 | 0.818371 0.826019 | 0.175053 0.182316 | 259

n1 | 0.814728 0.82201 | 0.167213 0.173817 | 214

n2 | 0.815907 0.822296 | 0.165989 0.171776 | 255

n3 | 0.81435 0.821392 | 0.168133 0.174389 | 175

n4 | 0.814678 0.82206 | 0.165474 0.172218 | 168

n5 | 0.814739 0.822018 | 0.164593 0.171059 | 252

n6 | 0.814575 0.821838 | 0.165981 0.172546 | 253

n7 | 0.815868 0.822704 | 0.164452 0.1703 | 258

------------------------------------------------------------------------------

Total | 7.11507 7.17321 | 1.51121 1.56274 | 2425

------------------------------------------------------------------------------

------------------------------------------------------------------------------------------------

| Virtual Mem Usage (GB) | Resident Mem Usage(GB) | System Mem (GB)

Hostname | Current Peak | Current Peak |

------------------------------------------------------------------------------------------------

compute-b6-21.zarata | 7.11507 7.17321 | 1.51121 1.56274 | 503.141

------------------------------------------------------------------------------------------------

Total | 7.11507 7.17321 | 1.51121 1.56274 |

------------------------------------------------------------------------------------------------

()

> (sys-stats)

---------------------------------------------------------------------------------------

| CPU | System Mem (GB)

Hostname | Sock x Core x HT Clock (MHz) Load | Total Available

---------------------------------------------------------------------------------------

compute-b6-21.zarata | 2 x 64 x 1 0 58.77 | 503.141 362.15

---------------------------------------------------------------------------------------

Total | 128 - - | 503.141 362.15

---------------------------------------------------------------------------------------

()

> (show-affinity)

999999: 0 22 23 24 72 73 74 75

0: 0

1: 22 23 24

2: 72 73 74 75

3: 22 23 24

4: 22 23 24

5: 72 73 74 75

6: 72 73 74 75

7: 72 73 74 75

999999: 0 22 23 24 72 73 74 75

0: 0 22 23 24

1: 0 22 23 24

2: 0 22 23 24

3: 0 22 23 24

4: 72 73 74 75

5: 72 73 74 75

6: 72 73 74 75

7: 72 73 74 75

999999: 0 22 23 24 72 73 74 75

0: 0 22 23 24

1: 0 22 23 24

2: 0 22 23 24

3: 0 22 23 24

4: 72 73 74 75

5: 72 73 74 75

6: 72 73 74 75

7: 72 73 74 75

The laptop gave me this information for the same case, with 8 cores and the same number of iterations:

> (proc-stats)

----------------------------------------------

| Virtual Mem Usage (GB)|

ID | Current Peak | Page Faults

----------------------------------------------

host | 0.112896 0.144833 | 7.166e+04

n0 | 0.0862007 0.153492 | 2.659e+06

n1 | 0.0807686 0.134022 | 1.793e+06

n2 | 0.0791664 0.135555 | 3.148e+06

n3 | 0.0825119 0.134621 | 2.002e+06

n4 | 0.0780334 0.134251 | 3.438e+06

n5 | 0.0720711 0.130085 | 1.969e+06

n6 | 0.0833321 0.135273 | 1.328e+06

n7 | 0.0814285 0.135128 | 1.962e+06

----------------------------------------------

Total | 0.756409 1.23726 | 1.837e+07

----------------------------------------------

-----------------------------------------------------------------

| Virtual Mem Usage (GB) | System Mem (GB)

Hostname | Current Peak |

-----------------------------------------------------------------

DESKTOP-C6GP8UV | 0.75642 1.23726 | 15.7449

-----------------------------------------------------------------

Total | 0.75642 1.23726 |

-----------------------------------------------------------------

()

> (sys-stats)

---------------------------------------------------------------------------------------

| CPU | System Mem (GB)

Hostname | Sock x Core x HT Clock (MHz) Load (%)| Total Available

---------------------------------------------------------------------------------------

DESKTOP-C6GP8UV | 1 x 8 x 2 2304 14.8064| 15.7449 3.93291

---------------------------------------------------------------------------------------

Total | 16 - - | 15.7449 3.93291

---------------------------------------------------------------------------------------

()

> (show-affinity)

999999: 0,1,2,3,4,5,6,7,8,9,10,11,12,13,14,15

0: 0,1,2,3,4,5,6,7,8,9,10,11,12,13,14,15

1: 0,1,2,3,4,5,6,7,8,9,10,11,12,13,14,15

2: 0,1,2,3,4,5,6,7,8,9,10,11,12,13,14,15

3: 0,1,2,3,4,5,6,7,8,9,10,11,12,13,14,15

4: 0,1,2,3,4,5,6,7,8,9,10,11,12,13,14,15

5: 0,1,2,3,4,5,6,7,8,9,10,11,12,13,14,15

6: 0,1,2,3,4,5,6,7,8,9,10,11,12,13,14,15

7: 0,1,2,3,4,5,6,7,8,9,10,11,12,13,14,15

()
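
For anyone comparing the two (show-affinity) listings above, here is a minimal Python sketch that groups ranks by the set of logical CPUs they are allowed to run on. It assumes each line has the form `rank: cpu cpu ...` (space- or comma-separated, as in the two outputs) and that ID 999999 is the host process; both are assumptions read off the output, not documented Fluent behaviour. The function names are made up for illustration.

```python
from collections import defaultdict


def parse_affinity(text):
    """Map each rank ID to the set of logical CPUs it may run on."""
    ranks = {}
    for line in text.strip().splitlines():
        rank, _, cpus = line.partition(":")
        # Accept both separators seen in the thread: spaces (cluster) and commas (laptop).
        ranks[int(rank)] = {int(c) for c in cpus.replace(",", " ").split()}
    return ranks


def summarize(ranks, host_id=999999):
    """Group compute ranks by identical CPU sets and count the distinct CPUs used."""
    groups = defaultdict(list)
    for rank, cpus in sorted(ranks.items()):
        if rank != host_id:  # assumption: 999999 is the host process, not a compute rank
            groups[tuple(sorted(cpus))].append(rank)
    distinct = set().union(*groups) if groups else set()
    return dict(groups), len(distinct)


# Second (show-affinity) block from the cluster output quoted above.
cluster = """
999999: 0 22 23 24 72 73 74 75
0: 0 22 23 24
1: 0 22 23 24
2: 0 22 23 24
3: 0 22 23 24
4: 72 73 74 75
5: 72 73 74 75
6: 72 73 74 75
7: 72 73 74 75
"""

groups, n_cpus = summarize(parse_affinity(cluster))
for cpus, members in groups.items():
    print(f"ranks {members} share CPUs {list(cpus)}")
print(f"{n_cpus} distinct CPUs for {sum(len(m) for m in groups.values())} ranks")
```

On the cluster listing this reports two groups of four ranks, each confined to four logical CPUs, whereas on the laptop every rank may use all 16. The summary only makes the difference in placement visible; it does not by itself show that placement is the cause of the slowdown.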

-

April 9, 2023 at 6:44 am

kevin1986

Subscriber

We also have EPYC workstations and we found a similar, though not identical, issue.

Actually, we are a vendor that builds clusters. On our cluster (CentOS) with AMD EPYC CPUs, we tested Fluent 2022 and it scales almost perfectly linearly: 10 nodes, 10 times faster. So, back to your topic, I don't think it's a problem with Fluent.

On the other hand, we are also facing a similar problem: Fluent 22 is slower than 20, but it only happens on Windows systems.

/forum/forums/topic/new-version-fluent-is-slower-than-2020r2/

Do you have any updates for your issue?

-

April 12, 2023 at 9:53 pm

mkhademi

Subscriber

Hi Kevin,

Our HPC admin installed Ansys 23R1, and now it seems to me to be faster. Still, I don't know how to compare the speed.

-

May 4, 2023 at 7:33 am

kevin1986

Subscriber

> Still, I don't know how to compare the speed.

At least for this question, what we typically do is test the scaling performance. You can simply try running a case on 1 node and on N nodes. If N nodes is N times faster, everything is fine. But be sure to use a relatively large mesh (say, more than 3 million cells).
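
The scaling check described above can be written down as a small Python sketch. It takes wall-clock times for the same case and the same number of iterations at several node counts, and reports speedup and parallel efficiency relative to the smallest run. The timings in the example are hypothetical placeholders, not measurements from this thread.

```python
def scaling_report(timings):
    """timings: {node_count: wall-clock seconds for the same case and iteration count}.

    Returns a list of (nodes, speedup, efficiency) relative to the smallest node count.
    """
    base_nodes = min(timings)
    base_time = timings[base_nodes]
    report = []
    for nodes in sorted(timings):
        speedup = base_time / timings[nodes]
        ideal = nodes / base_nodes  # "N nodes is N times faster"
        report.append((nodes, speedup, speedup / ideal))
    return report


# Hypothetical timings in seconds; replace with your own measurements.
timings = {1: 1200.0, 2: 620.0, 4: 330.0}
for nodes, speedup, efficiency in scaling_report(timings):
    print(f"{nodes} node(s): speedup {speedup:.2f}x, efficiency {efficiency:.0%}")
```

An efficiency close to 100% at every node count corresponds to the "N nodes is N times faster" case; a sharp drop as nodes are added suggests looking at the interconnect, the file system, or process placement.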

-

-

April 13, 2023 at 3:08 pm

MangeshANSYS

Ansys Employee

Hello. Thank you for the processor information. Please see this document on the processor manufacturer's site, and compare the BIOS and other settings that were used:

https://www.amd.com/system/files/documents/ansys-fluent-performance-amd-epyc7003-series-processors.pdf

-

April 11, 2023 at 10:42 am

Rob

Forum Moderator

It's been a holiday weekend in Europe and (I think) the US. There may be a slight delay.

-

April 13, 2023 at 8:17 am

Rob

Forum Moderator

In the parallel menu in Fluent you'll find some statistics options. They will also give you some calculation speed & network performance.

-

May 3, 2023 at 8:45 pm

ryan.careno

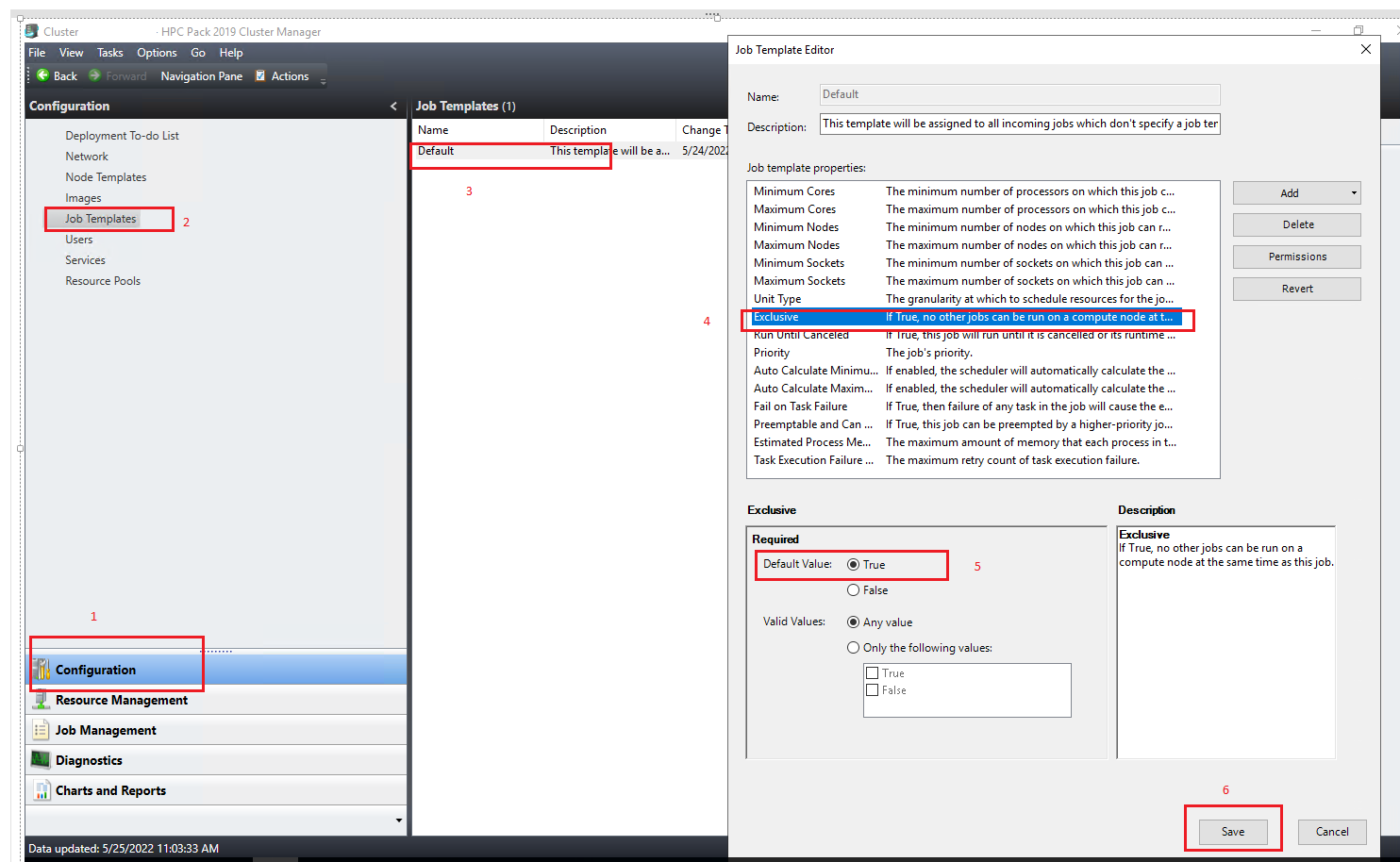

SubscriberCheck if the “exclusive” flag in the HPC Pack 2019 job template is set to “true”. We were seeing the same thing running on MS HPC pack with MS-MPI, and noticed about 30% boost in performance.

To check/set, you need administrative access to HPC Pack Cluster Manager (or reach out to whomever manages the HPC Cluster). The setting is as shown:We have a 256 core cluster at the moment, and mostly run 128 core jobs. The jobs were slower previously when this was set to false. Say you have a 32-core server in your cluster, and you request to run a job at 24-cores, this just means nobody else can use the remaining 8 cores not being used on that server, but users can still use unused servers in the cluster. This is at least my understanding of it.

-

May 4, 2023 at 9:20 am

Rob

Forum Moderator

Ryan, that's correct. Running exclusive is often useful as it prevents a user using all the RAM with (for example) 4 cores then someone else jumps onto the other 28 (or whatever) and everything slows down.

Disciplined users are also useful: we launch jobs to fill a box, so multiples of 28 or 32 to avoid wasting cpu with a 36 core task. We may also run 4-10 core tasks but that "wastes" the remaining cores.
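
As a rough illustration of the "fill a box" arithmetic above, this Python sketch computes how many whole nodes a job occupies under exclusive allocation and how many cores are left idle. The function name is made up for the example, and it assumes all nodes have the same core count.

```python
import math


def node_fill(requested_cores, cores_per_node):
    """Return (nodes occupied, idle cores) when whole nodes are reserved for one job."""
    nodes = math.ceil(requested_cores / cores_per_node)
    idle = nodes * cores_per_node - requested_cores
    return nodes, idle


# (requested cores, cores per node): the 24-on-32 and 36-on-32 cases come from the posts above.
for requested, per_node in [(24, 32), (36, 32), (64, 32), (128, 128)]:
    nodes, idle = node_fill(requested, per_node)
    print(f"{requested} cores on {per_node}-core nodes: {nodes} node(s), {idle} idle core(s)")
```

Requesting a multiple of the per-node core count leaves no idle cores, which is the point of launching jobs in multiples of 28 or 32 on machines of that size.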

-

May 4, 2023 at 11:16 pm

mkhademi

Subscriber

Thank you Ryan for your help. It is very helpful. I will ask our HPC staff about this.

Thank you Rob for commenting.

-

- The topic ‘Ansys Fluent running slow on HPC’ is closed to new replies.


© 2025 Copyright ANSYS, Inc. All rights reserved.