TAGGED: gpu-acceleration

-

December 10, 2024 at 1:51 pm

limy

Subscriber

The A6000 is listed as verified only for Fluent and Speos in the official GPU Compute Capabilities Release 2024R2 document. Is it also supported in Lumerical?

-

December 10, 2024 at 11:23 pm

Lito

Ansys Employee

@limy,

You should be able to run on NVIDIA GPUs that support CUDA drivers 452.39 or later. See the KB article "Getting started with running FDTD on GPU" (Ansys Optics) for the full requirements and limitations when running FDTD on GPU.
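As a quick way to check the driver requirement mentioned above, here is a minimal Python sketch. It is not part of Lumerical: the helper names (`parse_driver_version`, `driver_is_supported`, `query_driver_version`) are hypothetical, and it assumes `nvidia-smi` is installed and on the PATH. It also assumes the 452.39 figure can be compared directly against the version string that `nvidia-smi` reports, so check the KB for the exact minimum on your platform.

```python
import subprocess

# Minimum driver version quoted in this thread (assumption: it applies to your OS).
MIN_DRIVER = (452, 39)


def parse_driver_version(text):
    """Turn a version string such as '452.39' or '535.104.05' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


def driver_is_supported(version_text, minimum=MIN_DRIVER):
    """Return True if the reported driver version is at least the minimum."""
    return parse_driver_version(version_text) >= minimum


def query_driver_version():
    """Ask nvidia-smi for the driver version of the first GPU it lists."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.splitlines()[0].strip()
```

On a machine with an NVIDIA driver installed, `driver_is_supported(query_driver_version())` returns whether the installed driver meets the minimum.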

Hope this helps.

Lito

-

December 14, 2024 at 12:15 pm

limy

Subscriber

I got it. I have another question. When I try to run a GPU-accelerated Lumerical FDTD simulation, it seems that the simulation's memory requirement must be smaller than the 16 GB of GPU memory. However, I found that unified memory lets the GPU access more memory. Does this mean the simulation's memory requirement can exceed 16 GB? If so, how can I achieve that? My computer has 256 GB of DDR4 memory.

-

December 16, 2024 at 7:36 pm

Lito

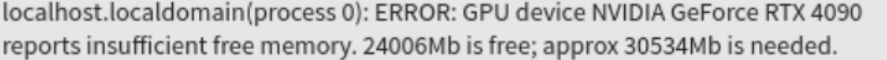

Ansys Employee

When running on GPU, the simulation uses the GPU's memory, not the system memory. According to the error message, your simulation requires around 30 GB of vDRAM, but some of the GPU memory is already in use and only 24 GB is available to run the simulation. Please check the memory requirements of your simulation.

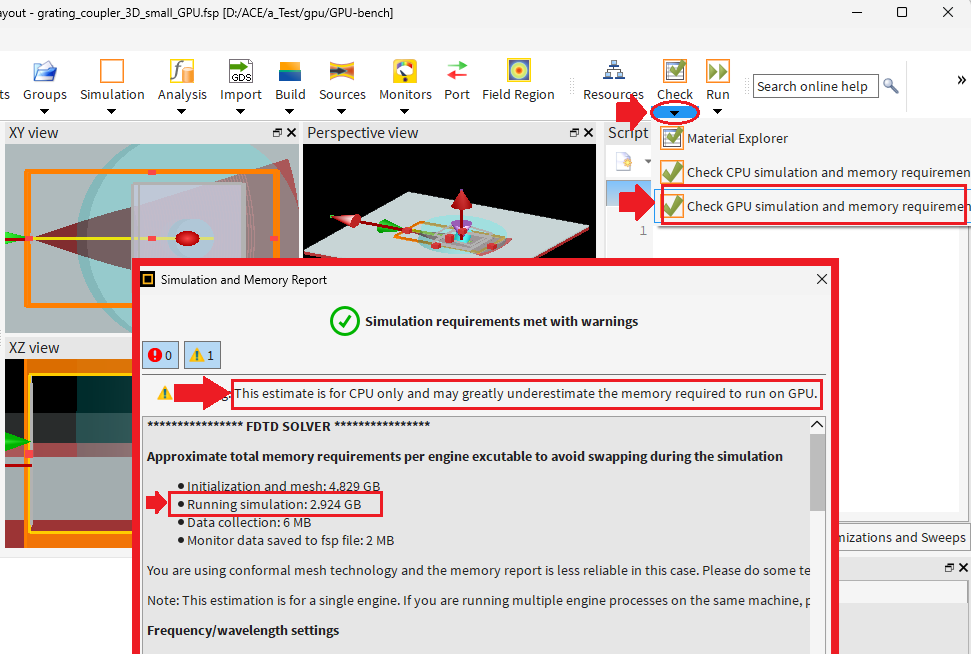

Example: "Check GPU simulation memory requirements" reports that more than 4 GB of GPU vDRAM is required to run the simulation.
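The comparison described above (required GPU memory versus the memory currently free on the card) can also be checked from outside Lumerical. The following is a minimal Python sketch under stated assumptions: the helper names are hypothetical, `nvidia-smi` must be on the PATH, and the requirement reported by the memory check is treated as GiB, which is only approximately equal to the GB figure in the error message.

```python
import subprocess


def parse_memory_csv(text):
    """Parse 'total, used, free' lines (values in MiB) into one dict per GPU."""
    gpus = []
    for line in text.strip().splitlines():
        total, used, free = (int(field) for field in line.split(","))
        gpus.append({"total_mib": total, "used_mib": used, "free_mib": free})
    return gpus


def fits_on_gpu(required_gib, gpu):
    """Return True if the required memory fits in the GPU's currently free memory."""
    return required_gib * 1024 <= gpu["free_mib"]


def query_gpu_memory():
    """Query total, used, and free memory for every GPU that nvidia-smi lists."""
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=memory.total,memory.used,memory.free",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_memory_csv(result.stdout)


# Illustrative numbers only (not taken from the poster's machine):
# a card with 24 GiB currently free cannot hold a 30 GiB simulation.
sample = parse_memory_csv("49140, 24564, 24576")[0]
print(fits_on_gpu(30, sample))
print(fits_on_gpu(20, sample))
```

On a real system, replace the sample string with `query_gpu_memory()` and pass the requirement reported by the simulation's memory check. Closing other applications that hold GPU memory raises the free figure.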


© 2025 Copyright ANSYS, Inc. All rights reserved.