TAGGED: frequency-sweep, mpi-with-slurm

October 17, 2023 at 6:07 pm

Ehsan Hafezi

Subscriber

Hi

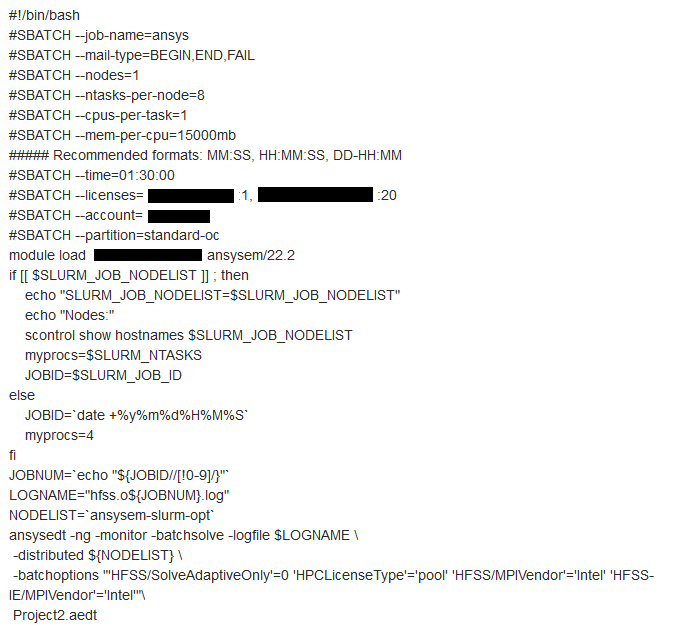

I'm using ansysem/22 on SLURM for a simulation with an adaptive solver and a discrete frequency sweep (8 data points). The sbatch file is at the end of this post; in summary, I'm using 1 node, 8 tasks per node, 1 core per task, and 15 GB of RAM per core. I also set MPIVendor to Intel in the batch options.

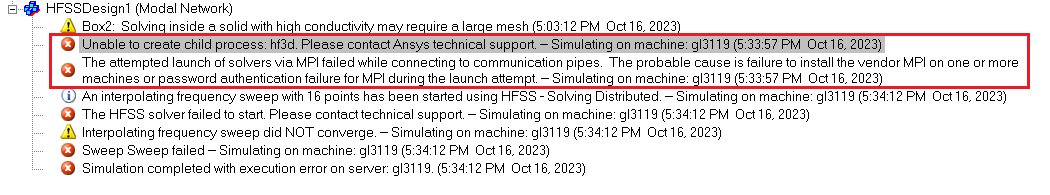

With all this, I get the following error: "The attempted launch of solvers via MPI failed while connecting to communication pipes" (the complete error screenshot is attached). I've looked online (including similar posts on the Intel forum and here) and haven't been able to solve the problem so far. The error persists both in an interactive session and with -ng through sbatch job submission. I don't think anything is wrong with my simulation file, because the same error occurs when I use the Ansys test file located at "sw/pkgs/***/ansysem/22.R2/v222/Linux64/schedulers/diagnostics/Projects/HFSS/OptimTee-DiscreteSweep.aedt".

What happens is that HFSS solves the adaptive frequency and then hangs while distributing the frequencies, showing the message {solved 1 out of 8 frequencies being solved in parallel}, until the MPI timeout is reached and the error appears. I've checked the HPC analysis, and the number of cores etc. seems okay.

I would really appreciate any feedback on this issue.

Bests

Ehsan

-

October 20, 2023 at 12:30 pm

randyk

Forum Moderator

Hi Ehsan,

Please consider using a newer version of AEDT; there have been changes to improve the SLURM integration.

I would expect the following three items might be playing a role in your MPI failure.

1. AEDT defaults to passwordless SSH communication.

- For SLURM, I recommend against using passwordless SSH; specify "tight integration" instead, letting the scheduler initiate the remote processes. This is done by adding the batchoption '/RemoteSpawnCommand'='scheduler', prefixed with the product name as in the example.

- ex: -batchoptions " 'HFSS/RemoteSpawnCommand'='scheduler'"

2. Intel MPI crashing when run on a newer OS variant such as RHEL8.x.

- AEDT defaults to Intel MPI 2018u3 (the binaries are integrated, not installed separately); this version requires additional libraries when run under newer OS releases.

- If your OS is RHEL8.x, I would recommend using the Intel 2021.6 option.

-- AEDT 2022R2 enables the beta flag for Intel MPI 2021 with the environment variable: ANSYSEM_FEATURE_F539685_MPI_INTEL21_ENABLE=1

-- AEDT 2023R1 and newer enable it with the batchoption: '/MPIVersion'='2021'

ex: 'HFSS/MPIVersion'='2021'

3. The execution hosts have multiple active networks.

- It is common for scheduler hosts to have both Ethernet and high-speed networks; you would need to specify the CIDR value of the preferred network.

- This is done with the batchoption "Desktop/Settings/ProjectOptions/AnsysEMPreferredSubnetAddress"

ex: If your preferred network is 192.168.16.123/255.255.255.0, you would set -batchoptions " 'Desktop/Settings/ProjectOptions/AnsysEMPreferredSubnetAddress'='192.168.16.0/24'"

- There are many CIDR calculators on the web if you need assistance converting the IP/subnet mask to CIDR.
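The IP/subnet mask to CIDR conversion can also be done directly in the shell. The following is only a sketch in plain bash for IPv4 addresses; the function name `to_cidr` is made up for this illustration and is not part of AEDT or SLURM.

```bash
#!/bin/bash
# Convert an IPv4 address and dotted netmask to network/prefix (CIDR) form.
# to_cidr is a hypothetical helper name used only for this example.
to_cidr() {
    local ip=$1 mask=$2
    local -a ip_oct mask_oct net_oct
    IFS=. read -r -a ip_oct <<< "$ip"
    IFS=. read -r -a mask_oct <<< "$mask"
    local prefix=0 i bits
    for i in 0 1 2 3; do
        # Network octet = address octet AND mask octet
        net_oct[i]=$(( ip_oct[i] & mask_oct[i] ))
        # Count the set bits of the mask octet to build the prefix length
        bits=${mask_oct[i]}
        while (( bits > 0 )); do
            prefix=$(( prefix + (bits & 1) ))
            bits=$(( bits >> 1 ))
        done
    done
    echo "${net_oct[0]}.${net_oct[1]}.${net_oct[2]}.${net_oct[3]}/${prefix}"
}

to_cidr 192.168.16.123 255.255.255.0   # prints 192.168.16.0/24
```

The printed value is what would go into AnsysEMPreferredSubnetAddress for the network in the example above.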

I also suggest changes to your script. Since you pasted a screen capture, I will post my example script in a separate response; you can modify it as needed.

Thanks

Randy

-

October 25, 2023 at 2:46 pm

Ehsan Hafezi

Subscriber

Hi Randy

Thanks for your reply and your great suggestions. I applied the second change, but it didn't help. I've reached out to our IT department about the third one.

In the meantime, could you please advise what the correct environment variable would be if the Intel version to be used was 2022.1.2? I checked, and besides version 18 we only have 2022, not the one you suggested. I'm looking for what to change in this variable: "ANSYSEM_FEATURE_F539685_MPI_INTEL21_ENABLE=1"

Regards

Ehsan

-

October 25, 2023 at 7:59 pm

randyk

Forum Moderator

Hi Ehsan,

We do not use the system MPI.

The IntelMPI binaries are embedded in our installation and referenced by relative path.

Please let me know if you are unable to resolve the MPI related failure with my suggested changes.

thanks

Randy
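The two ways of switching to the embedded Intel MPI 2021 mentioned earlier in this thread (the beta environment variable on 2022R2, the batchoption on 2023R1 and newer) could be selected by a submission script. A minimal sketch, assuming the release is passed as a string such as 2022R2; the function name `intel21_setting` and that string format are assumptions for illustration, and releases older than 2022R2 are not covered by this thread.

```bash
#!/bin/bash
# Print the setting that enables the embedded Intel MPI 2021 for a given
# AEDT release. intel21_setting is a hypothetical helper, not an AEDT tool.
intel21_setting() {
    local release=$1 product=${2:-HFSS}
    local re='^([0-9]{4})R([0-9]+)$'
    if [[ ! $release =~ $re ]]; then
        echo "unrecognized release: $release" >&2
        return 2
    fi
    local year=${BASH_REMATCH[1]} rel=${BASH_REMATCH[2]}
    if (( year >= 2023 )); then
        # 2023R1 and newer: enabled through a batchoption
        echo "batchoption '${product}/MPIVersion'='2021'"
    elif (( year == 2022 && rel == 2 )); then
        # 2022R2: enabled through the beta environment variable
        echo "env ANSYSEM_FEATURE_F539685_MPI_INTEL21_ENABLE=1"
    else
        echo "not covered in this thread: $release" >&2
        return 1
    fi
}

intel21_setting 2022R2
intel21_setting 2023R1
```

Either way the setting selects among the MPI binaries embedded in the AEDT installation; it does not point AEDT at a system-installed Intel MPI.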

-

October 20, 2023 at 12:47 pm

randyk

Forum Moderator

Hi Ehsan,

Consider basing your script on this example. I highlighted items to watch/change to match your system.

Create "job.sh" with the following contents:

    #!/bin/bash
    #SBATCH -N 3 # allocate 3 nodes
    #SBATCH -n 12 # 12 tasks total
    #SBATCH -J AnsysEMTest # sensible name for the job

    # Set job folder, scratch folder, project, and design (Design is optional)
    JobFolder=$(pwd)
    # Names the batchoptions file below (assumption: reuse the Slurm job name)
    JobName=${SLURM_JOB_NAME}
    ProjName=OptimTee-DiscreteSweep-FineMesh.aedt
    DsnName="TeeModel:Nominal:Setup1"

    # Executable path (set this to your own AnsysEM installation path)
    AppFolder=//AnsysEM /v222/Linux64

    # Copy AEDT Example to $JobFolder for this initial test
    cp ${AppFolder}/schedulers/diagnostics/Projects/HFSS/${ProjName} ${JobFolder}/${ProjName}

    # setup environments and srun
    export ANSYSEM_GENERIC_MPI_WRAPPER=${AppFolder}/schedulers/scripts/utils/slurm_srun_wrapper.sh
    export ANSYSEM_COMMON_PREFIX=${AppFolder}/common
    export ANSYSEM_TASKS_PER_NODE=${SLURM_TASKS_PER_NODE}

    # If enabling Intel MPI 2021 on AEDT 2022R2 to support RHEL8.x (beta flag)
    export ANSYSEM_FEATURE_F539685_MPI_INTEL21_ENABLE=1

    # setup srun
    srun_cmd="srun --overcommit --export=ALL -n 1 --cpu-bind=none --mem-per-cpu=0 --overlap "
    # note: the srun '--overlap' option was introduced in SLURM version 20.11; if using an older SLURM version, remove the "--overlap"

    # MPI timeout defaults to 30 min for cloud; suggest lowering to 120 or 240 seconds for on-prem
    export MPI_TIMEOUT_SECONDS=120

    # System networking environment variables - HPC system dependent, should not be edited by users!
    # export ANSOFT_MPI_INTERCONNECT=ib
    # export ANSOFT_MPI_INTERCONNECT_VARIANT=ofed

    # Skip dependency check
    export ANS_NODEPCHECK=1

    # Setup Batchoptions
    echo "\$begin 'Config'" > ${JobFolder}/${JobName}.options
    # echo "'Desktop/Settings/ProjectOptions/HPCLicenseType'='Pack'" >> ${JobFolder}/${JobName}.options
    echo "'HFSS/RAMLimitPercent'=90" >> ${JobFolder}/${JobName}.options
    echo "'HFSS/RemoteSpawnCommand'='scheduler'" >> ${JobFolder}/${JobName}.options
    # echo "'Desktop/Settings/ProjectOptions/AnsysEMPreferredSubnetAddress'='192.168.16.0/24'" >> ${JobFolder}/${JobName}.options
    echo "\$end 'Config'" >> ${JobFolder}/${JobName}.options

    # Run Job
    ${srun_cmd} ${AppFolder}/ansysedt -ng -monitor -waitforlicense -useelectronicsppe=1 -distributed -auto -machinelist numcores=12 -batchoptions ${JobFolder}/${JobName}.options -batchsolve ${DsnName} ${JobFolder}/${ProjName}

Note: the "numcores=xx" value must match the assigned resource core count.

Then run it:

    $ dos2unix ./job.sh
    $ chmod +x ./job.sh
    $ sbatch ./job.sh
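Since numcores has to match the allocation, the value could be derived from the environment SLURM sets inside the job rather than typed by hand. A minimal sketch, assuming the allocation is described by SLURM_NTASKS and, optionally, SLURM_CPUS_PER_TASK; the function name `slurm_numcores` is made up for this example.

```bash
#!/bin/bash
# Compute the core count of the current allocation from Slurm's variables.
# slurm_numcores is a hypothetical helper name used only for illustration.
slurm_numcores() {
    local ntasks=${SLURM_NTASKS:-}
    local cpus_per_task=${SLURM_CPUS_PER_TASK:-1}   # treated as 1 when unset
    if [[ -z $ntasks ]]; then
        echo "SLURM_NTASKS is not set; run this inside a Slurm job" >&2
        return 1
    fi
    echo $(( ntasks * cpus_per_task ))
}

# Values are set by hand in a subshell only so the sketch can be tried
# outside a job; they mirror the "-n 12" allocation of job.sh.
( SLURM_NTASKS=12; SLURM_CPUS_PER_TASK=1; slurm_numcores )   # prints 12
```

Inside job.sh the result could then be passed as -machinelist numcores=$(slurm_numcores), so the request and the solver setting cannot drift apart.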

October 20, 2023 at 1:11 pm

randyk

Forum Moderator

One additional suggestion: once you iron out your required batchoptions, you can use the "UpdateRegistry" command to make them the system default, so you no longer need to add them to your submission.

As "root" or the installation owner, run the following to create /path/AnsysEM/v222/Linux64/config/default.XML

cd /path/AnsysEM/v222/Linux64

./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Desktop/Settings/ProjectOptions/ProductImprovementOptStatus" -RegistryValue 0 -RegistryLevel install

./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "HFSS/MPIVendor" -RegistryValue "Intel" -RegistryLevel install

./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "HFSS 3D Layout Design/MPIVendor" -RegistryValue "Intel" -RegistryLevel install

./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "HFSS-IE/MPIVendor" -RegistryValue "Intel" -RegistryLevel install

./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Maxwell 2D/MPIVendor" -RegistryValue "Intel" -RegistryLevel install

./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Maxwell 3D/MPIVendor" -RegistryValue "Intel" -RegistryLevel install

./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Q3D Extractor/MPIVendor" -RegistryValue "Intel" -RegistryLevel install

./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Icepak/MPIVendor" -RegistryValue "Intel" -RegistryLevel install

./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "HFSS/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install

./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "HFSS 3D Layout Design/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install

./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "HFSS-IE/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install

./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Maxwell 3D/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install

./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Maxwell 2D/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install

./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Q3D Extractor/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install

./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Icepak/RemoteSpawnCommand" -RegistryValue "scheduler" -RegistryLevel install

./UpdateRegistry -set -ProductName ElectronicsDesktop2022.2 -RegistryKey "Desktop/Settings/ProjectOptions/AnsysEMPreferredSubnetAddress" -RegistryValue "192.168.16.0/24" -RegistryLevel install
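The per-product commands above differ only in the product name, so they could be generated with a loop. The sketch below only prints the commands (a dry run) so they can be reviewed before being run as the installation owner; the function name `print_registry_cmds` is hypothetical, and the flags are the ones used in the list above.

```bash
#!/bin/bash
# Print the MPIVendor and RemoteSpawnCommand UpdateRegistry commands for
# every product. Nothing is executed; the output is meant for review.
print_registry_cmds() {
    local product_name="ElectronicsDesktop2022.2"
    local products=("HFSS" "HFSS 3D Layout Design" "HFSS-IE" "Maxwell 2D"
                    "Maxwell 3D" "Q3D Extractor" "Icepak")
    local settings=("MPIVendor=Intel" "RemoteSpawnCommand=scheduler")
    local setting product
    for setting in "${settings[@]}"; do
        for product in "${products[@]}"; do
            # ${setting%%=*} is the key name, ${setting#*=} is its value
            printf './UpdateRegistry -set -ProductName %s -RegistryKey "%s/%s" -RegistryValue "%s" -RegistryLevel install\n' \
                "$product_name" "$product" "${setting%%=*}" "${setting#*=}"
        done
    done
}

print_registry_cmds
```

Once the printed lines look right, they can be saved to a file and run from the Linux64 installation folder.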

-

- The topic ‘MPI failure at frequency sweep’ is closed to new replies.

© 2026 Copyright ANSYS, Inc. All rights reserved.