TAGGED: ansys-hfss, ansys-hpc, batch-script


November 8, 2024 at 5:20 pm

katkar

SubscriberHi!

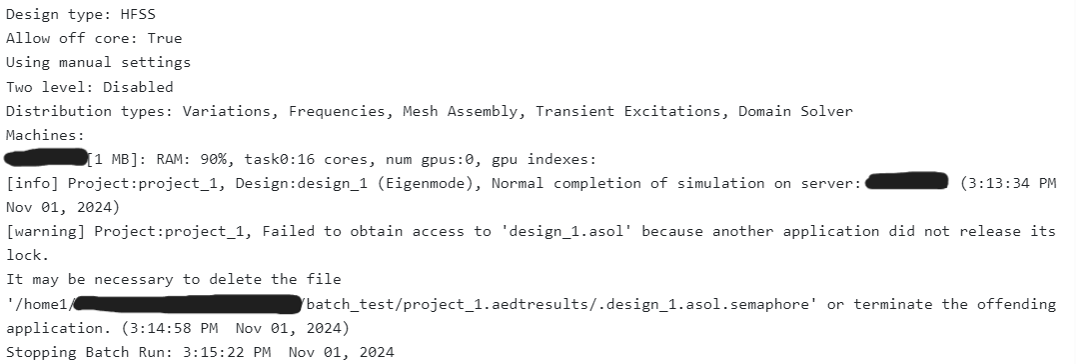

I hope you are all doing well. I am posting because I am tying to run an eigenmode simulation using a slurm script on a high performance computing cluster, but I am running into this error (the image is from the log file that is automatically created after submitting the slurm script):

I tried deleting the asol file and rerunning, but the issue persists. The simulation I am trying to run is a test, so it is just a box with vacuum assigned as the material (simple enough that I am not hitting any RAM or storage limits). When I ran it in interactive mode, it worked fine, so I do not think there is anything wrong with the file or with my permission to store the solutions in the working directory.

If any of you have ideas on what else to try, I would really appreciate it. Thank you in advance!

Here is my script:

#!/usr/bin/env bash
#SBATCH --job-name=HFSS_Simulation
#SBATCH --nodes=1 # Number of compute nodes
#SBATCH --ntasks-per-node=16 # Number of tasks (cores) per node
#SBATCH --time=04:00:00 # Maximum runtime
#SBATCH --partition=small # Partition to submit to
#SBATCH --output=hfss_output_%j.txt # Output file
#SBATCH --error=hfss_error_%j.txt # Error file
#SBATCH -A PHY23031
#SBATCH --mail-type=ALL
#SBATCH --mail-user= user email

export TMPDIR=/scratch1/username/tmp
mkdir -p $TMPDIR

# Load Ansys HFSS module (or set environment variables for Ansys if TACC doesn't have a module system)
module load ansys/2022R2 # Adjust based on available versions

# Set up the environment to run HFSS
export HFSS_EXE="/home1/apps/ANSYS/2022R2/AnsysEM/v222/Linux64/ansysedt" # Path to the HFSS executable

# Path to your HFSS project file (".aedt")
PROJECT_FILE="/home1/username/batch_test/project_1.aedt"
DESIGN_NAME="design_1"

# Create a temporary directory for the job on the compute node
WORKDIR=$SLURM_SUBMIT_DIR/$SLURM_JOB_ID
mkdir -p $WORKDIR
cd $WORKDIR

# Copy the project file to the working directory (optional)
cp $PROJECT_FILE $WORKDIR

# Run HFSS with MPI across multiple nodes
mpirun -np $SLURM_NTASKS $HFSS_EXE -ng -BatchSolve $DESIGN_NAME:Nominal $PROJECT_FILE

# Clean up (optional)
cp -r $WORKDIR/* $SLURM_SUBMIT_DIR

November 12, 2024 at 1:04 pm

Aymen Mzoughi

Ansys Employee

Please reach out to Ansys support at customer.ansys.com

Just a reminder that you may use Ansys Community Feed to develop your network and foster community by joining the appropriate community group and making connections. If you are interested in Electronics content, please join the Electronics group (link - https://innovationspace.ansys.com/community/groups/?electronics-community/)
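Two details in the posted script may be worth checking while waiting on support. First, the project is copied into `$WORKDIR`, but the solve is still pointed at the original `$PROJECT_FILE`, so the copy is never used. Second, `mpirun -np $SLURM_NTASKS` starts one copy of the command per task, which here would mean 16 separate `ansysedt` processes opening the same project at once. Whether either of these causes the error in the screenshot is not confirmed; the following is only a minimal sketch of a variant that solves the staged copy with a single `ansysedt` process. The helper names `stage_project` and `solve_once` and the `RUN_HFSS` switch are hypothetical, and only the flags already present in the original script (`-ng`, `-BatchSolve`) are used. Options for spreading the solve over cores are left out because they depend on the installed version.

```shell
#!/usr/bin/env bash
# Sketch only: helper names are hypothetical, not part of any Ansys tooling.

# Copy the project into a per-job directory and print the path of the copy.
stage_project() {
  local src="$1" workdir="$2"
  mkdir -p "$workdir"
  cp "$src" "$workdir/"
  printf '%s\n' "$workdir/$(basename "$src")"
}

# Launch the solver exactly once (no mpirun wrapper) on the given project.
# Uses only the flags that already appear in the original script.
solve_once() {
  local exe="$1" design="$2" project="$3"
  "$exe" -ng -BatchSolve "${design}:Nominal" "$project"
}

# Run only when explicitly requested, so the helpers can be sourced safely.
# HFSS_EXE, PROJECT_FILE and DESIGN_NAME are assumed to be set as in the post.
if [ "${RUN_HFSS:-0}" = "1" ]; then
  staged=$(stage_project "$PROJECT_FILE" "$SLURM_SUBMIT_DIR/$SLURM_JOB_ID")
  cd "$(dirname "$staged")"
  solve_once "$HFSS_EXE" "$DESIGN_NAME" "$staged"
fi
```

In a Slurm script this would replace the `cp` and `mpirun` lines, with `RUN_HFSS=1` exported beforehand. Because the solver then works on the copy inside the job directory, the results should also land there rather than next to the original project.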


© 2026 Copyright ANSYS, Inc. All rights reserved.