-

-

September 28, 2019 at 3:40 am

kolyan007

Subscriber

Hello,

I'm playing with Distributed solving in version 19.4 R2. My cluster has 3 PCs. A couple of minutes after the solver started, the job failed.

I can't find what caused it. Could you please review the log file and advise?

Thanks, Nick

-

September 30, 2019 at 7:00 pm

tsiriaks

Ansys Employee

Hi Nick,

Sorry, ANSYS employees are not allowed to download file attachments. Could you post this as inline text or as inline screenshots?

Are you using the ANSYS Student (free) version?

Is this a Mechanical, Fluids, or Electronics product?

Thanks,

Win

-

September 30, 2019 at 7:39 pm

-

October 1, 2019 at 12:26 am

kolyan007

Subscriber

Thanks Peter, good spotting. That folder ScrCA9A is missing under _ProjectScratch. How can I find out why Mechanical is trying to access it? Could it be because of some references in the projects?

-

October 1, 2019 at 5:48 pm

tsiriaks

Ansys Employee

Thanks for the screenshot, Peter.

So, this is Mechanical + ANSYS RSM.

I'm going to need much more information to proceed. Note: that folder should be created automatically by the solver.

When you said 'cluster', do you mean it has a job scheduler, or is it just 3 machines connected to each other with no job scheduler? If you have your own scheduler, what is it called?

How do you submit the jobs? Please show screenshots of the workflow and setup in both the Workbench and RSM Configuration GUIs.

Please post a screenshot of the top part of the RSM Job Report.

Thanks,

Win

-

October 2, 2019 at 12:16 pm

kolyan007

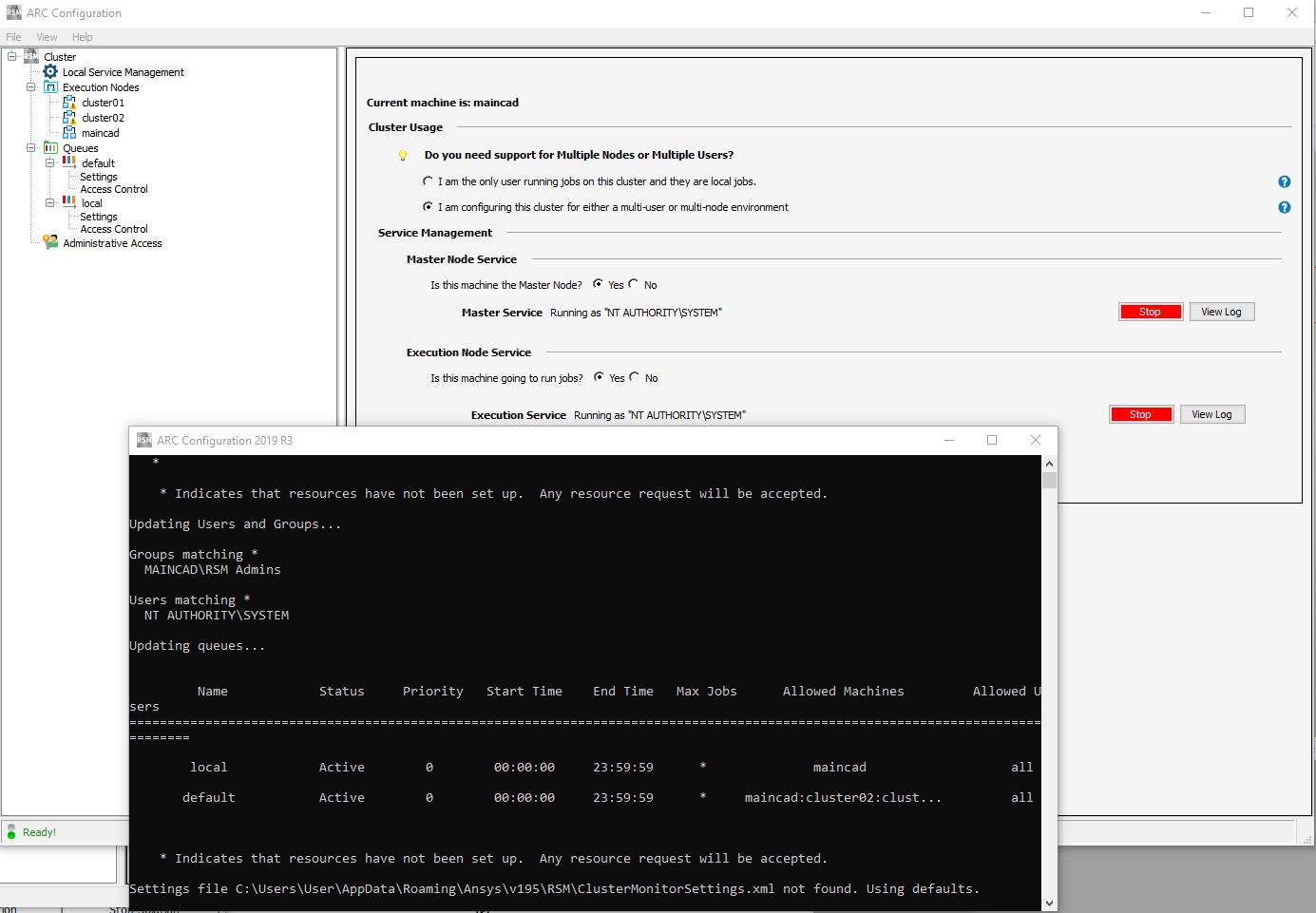

Subscriber

Thanks for your reply. I thought there might be some incompatibility between my 3-machine cluster and R2, so I reinstalled the OS on the master machine (maincad), which I use to send tasks to the slaves in the cluster, installed R3 on it and on the slaves, and then replicated the settings.

I don't have my own scheduler. All machines are connected to each other.

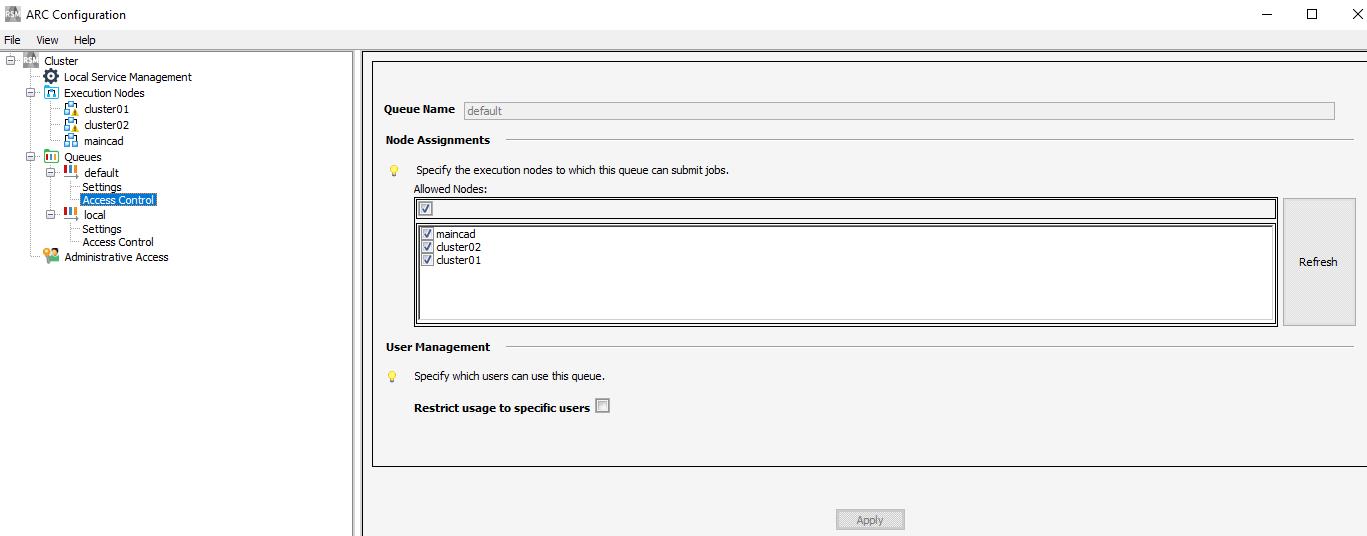

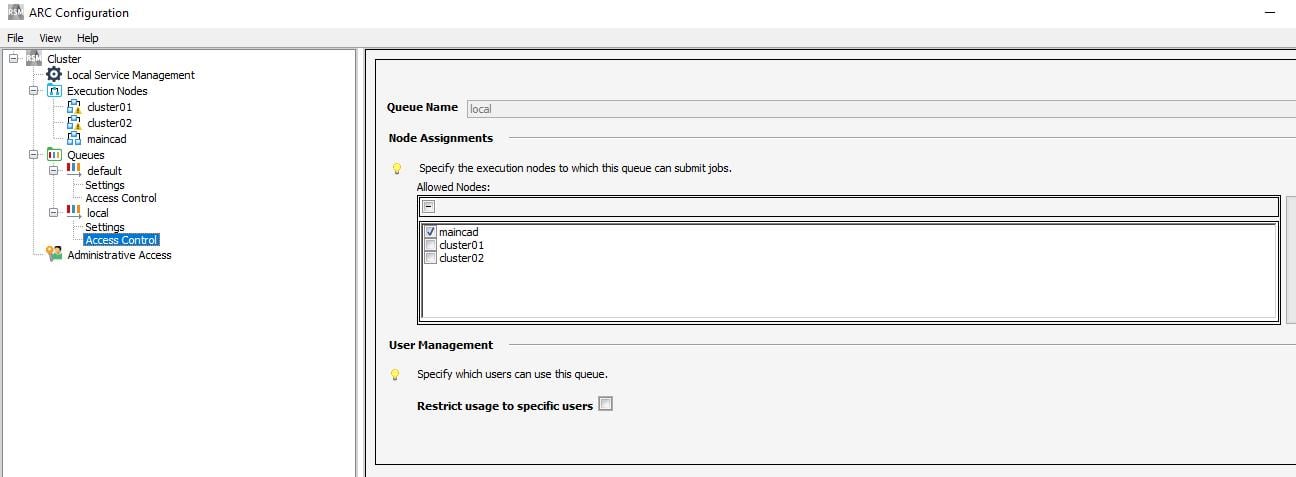

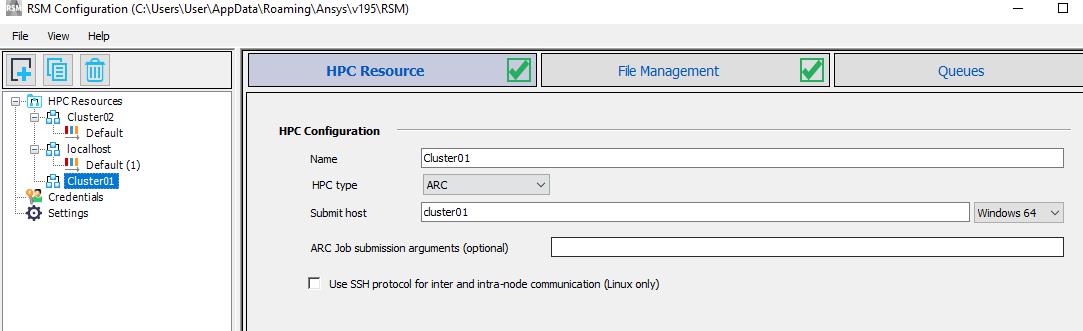

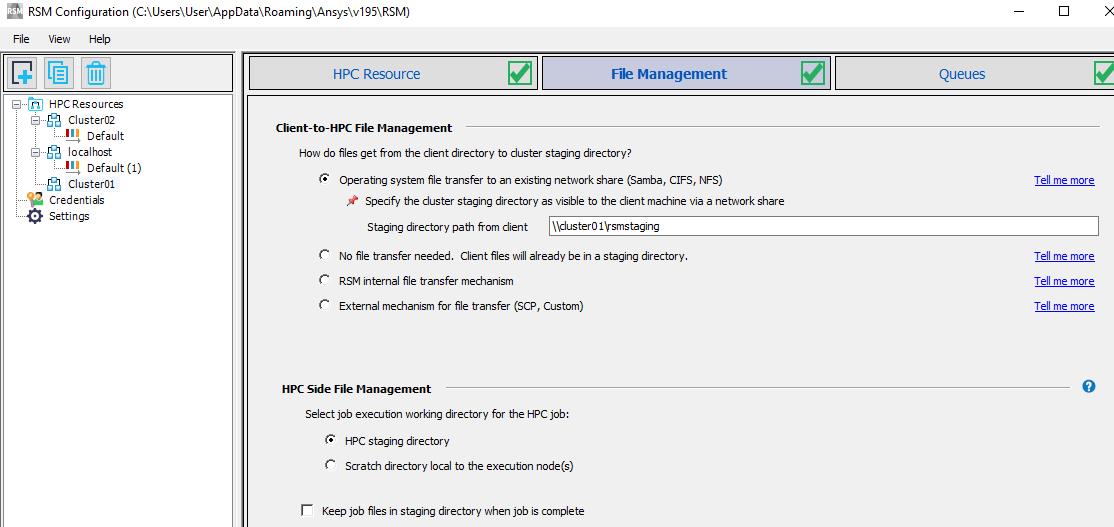

Below are screenshots of my configuration.

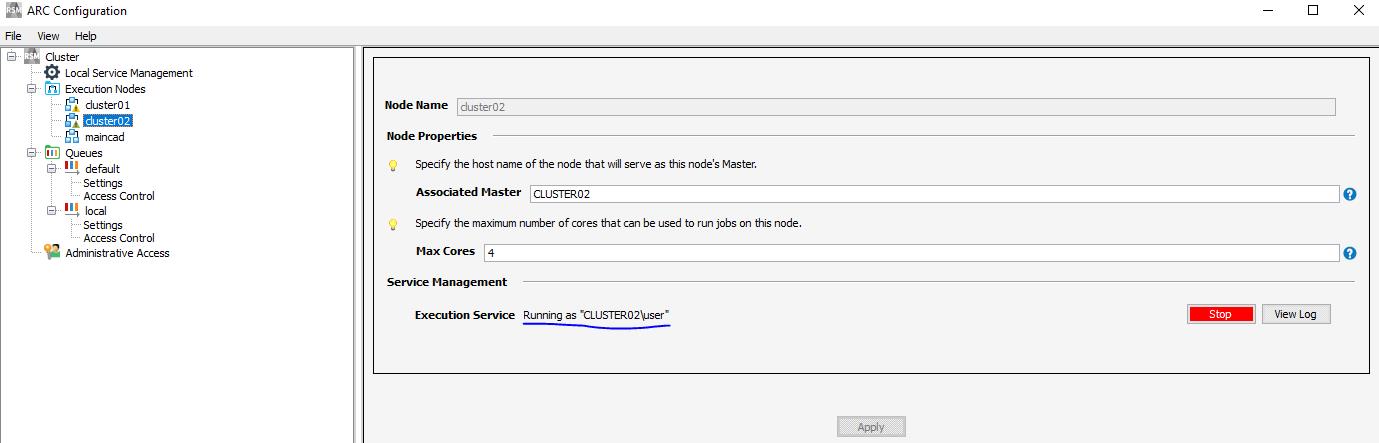

Note: I'm not sure why, but Service Management is running as cluster02\user. I'm not sure how to change it back to the default.

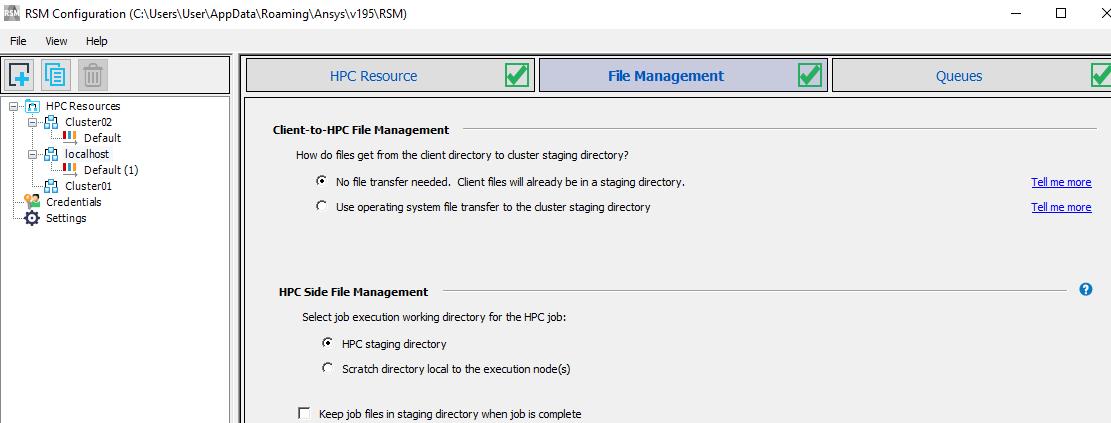

RSM config on master:

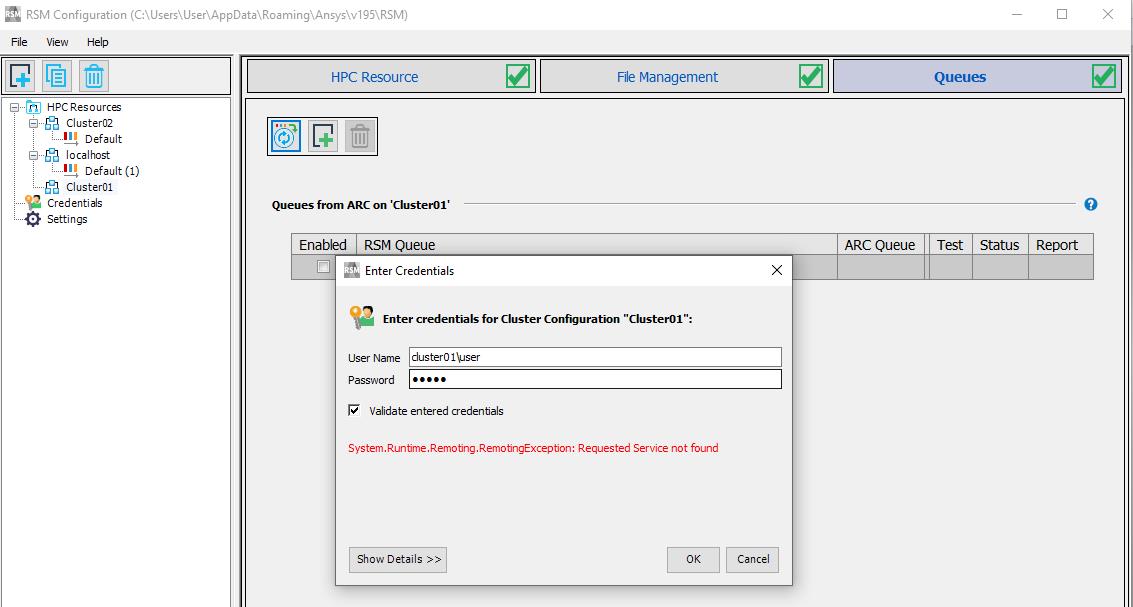

For some reason, refreshing the queues fails.

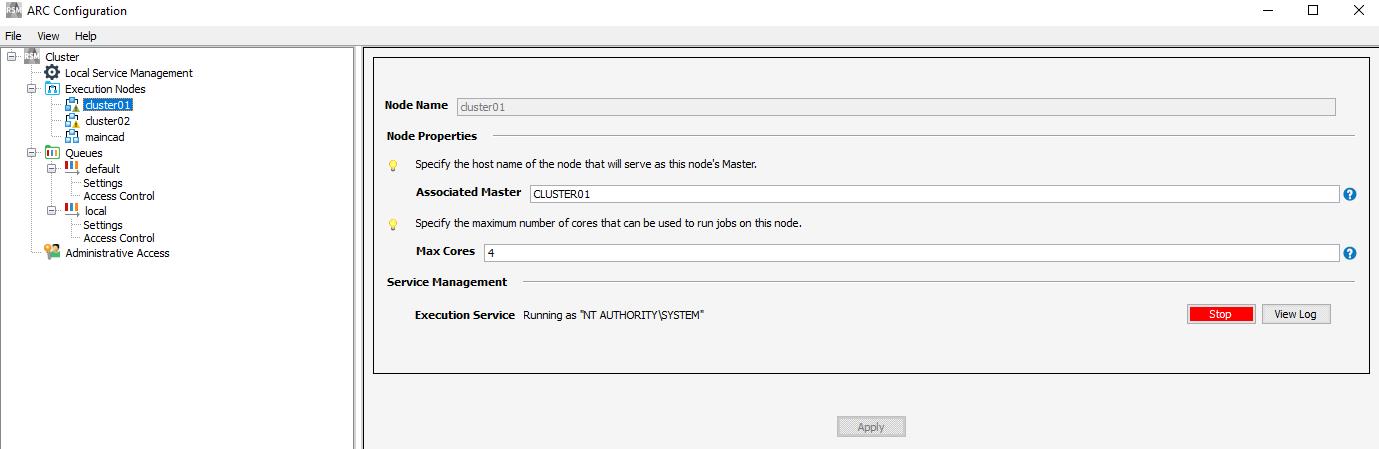

This is after I deleted the credentials for cluster01.

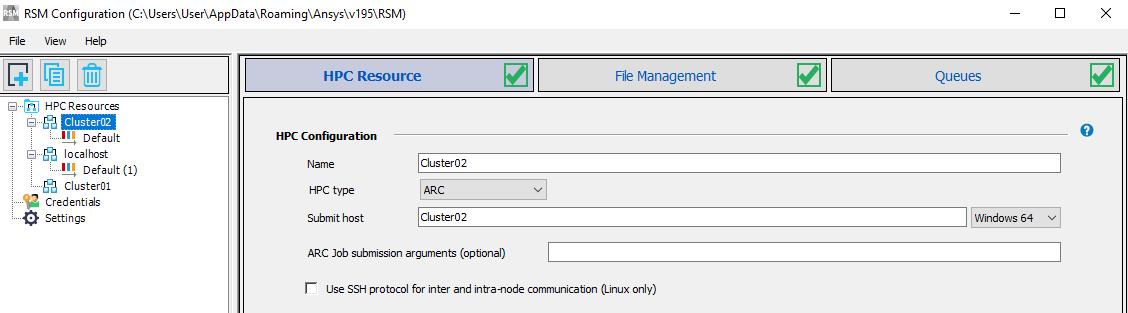

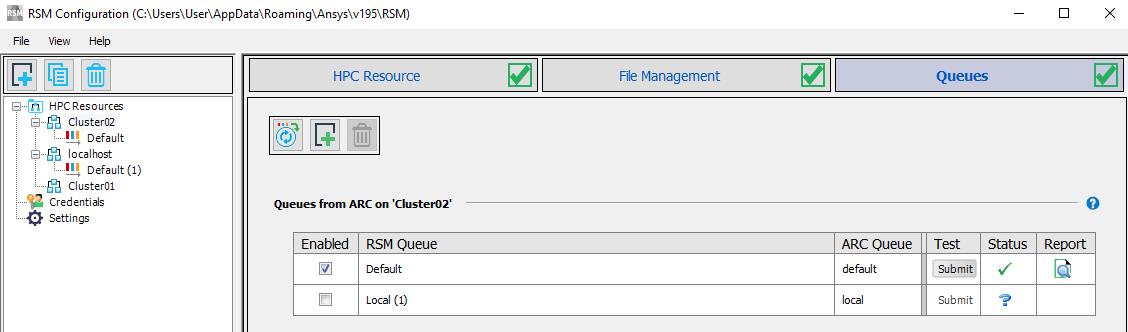

RSM config for cluster02

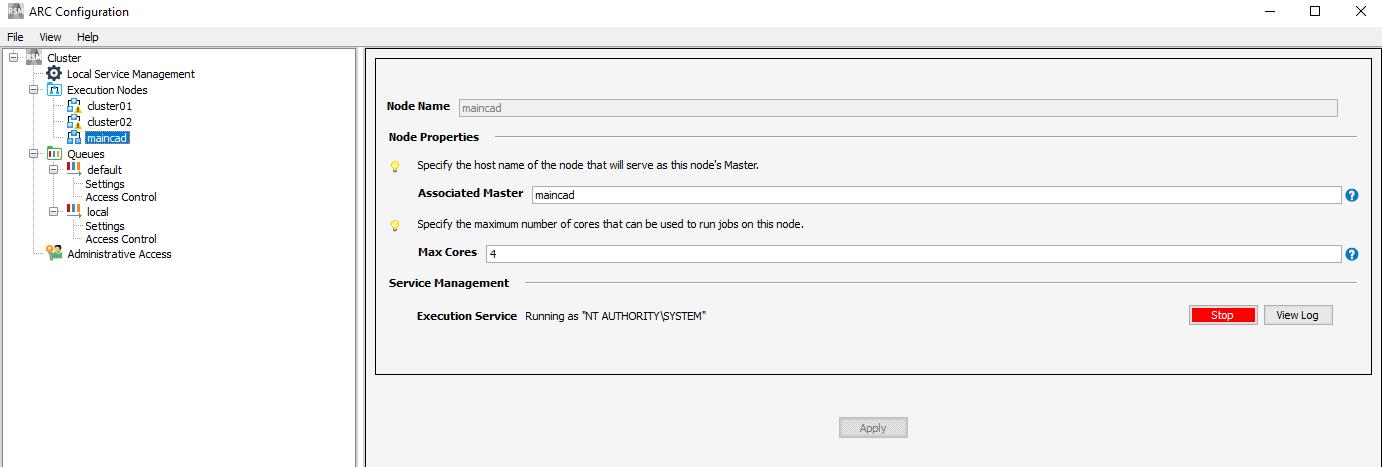

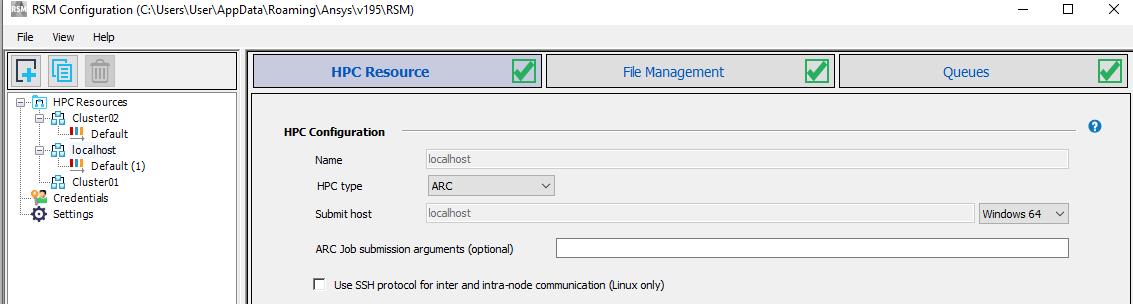

And RSM config for localhost

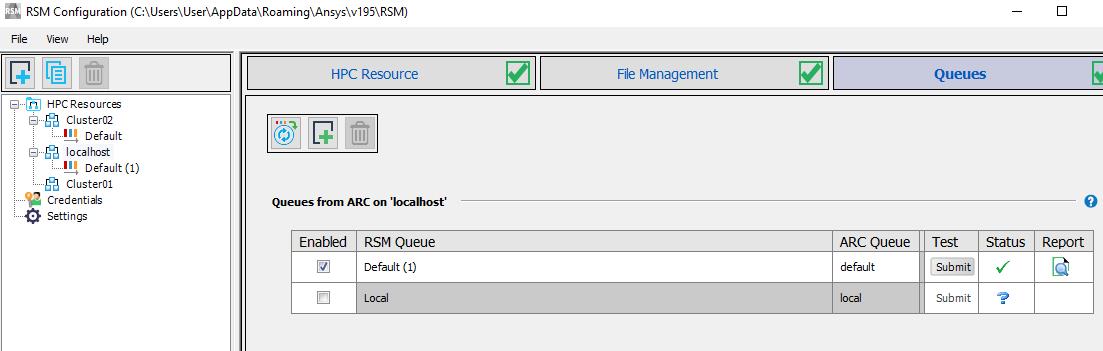

These 3 services are running on each machine in the cluster:

Master:

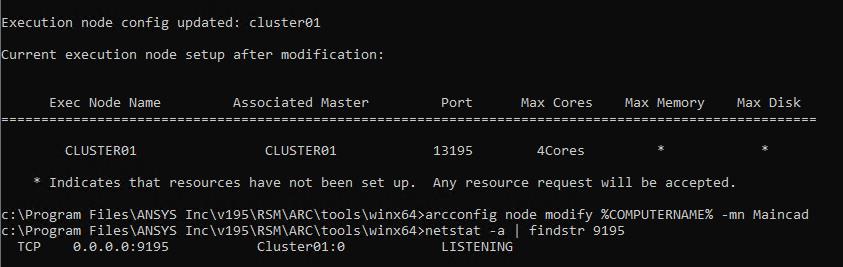

Cluster01

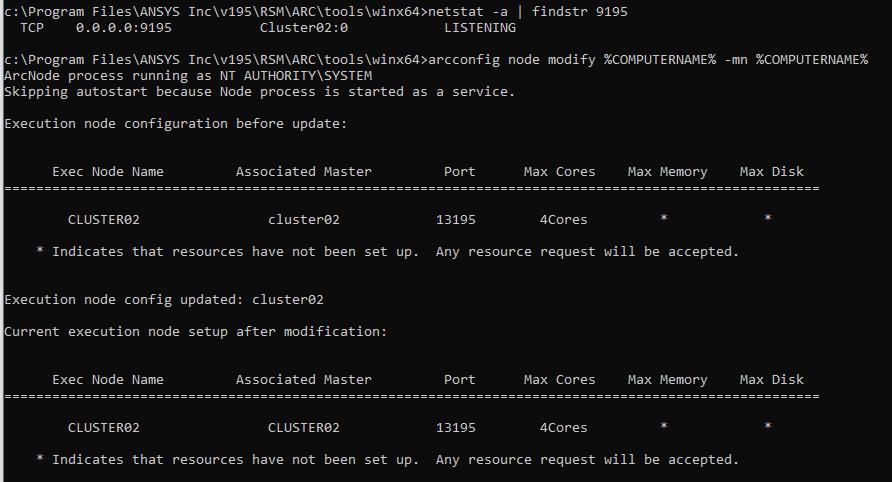

Cluster02

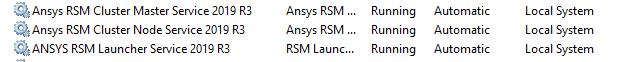

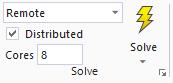

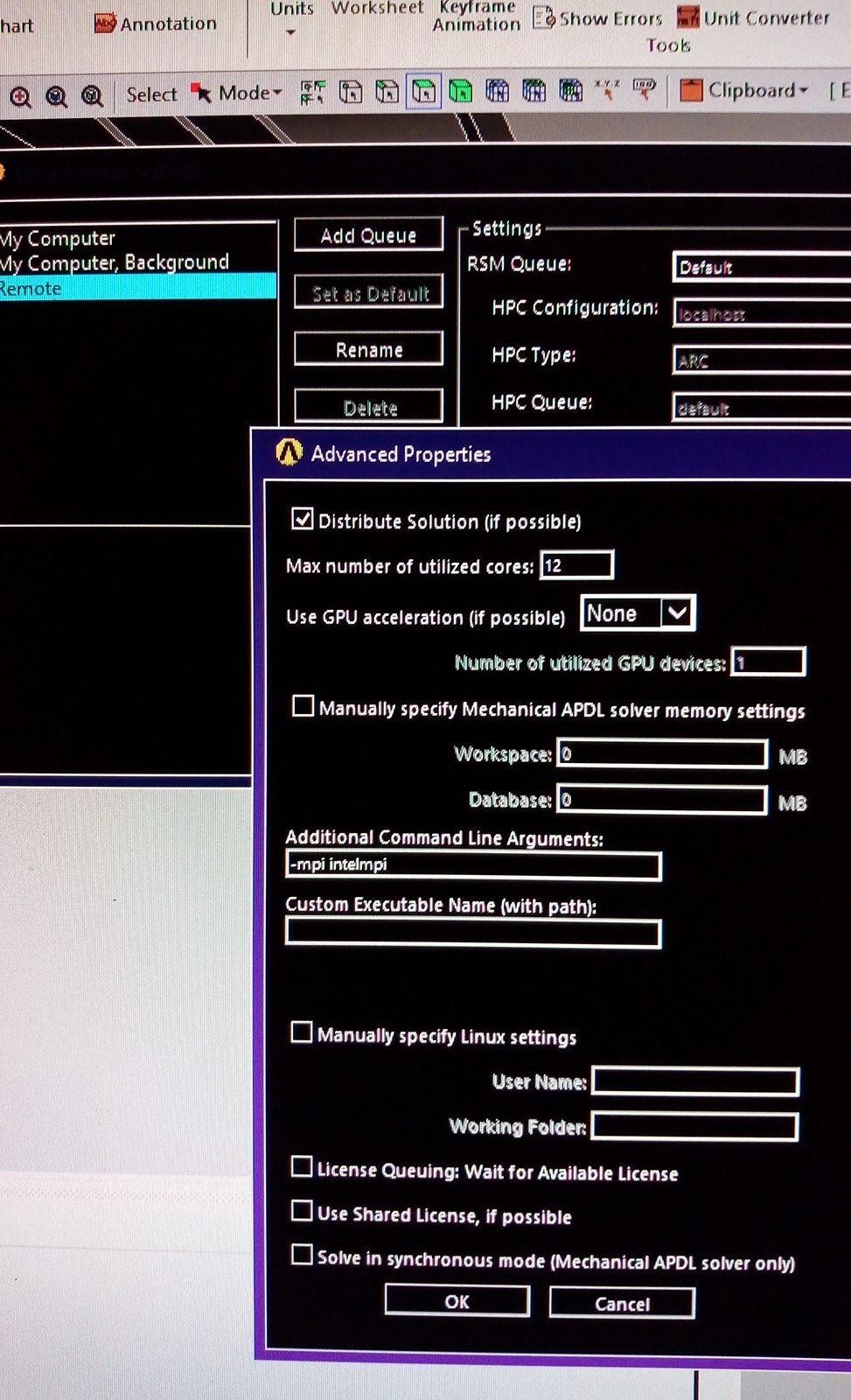

My Solve Process settings:

I've also tested telnet to port 9195 and SMB access in Windows, and there are no issues from the OS perspective. All machines use the same credentials.

Additionally, I've found that if I run arcconfig node modify %COMPUTERNAME% -mn maincad, the test in RSM stays in scheduled mode, but if I replace my master node name with %COMPUTERNAME%, the test goes through fine. Which option is correct?

-

October 2, 2019 at 4:25 pm

tsiriaks

Ansys Employee

OK, so you are using ANSYS's own lightweight scheduler (ARC).

I normally use commands to set this up, so I'm not familiar with the settings in the ARC Config GUI, but I've asked a colleague to help check it.

However, I see a lot of misconfigurations.

Essentially, this is what you need to do to set up RSM-ARC properly:

Create a shared directory

Create a directory on a machine that you would like to use as the staging directory.

For this example, I will use e:\RSMStaging.

Now share that directory with all solving machines and the 'submit host' so that anyone can read and write to it.

Right-click RSMStaging, select Properties, then select the Sharing tab.

Click on Share...

Type in:

Everyone

And click Add

Type in:

Domain Computers

Click Add

Type in:

Domain Users

Click Add

Give each of the users Read/Write access

Then click "Share"

Then click Done

On the 'submit host' machine (this is a dedicated node; do not install these services on all nodes):

This is the machine that receives requests from clients, then queues and distributes jobs to solve on solving machines. This machine itself can also be a solving machine.

Two components are required here:

1. RSM Launcher service, which handles communication between the client machines and this machine.

2. ARC Master service, one of the two parts of the ARC job scheduler, a lightweight job scheduler that ANSYS provides. This part handles communication between the RSM Launcher and the solving machine(s), essentially queueing and distributing jobs to them.

On the solving machine(s):

Install ARC Node service, which is used for communicating with the ARC Master service.
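The role split described above can be expressed as a small consistency check over a hand-written inventory of which services run on which machine. This is a hypothetical Python sketch: check_layout and the service labels are illustrative names, not part of RSM or ARC, and the code does not query Windows for installed services.

```python
"""Sketch: check an RSM/ARC service layout (hand-written inventory, not a live query)."""

# Illustrative labels only; these are not the exact Windows service names.
LAUNCHER = "RSM Launcher"
ARC_MASTER = "ARC Master"
ARC_NODE = "ARC Node"


def check_layout(inventory: dict[str, set[str]], solvers: set[str]) -> list[str]:
    """List problems in a host -> installed-services mapping."""
    problems: list[str] = []
    masters = sorted(h for h, services in inventory.items() if ARC_MASTER in services)
    launchers = sorted(h for h, services in inventory.items() if LAUNCHER in services)
    # Exactly one dedicated submit host should run ARC Master...
    if len(masters) != 1:
        problems.append(f"expected one ARC Master host, found {masters}")
    # ...and RSM Launcher should run on that same host and nowhere else.
    if launchers != masters:
        problems.append(f"RSM Launcher hosts {launchers} should match ARC Master hosts {masters}")
    # Every solving machine needs ARC Node (the submit host may also be a solver).
    for host in sorted(solvers):
        if ARC_NODE not in inventory.get(host, set()):
            problems.append(f"{host}: ARC Node missing, so it cannot run jobs")
    return problems


if __name__ == "__main__":
    solvers = {"maincad", "cluster01", "cluster02"}
    # All three services on every machine, as described earlier in the thread.
    before = {host: {LAUNCHER, ARC_MASTER, ARC_NODE} for host in solvers}
    # Launcher and ARC Master kept on the submit host (maincad) only.
    after = {
        "maincad": {LAUNCHER, ARC_MASTER, ARC_NODE},
        "cluster01": {ARC_NODE},
        "cluster02": {ARC_NODE},
    }
    print("before:", check_layout(before, solvers))
    print("after:", check_layout(after, solvers) or "layout OK")
```

With all three services on every machine, the check reports three ARC Master hosts. With the Launcher and ARC Master on maincad only, it returns an empty list.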

-

October 2, 2019 at 5:48 pm

JakeC

Ansys Employee

Hi Nick,

There seem to be a number of different issues to look at here.

First, please uninstall the ARC Master and RSM Launcher services from the two machines that are NOT the head node.

You can do this from the command line by doing the following:

Start -> type cmd -> right-click Command Prompt and select Run as administrator.

At the prompt type:

cd /d "c:\program files\ansys inc\v195\RSM\bin"

ansunconfigrsm -launcher

cd /d "c:\program files\ansys inc\v195\RSM\ARC\tools\winx64"

uninstallservice -arcmaster

This should leave the ARC Node service running on MainCad, Cluster01 and Cluster02.

The ARC Master and Launcher services should then be running on MainCad only.

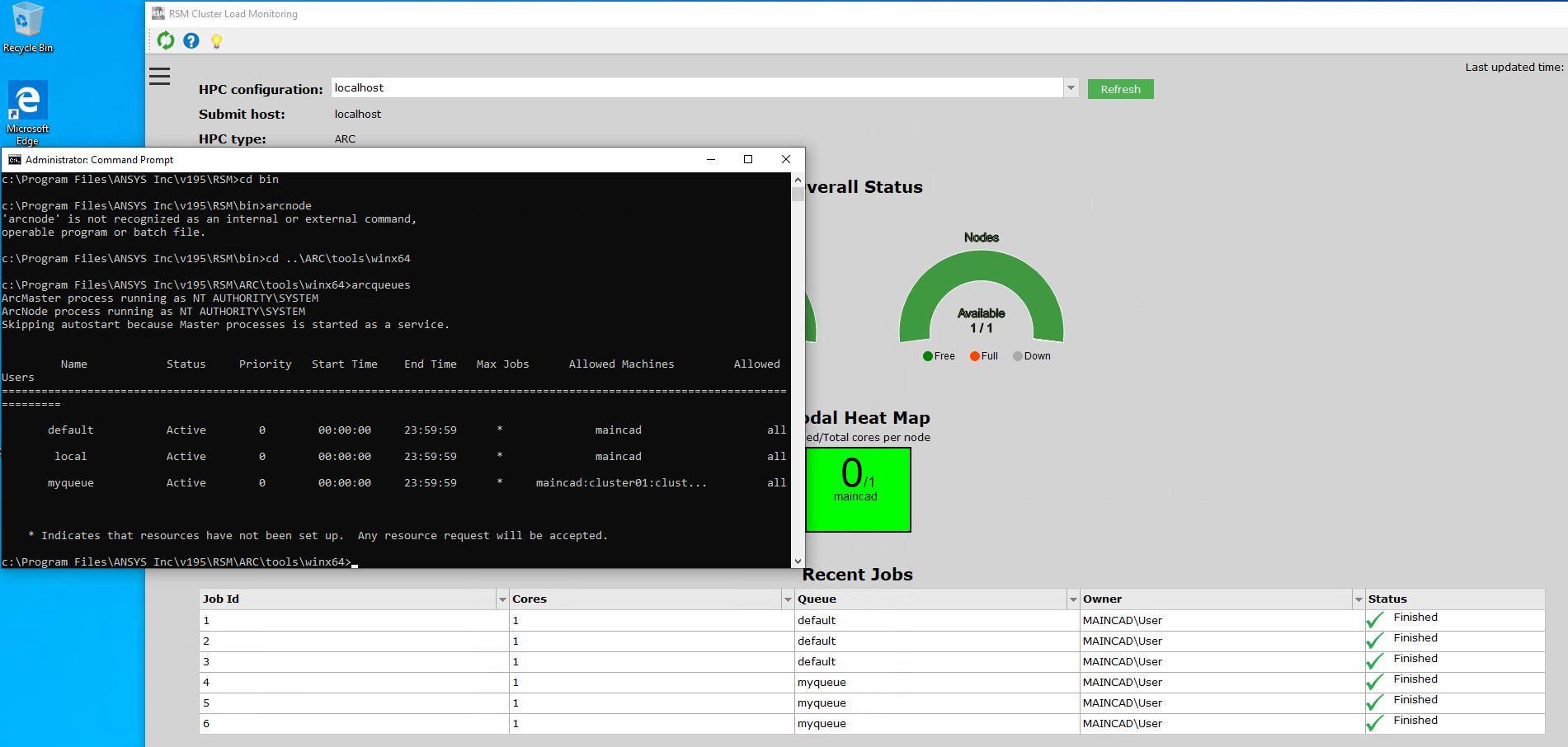

Once that is done, go to MainCad, open an administrator command prompt as described above, and type:

cd /d "c:\program files\ansys inc\v195\RSM\ARC\tools\winx64"

arcnodes

arcqueues

Then please post a screenshot of the output.

Thank you,

Jake

-

October 2, 2019 at 6:28 pm

tsiriaks

Ansys Employee

(Continued)

On client machines:

Note: all the information here is the same on every client machine. The Submit Host is the dedicated node described above; do not use local machine information.

Launch RSM Configuration GUI

Once you are in the GUI, click the plus sign at the top left, then fill in the name or IP address of the Submit Host machine and its OS.

Once this is done, click “Apply”.

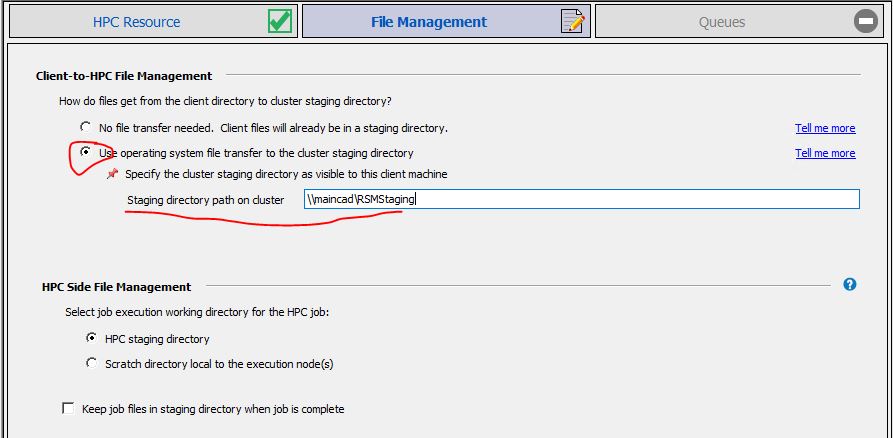

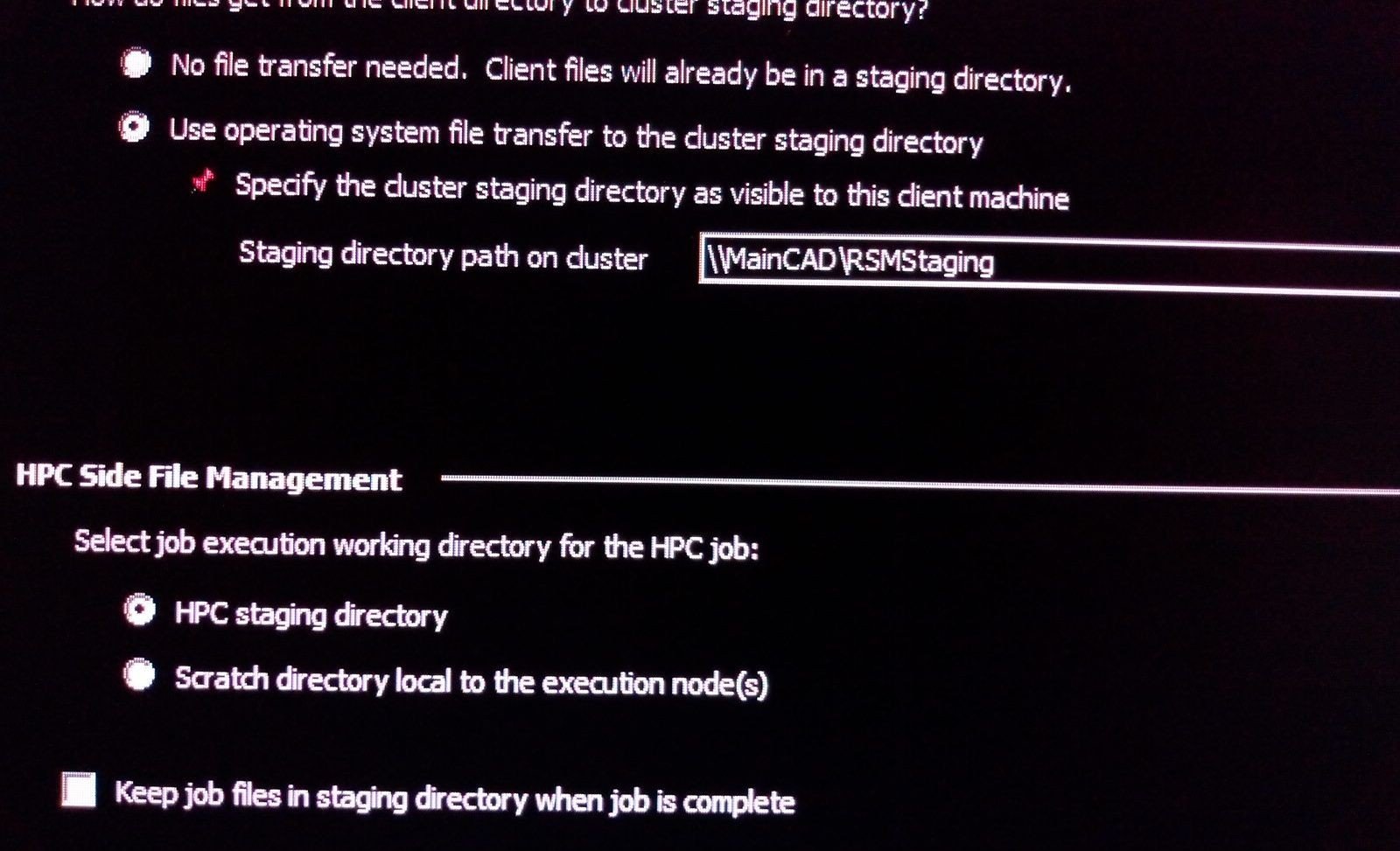

Go to the “File Management” tab, select “Operating System File Transfer”, and enter the share path to the RSMStaging directory you shared earlier (again, this is the same UNC path you set up for sharing above; do not use local machine information).

Once this is done, click “Apply”.

Then go to the “Queues” tab, click “Import/Refresh Cluster Queues”, and specify your login username and password. This will bring up all the queues. You can enable or disable any queue you like.

Then make sure that port 9195 is open between the client and the ‘submit host’ machines.
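One way to repeat the telnet check from a script is a plain TCP connect. This is a minimal Python sketch that assumes Python 3 is available on the client. port_open is a hypothetical helper name. A successful connect only shows that something is listening on the port, not that the RSM Launcher itself is healthy.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host name and tries each returned address.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts and name-resolution failures all raise OSError subclasses.
        return False


# Example (run on a client machine; host name and port taken from this thread):
# print(port_open("maincad", 9195))
```

The same helper works for any other TCP port you need to verify between machines.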

-

October 3, 2019 at 1:25 am

tsiriaks

Ansys Employee

Sounds good, please keep us posted.

Thanks,

Win

-

October 5, 2019 at 8:47 am

-

October 6, 2019 at 10:31 am

-

October 7, 2019 at 1:30 pm

JakeC

Ansys Employee

It looks like something is blocking the Intel MPI processes:

firewall / User Account Control / antivirus / etc.

You could try IBM MPI instead.

First make sure IBM/Platform MPI is installed on all machines.

Then, in Mechanical, go to Solve Process Settings -> select your configuration -> Advanced.

In Additional Command Line Arguments, add:

-mpi ibmmpi

Then try it again.
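To show where -mpi ibmmpi ends up, the sketch below rebuilds the solver command line that appears in the job logs later in this thread. The -machines value is host:cores pairs joined by colons, so 4 cores on each of the 3 machines gives the 12 CPUs the job requested. This is a hypothetical Python helper for illustration only, because RSM builds the real command itself. The flags are limited to those visible in the logs and in the ANSYS usage text they print, and the job folder in the example is made up.

```python
"""Sketch: rebuild the distributed solver command line seen in this thread's RSM job logs."""

# MPI choices listed in the ANSYS usage text printed in the logs: [-mpi intelmpi|ibmmpi|msmpi]
VALID_MPI = {"intelmpi", "ibmmpi", "msmpi"}


def machines_arg(cores_per_host: dict[str, int]) -> str:
    """Format {"maincad": 4, ...} as "maincad:4:cluster01:4:..." (dict order is kept)."""
    return ":".join(f"{host}:{cores}" for host, cores in cores_per_host.items())


def solver_args(exe: str, mpi: str, cores_per_host: dict[str, int], workdir: str) -> list[str]:
    """Return the solver argument list (hypothetical helper, not an RSM API)."""
    if mpi not in VALID_MPI:
        raise ValueError(f"unsupported MPI {mpi!r}; expected one of {sorted(VALID_MPI)}")
    return [
        exe, "-b", "nolist", "-s", "noread", "-p", "ansys",
        "-mpi", mpi,  # the value added via Additional Command Line Arguments
        "-i", "remote.dat", "-o", "solve.out",
        "-dis", "-machines", machines_arg(cores_per_host),
        "-dir", workdir,  # a list keeps a path with spaces as one argument
    ]


if __name__ == "__main__":
    hosts = {"maincad": 4, "cluster01": 4, "cluster02": 4}
    print(machines_arg(hosts), "-> total cores:", sum(hosts.values()))
    # Hypothetical job folder on the shared staging directory.
    print(solver_args("ANSYS195.exe", "ibmmpi", hosts, r"\\maincad\RSMStaging\example"))
```

Running it prints maincad:4:cluster01:4:cluster02:4 -> total cores: 12, which matches the RSM_MACHINES and RSM_HPC_CORES values in the logs.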

Thank you,

Jake

-

October 7, 2019 at 9:29 pm

kolyan007

Subscriber

Thanks for the advice. I had installed MPI services only on the Submit Host. I will add them to the remote solvers and add these command-line arguments.

-

October 7, 2019 at 9:38 pm

kolyan007

Subscriber

What arguments should I use if I use Intel MPI (once MPI is installed on all machines)?

Which IBM MPI installation type would be best for Windows 10: Service Mode, Service Only, or HPC mode?

-

October 7, 2019 at 10:13 pm

kolyan007

Subscriber

Right, IBM MPI is now installed on all machines in Service Mode; I hope that's the correct option. I've also added -mpi ibmmpi as an argument. Telnet to TCP port 8636 from the Submit Host to the solvers works.

Now the solve runs, but I don't see any CPU load on my solvers. Is there anything else to check?

Start running job commands ...

Running on machine : Maincad

Current Directory: c:\RSMStaging\ofwkpry5.3rq

Running command: C:\Program Files\ANSYS Inc\v195\SEC\SolverExecutionController\runsec.bat

Redirecting output to None

Final command arg list :

Running Process

Running Solver : C:\Program Files\ANSYS Inc\v195\ansys\bin\winx64\ANSYS195.exe -b nolist -s noread -p ansys -mpi ibmmpi -i remote.dat -o solve.out -dis -machines maincad:1:cluster01:1:cluster02:1 -dir "c

RSMStaging/ofwkpry5.3rq"

WARNING: No cached password or password provided.

use '-pass' or '-cache' to provide password

*** The AWP_ROOT195 environment variable is set to: C:\Program Files\ANSYS Inc\v195

*** The AWP_LOCALE195 environment variable is set to: en-us

*** The ANSYS195_DIR environment variable is set to: C:\Program Files\ANSYS Inc\v195\ANSYS

*** The ANSYS_SYSDIR environment variable is set to: winx64

*** The ANSYS_SYSDIR32 environment variable is set to: intel

*** The CADOE_DOCDIR195 environment variable is set to: C:\Program Files\ANSYS Inc\v195\CommonFiles\help\en-us\solviewer

*** The CADOE_LIBDIR195 environment variable is set to: C:\Program Files\ANSYS Inc\v195\CommonFiles\Language\en-us

*** The LSTC_LICENSE environment variable is set to: ANSYS

*** The P_SCHEMA environment variable is set to: C:\Program Files\ANSYS Inc\v195\AISOL\CADIntegration\Parasolid\PSchema

*** The TEMP environment variable is set to: C:\Users\User\AppData\Local\Temp

-

October 7, 2019 at 11:25 pm

tsiriaks

Ansys Employee

This looks good (and by the way, Service Mode is the correct choice for IBM MPI).

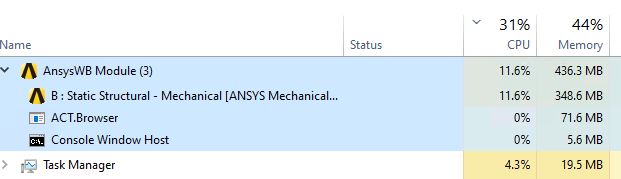

If you open Task Manager on the solving machines, do you see any ANSYS.exe or ANSYS195.exe processes?

-

October 8, 2019 at 1:43 am

-

October 8, 2019 at 2:31 pm

JakeC

Ansys Employee

Please post a screenshot of your Solution properties if you are submitting from Workbench, or a screenshot of your Solve Process Settings and the Advanced tab for the solve process setting you are using.

Thank you,

Jake

-

October 9, 2019 at 5:16 am

kolyan007

Subscriber

Yesterday I experimented with Intel MPI, since I had previously seen some load on the solvers with it. The screenshot of the Solve Process Settings and the Advanced tab is below.

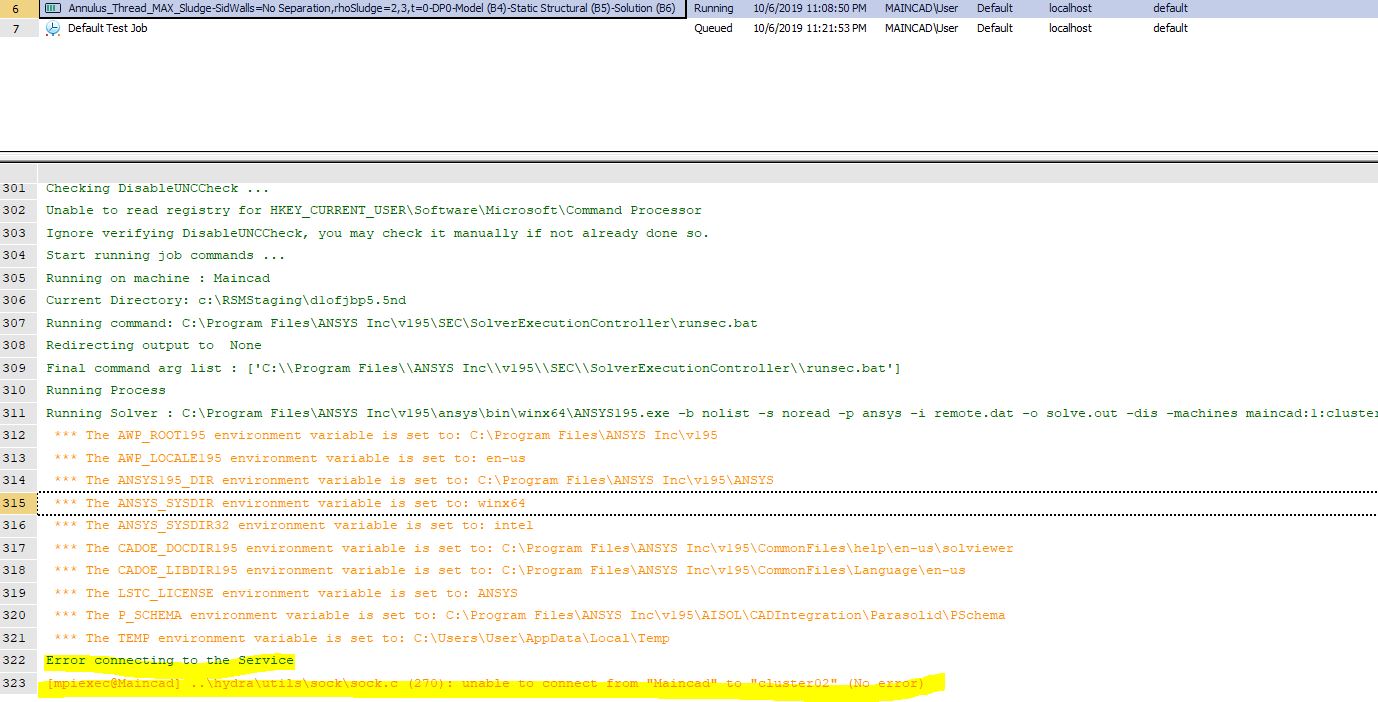

I tried both with and without the -mpi intelmpi argument. The result was the same: the solve started on all machines and the CPU load was 100%, but only for a couple of minutes, and then I got an MPI_Abort error (the full error message is also below).

Note: regarding the message Invalid working directory value= C

RSMStaging/aokqqj0p.5k1: it's weird, because the folder actually exists and is accessible to the solvers. I'm not sure why it has the wrong slashes here.

Also, I have an NVIDIA Quadro GPU in each machine. Is it reasonable to enable GPU acceleration to improve performance?

Error message (omitted):

send of 20 bytes failed.

Command Exit Code: 99

application called MPI_Abort(MPI_COMM_WORLD, 99) - process 0

[mpiexec@Maincad] ..\hydra\utils\sock\sock.c (420): write error (Unknown error)

[mpiexec@Maincad] ..\hydra\utils\launch\launch.c (121): shutdown failed, sock 648, error 10093

ClusterJobs Command Exit Code: 99

Saving exit code file: C:\RSMStaging\aokqqj0p.5k1\exitcode_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsmout

Exit code file: C:\RSMStaging\aokqqj0p.5k1\exitcode_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsmout has been created.

Saving exit code file: C:\RSMStaging\aokqqj0p.5k1\exitcodeCommands_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsmout

Exit code file: C:\RSMStaging\aokqqj0p.5k1\exitcodeCommands_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsmout has been created.

=======SOLVE.OUT FILE======

solve --> *** FATAL ***

solve --> Attempt to run ANSYS in a distributed mode failed.

solve --> The Distributed ANSYS process with MPI Rank ID = 4 is not responding.

solve --> Please refer to the Distributed ANSYS Guide for detailed setup and configuration information.

ClusterJobs Exiting with code: 99

Individual Command Exit Codes are: [99]

Full error log:

RSM Version: 19.5.420.0, Build Date: 30/07/2019 5 7:06 am

RSM File Version: 19.5.0-beta.3+Branch.release-19.5.Sha.9c15e0a3e0a608e8efe8c568e494d2160788d025

RSM Library Version: 19.5.0.0

Job Name: Annulus_Thread_MAX_Sludge-SidWalls=No Separation,rhoSludge=2,3,t=0-DP0-Model (B4)-Static Structural (B5)-Solution (B6)

Type: Mechanical_ANSYS

Client Directory: C:TestProject_ProjectScratchScr6531

Client Machine: Maincad

Queue: Default [localhost, default]

Template: Mechanical_ANSYSJob.xml

Cluster Configuration: localhost [localhost]

Cluster Type: ARC

Custom Keyword: blank

Transfer Option: NativeOS

Staging Directory: C:\RSMStaging

Delete Staging Directory: True

Local Scratch Directory: blank

Platform: Windows

Cluster Submit Options: blank

Normal Inputs: [*.dat,file*.*,*.mac,thermal.build,commands.xml,SecInput.txt]

Cancel Inputs: [file.abt,sec.interrupt]

Excluded Inputs: [-]

Normal Outputs: [*.xml,*.NR*,CAERepOutput.xml,cyclic_map.json,exit.topo,file*.dsub,file*.ldhi,file*.png,file*.r0*,file*.r1*,file*.r2*,file*.r3*,file*.r4*,file*.r5*,file*.r6*,file*.r7*,file*.r8*,file*.r9*,file*.rd*,file*.rst,file.BCS,file.ce,file.cm,file.cnd,file.cnm,file.DSP,file.err,file.gst,file.json,file.nd*,file.nlh,file.nr*,file.PCS,file.rdb,file.rfl,file.spm,file0.BCS,file0.ce,file0.cnd,file0.err,file0.gst,file0.nd*,file0.nlh,file0.nr*,file0.PCS,frequencies_*.out,input.x17,intermediate*.topo,Mode_mapping_*.txt,NotSupportedElems.dat,ObjectiveHistory.out,post.out,PostImage*.png,record.txt,solve*.out,topo.err,top

ut,vars.topo,SecDebugLog.txt,secStart.log,sec.validation.executed,sec.envvarvalidation.executed,sec.failure]

Failure Outputs: [*.xml,*.NR*,CAERepOutput.xml,cyclic_map.json,exit.topo,file*.dsub,file*.ldhi,file*.png,file*.r0*,file*.r1*,file*.r2*,file*.r3*,file*.r4*,file*.r5*,file*.r6*,file*.r7*,file*.r8*,file*.r9*,file*.rd*,file*.rst,file.BCS,file.ce,file.cm,file.cnd,file.cnm,file.DSP,file.err,file.gst,file.json,file.nd*,file.nlh,file.nr*,file.PCS,file.rdb,file.rfl,file.spm,file0.BCS,file0.ce,file0.cnd,file0.err,file0.gst,file0.nd*,file0.nlh,file0.nr*,file0.PCS,frequencies_*.out,input.x17,intermediate*.topo,Mode_mapping_*.txt,NotSupportedElems.dat,ObjectiveHistory.out,post.out,PostImage*.png,record.txt,solve*.out,topo.err,top

ut,vars.topo,SecDebugLog.txt,sec.solverexitcode,secStart.log,sec.failure,sec.envvarvalidation.executed]

Cancel Outputs: [file*.err,solve.out,secStart.log,SecDebugLog.txt]

Excluded Outputs: [-]

Inquire Files:

normal: [*.xml,*.NR*,CAERepOutput.xml,cyclic_map.json,exit.topo,file*.dsub,file*.ldhi,file*.png,file*.r0*,file*.r1*,file*.r2*,file*.r3*,file*.r4*,file*.r5*,file*.r6*,file*.r7*,file*.r8*,file*.r9*,file*.rd*,file*.rst,file.BCS,file.ce,file.cm,file.cnd,file.cnm,file.DSP,file.err,file.gst,file.json,file.nd*,file.nlh,file.nr*,file.PCS,file.rdb,file.rfl,file.spm,file0.BCS,file0.ce,file0.cnd,file0.err,file0.gst,file0.nd*,file0.nlh,file0.nr*,file0.PCS,frequencies_*.out,input.x17,intermediate*.topo,Mode_mapping_*.txt,NotSupportedElems.dat,ObjectiveHistory.out,post.out,PostImage*.png,record.txt,solve*.out,topo.err,top

ut,vars.topo,SecDebugLog.txt,secStart.log,sec.validation.executed,sec.envvarvalidation.executed,sec.failure]

cancel: [file*.err,solve.out,secStart.log,SecDebugLog.txt]

failure: [*.xml,*.NR*,CAERepOutput.xml,cyclic_map.json,exit.topo,file*.dsub,file*.ldhi,file*.png,file*.r0*,file*.r1*,file*.r2*,file*.r3*,file*.r4*,file*.r5*,file*.r6*,file*.r7*,file*.r8*,file*.r9*,file*.rd*,file*.rst,file.BCS,file.ce,file.cm,file.cnd,file.cnm,file.DSP,file.err,file.gst,file.json,file.nd*,file.nlh,file.nr*,file.PCS,file.rdb,file.rfl,file.spm,file0.BCS,file0.ce,file0.cnd,file0.err,file0.gst,file0.nd*,file0.nlh,file0.nr*,file0.PCS,frequencies_*.out,input.x17,intermediate*.topo,Mode_mapping_*.txt,NotSupportedElems.dat,ObjectiveHistory.out,post.out,PostImage*.png,record.txt,solve*.out,topo.err,top

ut,vars.topo,SecDebugLog.txt,sec.solverexitcode,secStart.log,sec.failure,sec.envvarvalidation.executed]

RemotePostInformation: [RemotePostInformation.txt]

SolutionInformation: [solve.out,file.gst,file.nlh,file0.gst,file0.nlh,file.cnd]

PostDuringSolve: [file.rcn,file.redm,file.rfl,file.rfrq,file.rmg,file.rdsp,file.rsx,file.rst,file.rth,solve.out,file.gst,file.nlh,file0.gst,file0.nlh,file.cnd]

transcript: [solve.out,monitor.json]

Submission in progress...

Created storage directory C:\RSMStaging\aokqqj0p.5k1

Runtime Settings:

Job Owner: MAINCAD\User

Submit Time: Tuesday, 8 October 2019 11:40 pm

Directory: C:\RSMStaging\aokqqj0p.5k1

Uploading file: C:TestProject_ProjectScratchScr6531ds.dat

Uploading file: C:TestProject_ProjectScratchScr6531remote.dat

Uploading file: C:TestProject_ProjectScratchScr6531commands.xml

Uploading file: C:TestProject_ProjectScratchScr6531SecInput.txt

39898.74 KB, .08 sec (498807.21 KB/sec)

Submission in progress...

JobType is: Mechanical_ANSYS

Final command platform: Windows

RSM_PYTHON_HOME=C:\Program Files\ANSYS Inc\v195\commonfiles\CPython\2_7_15\winx64\Release\python

RSM_HPC_JOBNAME=Mechanical

Distributed mode requested: True

RSM_HPC_DISTRIBUTED=TRUE

Running 1 commands

Job working directory: C:\RSMStaging\aokqqj0p.5k1

Number of CPU requested: 12

AWP_ROOT195=C:\Program Files\ANSYS Inc\v195

Checking queue default exists ...

JobId was parsed as: 4

Job submission was successful.

Job is running on hostname Maincad

Job user from this host: User

Starting directory: C:\RSMStaging\aokqqj0p.5k1

Reading control file C:\RSMStaging\aokqqj0p.5k1\control_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsm ....

Correct Cluster verified

Cluster Type: ARC

Underlying Cluster: ARC

RSM_CLUSTER_TYPE = ARC

Compute Server is running on MAINCAD

Reading commands and arguments...

Command 1: %AWP_ROOT195%\SEC\SolverExecutionController\runsec.bat, arguments: , redirectFile: None

Running from shared staging directory ...

RSM_USE_LOCAL_SCRATCH = False

RSM_LOCAL_SCRATCH_DIRECTORY =

RSM_LOCAL_SCRATCH_PARTIAL_UNC_PATH =

Cluster Shared Directory: C:\RSMStaging\aokqqj0p.5k1

RSM_SHARE_STAGING_DIRECTORY = C:\RSMStaging\aokqqj0p.5k1

Job file clean up: True

Use SSH on Linux cluster nodes: False

RSM_USE_SSH_LINUX = False

LivelogFile: NOLIVELOGFILE

StdoutLiveLogFile: stdout_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.live

StderrLiveLogFile: stderr_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.live

Reading input files...

*.dat

file*.*

*.mac

thermal.build

commands.xml

SecInput.txt

Reading cancel files...

file.abt

sec.interrupt

Reading output files...

*.xml

*.NR*

CAERepOutput.xml

cyclic_map.json

exit.topo

file*.dsub

file*.ldhi

file*.png

file*.r0*

file*.r1*

file*.r2*

file*.r3*

file*.r4*

file*.r5*

file*.r6*

file*.r7*

file*.r8*

file*.r9*

file*.rd*

file*.rst

file.BCS

file.ce

file.cm

file.cnd

file.cnm

file.DSP

file.err

file.gst

file.json

file.nd*

file.nlh

file.nr*

file.PCS

file.rdb

file.rfl

file.spm

file0.BCS

file0.ce

file0.cnd

file0.err

file0.gst

file0.nd*

file0.nlh

file0.nr*

file0.PCS

frequencies_*.out

input.x17

intermediate*.topo

Mode_mapping_*.txt

NotSupportedElems.dat

ObjectiveHistory.out

post.out

PostImage*.png

record.txt

solve*.out

topo.err

vars.topo

SecDebugLog.txt

secStart.log

sec.validation.executed

sec.envvarvalidation.executed

sec.failure

*.xml

*.NR*

CAERepOutput.xml

cyclic_map.json

exit.topo

file*.dsub

file*.ldhi

file*.png

file*.r0*

file*.r1*

file*.r2*

file*.r3*

file*.r4*

file*.r5*

file*.r6*

file*.r7*

file*.r8*

file*.r9*

file*.rd*

file*.rst

file.BCS

file.ce

file.cm

file.cnd

file.cnm

file.DSP

file.err

file.gst

file.json

file.nd*

file.nlh

file.nr*

file.PCS

file.rdb

file.rfl

file.spm

file0.BCS

file0.ce

file0.cnd

file0.err

file0.gst

file0.nd*

file0.nlh

file0.nr*

file0.PCS

frequencies_*.out

input.x17

intermediate*.topo

Mode_mapping_*.txt

NotSupportedElems.dat

ObjectiveHistory.out

post.out

PostImage*.png

record.txt

solve*.out

topo.err

vars.topo

SecDebugLog.txt

sec.solverexitcode

secStart.log

sec.failure

sec.envvarvalidation.executed

Reading exclude files...

stdout_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsmout

stderr_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsmout

control_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsm

hosts.dat

exitcode_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsmout

exitcodeCommands_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsmout

stdout_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.live

stderr_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.live

ClusterJobCustomization.xml

ClusterJobs.py

clusterjob_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.sh

clusterjob_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.bat

inquire.request

inquire.confirm

request.upload.rsm

request.download.rsm

wait.download.rsm

scratch.job.rsm

volatile.job.rsm

restart.xml

cancel_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsmout

liveLogLastPositions_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsm

stdout_782e78b5-2c00-45fa-b7ac-6976aa49c2cf_kill.rsmout

stderr_782e78b5-2c00-45fa-b7ac-6976aa49c2cf_kill.rsmout

sec.interrupt

stdout_782e78b5-2c00-45fa-b7ac-6976aa49c2cf_*.rsmout

stderr_782e78b5-2c00-45fa-b7ac-6976aa49c2cf_*.rsmout

stdout_task_*.live

stderr_task_*.live

control_task_*.rsm

stdout_task_*.rsmout

stderr_task_*.rsmout

exitcode_task_*.rsmout

exitcodeCommands_task_*.rsmout

file.abt

Reading environment variables...

RSM_IRON_PYTHON_HOME = C:\Program Files\ANSYS Inc\v195\commonfiles\IronPython

RSM_TASK_WORKING_DIRECTORY = C:\RSMStaging\aokqqj0p.5k1

RSM_USE_SSH_LINUX = False

RSM_QUEUE_NAME = default

RSM_CONFIGUREDQUEUE_NAME = Default

RSM_COMPUTE_SERVER_MACHINE_NAME = Maincad

RSM_HPC_JOBNAME = Mechanical

RSM_HPC_DISPLAYNAME = Annulus_Thread_MAX_Sludge-SidWalls=No Separation,rhoSludge=2,3,t=0-DP0-Model (B4)-Static Structural (B5)-Solution (B6)

RSM_HPC_CORES = 12

RSM_HPC_DISTRIBUTED = TRUE

RSM_HPC_NODE_EXCLUSIVE = FALSE

RSM_HPC_QUEUE = default

RSM_HPC_USER = MAINCAD\User

RSM_HPC_WORKDIR = C:\RSMStaging\aokqqj0p.5k1

RSM_HPC_JOBTYPE = Mechanical_ANSYS

RSM_HPC_ANSYS_LOCAL_INSTALL_DIRECTORY = C:\Program Files\ANSYS Inc\v195

RSM_HPC_VERSION = 195

RSM_HPC_STAGING = C:\RSMStaging\aokqqj0p.5k1

RSM_HPC_LOCAL_PLATFORM = Windows

RSM_HPC_CLUSTER_TARGET_PLATFORM = Windows

RSM_HPC_STDOUTFILE = stdout_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsmout

RSM_HPC_STDERRFILE = stderr_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsmout

RSM_HPC_STDOUTLIVE = stdout_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.live

RSM_HPC_STDERRLIVE = stderr_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.live

RSM_HPC_SCRIPTS_DIRECTORY_LOCAL = C:\Program Files\ANSYS Inc\v195\RSM\Config\scripts

RSM_HPC_SCRIPTS_DIRECTORY = C:\Program Files\ANSYS Inc\v195\RSM\Config\scripts

RSM_HPC_SUBMITHOST = localhost

RSM_HPC_STORAGEID =

a7ab7b67-dc3d-44cb-a665-813ffa216eee Mechanical=LocalOS#localhost$C:\RSMStaging\aokqqj0p.5k1 Tuesday, October 08, 2019 10:40:05.011 AM True

RSM_HPC_PLATFORMSTORAGEID = C:\RSMStaging\aokqqj0p.5k1

RSM_HPC_NATIVEOPTIONS =

ARC_ROOT = C:\Program Files\ANSYS Inc\v195\RSM\Config\scripts\..\..\ARC

RSM_HPC_KEYWORD = ARC

RSM_PYTHON_LOCALE = en-us

Reading AWP_ROOT environment variable name ...

AWP_ROOT environment variable name is: AWP_ROOT195

Reading Low Disk Space Warning Limit ...

Low disk space warning threshold set at: 2.0GiB

Reading File identifier ...

File identifier found as: 782e78b5-2c00-45fa-b7ac-6976aa49c2cf

Done reading control file.

RSM_AWP_ROOT_NAME = AWP_ROOT195

AWP_ROOT195 install directory: C:\Program Files\ANSYS Inc\v195

RSM_MACHINES = maincad:4:cluster01:4:cluster02:4

Number of nodes assigned for current job = 3

Machine list:

Checking DisableUNCCheck ...

Unable to read registry for HKEY_CURRENT_USER\Software\Microsoft\Command Processor

Ignore verifying DisableUNCCheck, you may check it manually if not already done so.

Start running job commands ...

Running on machine : Maincad

Current Directory: C:\RSMStaging\aokqqj0p.5k1

Running command: C:\Program Files\ANSYS Inc\v195\SEC\SolverExecutionController\runsec.bat

Redirecting output to None

Final command arg list :

Running Process

Running Solver : C:\Program Files\ANSYS Inc\v195\ansys\bin\winx64\ANSYS195.exe -b nolist -s noread -acc nvidia -na 1 -p ansys -mpi intelmpi -i remote.dat -o solve.out -dis -machines maincad:4:cluster01:4:cluster02:4 -dir "C

RSMStaging/aokqqj0p.5k1"

*** The AWP_ROOT195 environment variable is set to: C:\Program Files\ANSYS Inc\v195

*** The AWP_LOCALE195 environment variable is set to: en-us

*** The ANSYS195_DIR environment variable is set to: C:\Program Files\ANSYS Inc\v195\ANSYS

*** The ANSYS_SYSDIR environment variable is set to: winx64

*** The ANSYS_SYSDIR32 environment variable is set to: intel

*** The CADOE_DOCDIR195 environment variable is set to: C:\Program Files\ANSYS Inc\v195\CommonFiles\help\en-us\solviewer

*** The CADOE_LIBDIR195 environment variable is set to: C:\Program Files\ANSYS Inc\v195\CommonFiles\Language\en-us

*** The LSTC_LICENSE environment variable is set to: ANSYS

*** The P_SCHEMA environment variable is set to: C:\Program Files\ANSYS Inc\v195\AISOL\CADIntegration\Parasolid\PSchema

*** The TEMP environment variable is set to: C:\Users\User\AppData\Local\Temp

*** FATAL ***

Invalid working directory value= C

RSMStaging/aokqqj0p.5k1

(specified working directory does not exist)

*** NOTE ***

USAGE: C:\Program Files\ANSYS Inc\v195\ANSYS\bin\winx64\ANSYS.EXE

[-d device name] [-j job name]

[-b list|nolist] [-m scratch memory(mb)]

[-s read|noread] [-g] [-db database(mb)]

[-p product] [-l language]

[-dyn] [-np #] [-mfm]

[-dvt] [-dis] [-machines list]

[-i inputfile] [-o outputfile]

[-ser port] [-scport port]

[-scname couplingname] [-schost hostname]

[-smp] [-mpi intelmpi|ibmmpi|msmpi]

[-dir working_directory ]

*** FATAL ***

Invalid working directory value= C

RSMStaging/aokqqj0p.5k1

(specified working directory does not exist)

*** NOTE ***

USAGE: C:\Program Files\ANSYS Inc\v195\ANSYS\bin\winx64\ANSYS.EXE

[-d device name] [-j job name]

[-b list|nolist] [-m scratch memory(mb)]

[-s read|noread] [-g] [-db database(mb)]

[-p product] [-l language]

[-dyn] [-np #] [-mfm]

[-dvt] [-dis] [-machines list]

[-i inputfile] [-o outputfile]

[-ser port] [-scport port]

[-scname couplingname] [-schost hostname]

[-smp] [-mpi intelmpi|ibmmpi|msmpi]

[-dir working_directory ]

[-acc nvidia] [-na #]

*** FATAL ***

Invalid working directory value= C

RSMStaging/aokqqj0p.5k1

(specified working directory does not exist)

*** NOTE ***

USAGE: C:\Program Files\ANSYS Inc\v195\ANSYS\bin\winx64\ANSYS.EXE

[-d device name] [-j job name]

[-b list|nolist] [-m scratch memory(mb)]

[-s read|noread] [-g] [-db database(mb)]

[-p product] [-l language]

[-dyn] [-np #] [-mfm]

[-dvt] [-dis] [-machines list]

[-i inputfile] [-o outputfile]

[-ser port] [-scport port]

[-scname couplingname] [-schost hostname]

[-smp] [-mpi intelmpi|ibmmpi|msmpi]

[-dir working_directory ]

[-acc nvidia] [-na #]

*** FATAL ***

Invalid working directory value= C

RSMStaging/aokqqj0p.5k1

(specified working directory does not exist)

*** NOTE ***

USAGE: C:\Program Files\ANSYS Inc\v195\ANSYS\bin\winx64\ANSYS.EXE

[-d device name] [-j job name]

[-b list|nolist] [-m scratch memory(mb)]

[-s read|noread] [-g] [-db database(mb)]

[-p product] [-l language]

[-dyn] [-np #] [-mfm]

[-dvt] [-dis] [-machines list]

[-i inputfile] [-o outputfile]

[-ser port] [-scport port]

[-scname couplingname] [-schost hostname]

[-smp] [-mpi intelmpi|ibmmpi|msmpi]

[-dir working_directory ]

[-acc nvidia] [-na #]

*** FATAL ***

Invalid working directory value= C

RSMStaging/aokqqj0p.5k1

(specified working directory does not exist)

*** NOTE ***

USAGE: C:\Program Files\ANSYS Inc\v195\ANSYS\bin\winx64\ANSYS.EXE

[-d device name] [-j job name]

[-b list|nolist] [-m scratch memory(mb)]

[-s read|noread] [-g] [-db database(mb)]

[-p product] [-l language]

[-dyn] [-np #] [-mfm]

[-dvt] [-dis] [-machines list]

[-i inputfile] [-o outputfile]

[-ser port] [-scport port]

[-scname couplingname] [-schost hostname]

[-smp] [-mpi intelmpi|ibmmpi|msmpi]

[-dir working_directory ]

[-acc nvidia] [-na #]

*** FATAL ***

Invalid working directory value= C

RSMStaging/aokqqj0p.5k1

(specified working directory does not exist)

*** NOTE ***

USAGE: C:\Program Files\ANSYS Inc\v195\ANSYS\bin\winx64\ANSYS.EXE

[-d device name] [-j job name]

[-b list|nolist] [-m scratch memory(mb)]

[-s read|noread] [-g] [-db database(mb)]

[-p product] [-l language]

[-dyn] [-np #] [-mfm]

[-dvt] [-dis] [-machines list]

[-i inputfile] [-o outputfile]

[-ser port] [-scport port]

[-scname couplingname] [-schost hostname]

[-smp] [-mpi intelmpi|ibmmpi|msmpi]

[-dir working_directory ]

[-acc nvidia] [-na #]

*** FATAL ***

Invalid working directory value= C

RSMStaging/aokqqj0p.5k1

(specified working directory does not exist)

*** NOTE ***

USAGE: C:\Program Files\ANSYS Inc\v195\ANSYS\bin\winx64\ANSYS.EXE

[-d device name] [-j job name]

[-b list|nolist] [-m scratch memory(mb)]

[-s read|noread] [-g] [-db database(mb)]

[-p product] [-l language]

[-dyn] [-np #] [-mfm]

[-dvt] [-dis] [-machines list]

[-i inputfile] [-o outputfile]

[-ser port] [-scport port]

[-scname couplingname] [-schost hostname]

[-smp] [-mpi intelmpi|ibmmpi|msmpi]

[-dir working_directory ]

[-acc nvidia] [-na #]

*** FATAL ***

Invalid working directory value= C

RSMStaging/aokqqj0p.5k1

(specified working directory does not exist)

*** NOTE ***

USAGE: C:\Program Files\ANSYS Inc\v195\ANSYS\bin\winx64\ANSYS.EXE

[-d device name] [-j job name]

[-b list|nolist] [-m scratch memory(mb)]

[-s read|noread] [-g] [-db database(mb)]

[-p product] [-l language]

[-dyn] [-np #] [-mfm]

[-dvt] [-dis] [-machines list]

[-i inputfile] [-o outputfile]

[-ser port] [-scport port]

[-scname couplingname] [-schost hostname]

[-smp] [-mpi intelmpi|ibmmpi|msmpi]

[-dir working_directory ]

[-acc nvidia] [-na #]

send of 20 bytes failed.

Command Exit Code: 99

application called MPI_Abort(MPI_COMM_WORLD, 99) - process 0

[mpiexec@Maincad] ..\hydra\utils\sock\sock.c (420): write error (Unknown error)

[mpiexec@Maincad] ..\hydra\utils\launch\launch.c (121): shutdown failed, sock 648, error 10093

ClusterJobs Command Exit Code: 99

Saving exit code file: C:\RSMStaging\aokqqj0p.5k1\exitcode_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsmout

Exit code file: C:\RSMStaging\aokqqj0p.5k1\exitcode_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsmout has been created.

Saving exit code file: C:\RSMStaging\aokqqj0p.5k1\exitcodeCommands_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsmout

Exit code file: C:\RSMStaging\aokqqj0p.5k1\exitcodeCommands_782e78b5-2c00-45fa-b7ac-6976aa49c2cf.rsmout has been created.

=======SOLVE.OUT FILE======

solve --> *** FATAL ***

solve --> Attempt to run ANSYS in a distributed mode failed.

solve --> The Distributed ANSYS process with MPI Rank ID = 4 is not responding.

solve --> Please refer to the Distributed ANSYS Guide for detailed setup and configuration information.

ClusterJobs Exiting with code: 99

Individual Command Exit Codes are: [99]

-

October 9, 2019 at 6:26 pm

JakeC

Ansys Employee

Hi,

If you are trying to distribute across multiple machines, your staging directory can't be on a local drive such as "c:".

You will need to share that directory and, in the staging directory setting, use the UNC path to that shared directory, e.g.:

\\maincad\RSMStaging

Please be sure to set the RSMStaging share security to be open to all users and machines.
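To see why a `C:` staging path fails across machines while `\\maincad\RSMStaging` works, Python's `pathlib.PureWindowsPath` can classify a path by its drive: for a UNC path the drive is the `\\server\share` pair, which every node resolves to the same share, whereas `C:` is each machine's own disk. This is a minimal sketch; the helper name `is_unc_share` is hypothetical, and it only inspects the string, without checking that the share exists or that its permissions are open.

```python
from pathlib import PureWindowsPath


def is_unc_share(path: str) -> bool:
    """True if the path's drive is a UNC server/share pair rather than a local drive letter."""
    drive = PureWindowsPath(path).drive
    return drive.startswith("\\\\")


for candidate in (r"C:\RSMStaging", r"\\maincad\RSMStaging"):
    print(candidate, is_unc_share(candidate))

# RSM creates per-job folders under the share, e.g. the 4w4eooau.l0x job in this thread.
job_dir = PureWindowsPath(r"\\maincad\RSMStaging") / "4w4eooau.l0x"
print(job_dir)
```

`PureWindowsPath` does no filesystem access, so the check behaves the same on any OS; it is a string-level sanity check only.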

Thank you,

Jake

-

October 10, 2019 at 2:13 am

kolyan007

Subscriber

That shared folder exists on maincad, and everyone has full access to it.

>If you are trying to distribute across multiple machines your staging directory can't be a local drive "c:"

Does this also apply to the submit host? I.e., in the RSM configuration on the submit host, do I need to use OS file transfer and enter the UNC path to the shared folder, \\maincad\RSMStaging? Like below:

The remote solvers are already using it.

-

October 11, 2019 at 5:14 am

kolyan007

Subscriber

The localhost HPC Resource has been changed to use operating system file transfer to the cluster staging directory, as in the screenshot above, with the UNC folder \\maincad\RSMStaging.

The solve process failed with the error message below:

1 11/10/2019 1:58 0 pm RSM Version: 19.5.420.0, Build Date: 30/07/2019 5 7:06 am

2 11/10/2019 1:58 0 pm RSM File Version: 19.5.0-beta.3+Branch.release-19.5.Sha.9c15e0a3e0a608e8efe8c568e494d2160788d025

3 11/10/2019 1:58 0 pm RSM Library Version: 19.5.0.0

4 11/10/2019 1:58 0 pm Job Name: Annulus_Thread_MAX_Sludge-SidWalls=No Separation,rhoSludge=2,3,t=0-DP0-Model (B4)-Static Structural (B5)-Solution (B6)

5 11/10/2019 1:58 0 pm Type: Mechanical_ANSYS

6 11/10/2019 1:58 0 pm Client Directory: C:TestProject - Copy - Copy_ProjectScratchScr771B

7 11/10/2019 1:58 0 pm Client Machine: Maincad

8 11/10/2019 1:58 0 pm Queue: Default [localhost, default]

9 11/10/2019 1:58 0 pm Template: Mechanical_ANSYSJob.xml

10 11/10/2019 1:58 0 pm Cluster Configuration: localhost [localhost]

11 11/10/2019 1:58 0 pm Cluster Type: ARC

12 11/10/2019 1:58 0 pm Custom Keyword: blank

13 11/10/2019 1:58 0 pm Transfer Option: NativeOS

14 11/10/2019 1:58 0 pm Staging Directory: \\MainCAD\RSMStaging

15 11/10/2019 1:58 0 pm Delete Staging Directory: True

16 11/10/2019 1:58 0 pm Local Scratch Directory: blank

17 11/10/2019 1:58 0 pm Platform: Windows

18 11/10/2019 1:58 0 pm Cluster Submit Options: blank

19 11/10/2019 1:58 0 pm Normal Inputs: [*.dat,file*.*,*.mac,thermal.build,commands.xml,SecInput.txt]

20 11/10/2019 1:58 0 pm Cancel Inputs: [file.abt,sec.interrupt]

21 11/10/2019 1:58 0 pm Excluded Inputs: [-]

22 11/10/2019 1:58 0 pm Normal Outputs: [*.xml,*.NR*,CAERepOutput.xml,cyclic_map.json,exit.topo,file*.dsub,file*.ldhi,file*.png,file*.r0*,file*.r1*,file*.r2*,file*.r3*,file*.r4*,file*.r5*,file*.r6*,file*.r7*,file*.r8*,file*.r9*,file*.rd*,file*.rst,file.BCS,file.ce,file.cm,file.cnd,file.cnm,file.DSP,file.err,file.gst,file.json,file.nd*,file.nlh,file.nr*,file.PCS,file.rdb,file.rfl,file.spm,file0.BCS,file0.ce,file0.cnd,file0.err,file0.gst,file0.nd*,file0.nlh,file0.nr*,file0.PCS,frequencies_*.out,input.x17,intermediate*.topo,Mode_mapping_*.txt,NotSupportedElems.dat,ObjectiveHistory.out,post.out,PostImage*.png,record.txt,solve*.out,topo.err,top ut,vars.topo,SecDebugLog.txt,secStart.log,sec.validation.executed,sec.envvarvalidation.executed,sec.failure]

23 11/10/2019 1:58 0 pm Failure Outputs: [*.xml,*.NR*,CAERepOutput.xml,cyclic_map.json,exit.topo,file*.dsub,file*.ldhi,file*.png,file*.r0*,file*.r1*,file*.r2*,file*.r3*,file*.r4*,file*.r5*,file*.r6*,file*.r7*,file*.r8*,file*.r9*,file*.rd*,file*.rst,file.BCS,file.ce,file.cm,file.cnd,file.cnm,file.DSP,file.err,file.gst,file.json,file.nd*,file.nlh,file.nr*,file.PCS,file.rdb,file.rfl,file.spm,file0.BCS,file0.ce,file0.cnd,file0.err,file0.gst,file0.nd*,file0.nlh,file0.nr*,file0.PCS,frequencies_*.out,input.x17,intermediate*.topo,Mode_mapping_*.txt,NotSupportedElems.dat,ObjectiveHistory.out,post.out,PostImage*.png,record.txt,solve*.out,topo.err,top ut,vars.topo,SecDebugLog.txt,sec.solverexitcode,secStart.log,sec.failure,sec.envvarvalidation.executed]

24 11/10/2019 1:58 0 pm Cancel Outputs: [file*.err,solve.out,secStart.log,SecDebugLog.txt]

25 11/10/2019 1:58 0 pm Excluded Outputs: [-]

26 11/10/2019 1:58 0 pm Inquire Files:

27 11/10/2019 1:58 0 pm normal: [*.xml,*.NR*,CAERepOutput.xml,cyclic_map.json,exit.topo,file*.dsub,file*.ldhi,file*.png,file*.r0*,file*.r1*,file*.r2*,file*.r3*,file*.r4*,file*.r5*,file*.r6*,file*.r7*,file*.r8*,file*.r9*,file*.rd*,file*.rst,file.BCS,file.ce,file.cm,file.cnd,file.cnm,file.DSP,file.err,file.gst,file.json,file.nd*,file.nlh,file.nr*,file.PCS,file.rdb,file.rfl,file.spm,file0.BCS,file0.ce,file0.cnd,file0.err,file0.gst,file0.nd*,file0.nlh,file0.nr*,file0.PCS,frequencies_*.out,input.x17,intermediate*.topo,Mode_mapping_*.txt,NotSupportedElems.dat,ObjectiveHistory.out,post.out,PostImage*.png,record.txt,solve*.out,topo.err,top ut,vars.topo,SecDebugLog.txt,secStart.log,sec.validation.executed,sec.envvarvalidation.executed,sec.failure]

28 11/10/2019 1:58 0 pm cancel: [file*.err,solve.out,secStart.log,SecDebugLog.txt]

29 11/10/2019 1:58 0 pm failure: [*.xml,*.NR*,CAERepOutput.xml,cyclic_map.json,exit.topo,file*.dsub,file*.ldhi,file*.png,file*.r0*,file*.r1*,file*.r2*,file*.r3*,file*.r4*,file*.r5*,file*.r6*,file*.r7*,file*.r8*,file*.r9*,file*.rd*,file*.rst,file.BCS,file.ce,file.cm,file.cnd,file.cnm,file.DSP,file.err,file.gst,file.json,file.nd*,file.nlh,file.nr*,file.PCS,file.rdb,file.rfl,file.spm,file0.BCS,file0.ce,file0.cnd,file0.err,file0.gst,file0.nd*,file0.nlh,file0.nr*,file0.PCS,frequencies_*.out,input.x17,intermediate*.topo,Mode_mapping_*.txt,NotSupportedElems.dat,ObjectiveHistory.out,post.out,PostImage*.png,record.txt,solve*.out,topo.err,top ut,vars.topo,SecDebugLog.txt,sec.solverexitcode,secStart.log,sec.failure,sec.envvarvalidation.executed]

30 11/10/2019 1:58 0 pm RemotePostInformation: [RemotePostInformation.txt]

31 11/10/2019 1:58 0 pm SolutionInformation: [solve.out,file.gst,file.nlh,file0.gst,file0.nlh,file.cnd]

32 11/10/2019 1:58 0 pm PostDuringSolve: [file.rcn,file.redm,file.rfl,file.rfrq,file.rmg,file.rdsp,file.rsx,file.rst,file.rth,solve.out,file.gst,file.nlh,file0.gst,file0.nlh,file.cnd]

33 11/10/2019 1:58 0 pm transcript: [solve.out,monitor.json]

34 11/10/2019 1:58 0 pm Submission in progress...

35 11/10/2019 1:58 0 pm Created storage directory \\MainCAD\RSMStaging\4w4eooau.l0x

36 11/10/2019 1:58 0 pm Runtime Settings:

37 11/10/2019 1:58 0 pm Job Owner: MAINCAD\User

38 11/10/2019 1:58 0 pm Submit Time: Friday, 11 October 2019 1:58 pm

39 11/10/2019 1:58 0 pm Directory: \\MainCAD\RSMStaging\4w4eooau.l0x

40 11/10/2019 1:58 0 pm File Transfer Package: Upload to localhost:0

41 11/10/2019 1:58 0 pm Name: RSM_Upload_During_Submit

42 11/10/2019 1:58 0 pm Local Directory: C:TestProject - Copy - Copy_ProjectScratchScr771B

43 11/10/2019 1:58 0 pm Storage Path: \\MainCAD\RSMStaging\4w4eooau.l0x

44 11/10/2019 1:58 0 pm Storage Location:

45 11/10/2019 1:58 0 pm Files: [*.dat,file*.*,*.mac,thermal.build,commands.xml,SecInput.txt]

46 11/10/2019 1:58 0 pm Exclude: [-]

47 11/10/2019 1:58 0 pm Storage contents:

48 11/10/2019 1:58 0 pm Uploading file: C:TestProject - Copy - Copy_ProjectScratchScr771Bds.dat

49 11/10/2019 1:58 0 pm Copying C:TestProject - Copy - Copy_ProjectScratchScr771Bds.dat to //MainCAD/RSMStaging/4w4eooau.l0x/ds.dat

50 11/10/2019 1:58 0 pm File size: 39897206 bytes, transferred: 39897206 bytes.

51 11/10/2019 1:58 0 pm Uploading file: C:TestProject - Copy - Copy_ProjectScratchScr771Bremote.dat

52 11/10/2019 1:58 0 pm Copying C:TestProject - Copy - Copy_ProjectScratchScr771Bremote.dat to //MainCAD/RSMStaging/4w4eooau.l0x/remote.dat

53 11/10/2019 1:58 0 pm File size: 179 bytes, transferred: 179 bytes.

54 11/10/2019 1:58 0 pm Uploading file: C:TestProject - Copy - Copy_ProjectScratchScr771Bcommands.xml

55 11/10/2019 1:58 0 pm Copying C:TestProject - Copy - Copy_ProjectScratchScr771Bcommands.xml to //MainCAD/RSMStaging/4w4eooau.l0x/commands.xml

56 11/10/2019 1:58 0 pm File size: 477 bytes, transferred: 477 bytes.

57 11/10/2019 1:58 0 pm Uploading file: C:TestProject - Copy - Copy_ProjectScratchScr771BSecInput.txt

58 11/10/2019 1:58 0 pm Copying C:TestProject - Copy - Copy_ProjectScratchScr771BSecInput.txt to //MainCAD/RSMStaging/4w4eooau.l0x/SecInput.txt

59 11/10/2019 1:58 0 pm File size: 899 bytes, transferred: 899 bytes.

60 11/10/2019 1:58 0 pm Storage contents:

61 11/10/2019 1:58 0 pm ds.dat

62 11/10/2019 1:58 0 pm remote.dat

63 11/10/2019 1:58 0 pm commands.xml

64 11/10/2019 1:58 0 pm SecInput.txt

65 11/10/2019 1:58 0 pm 39898.76 KB, .11 sec (364982.55 KB/sec)

66 11/10/2019 1:58 0 pm Submission in progress...

67 11/10/2019 1:58 0 pm Commands from commands.xml file

68 11/10/2019 1:58 0 pm Behavior code: Ansys.Rsm.ClusterOperations.Behaviors.MechanicalAnsysBehaviors

69 11/10/2019 1:58 0 pm Loading Cluster Customization file C:\Program Files\ANSYS Inc\v195\RSM\Config\xml\ClusterJobCustomization.xml.

70 11/10/2019 1:58 0 pm No customization found for cluster machineName Maincad, type ARC

71 11/10/2019 1:58 0 pm HPC Commands Files Used:

72 11/10/2019 1:58 0 pm hpc_commands_ARC.xml

73 11/10/2019 1:58 0 pm Environment variables:

74 11/10/2019 1:58 0 pm RSM_PYTHON_HOME=C:\Program Files\ANSYS Inc\v195\commonfiles\CPython\2_7_15\winx64\Release\python

75 11/10/2019 1:58 0 pm RSM_IRON_PYTHON_HOME=C:\Program Files\ANSYS Inc\v195\commonfiles\IronPython

76 11/10/2019 1:58 0 pm RSM_PYTHON_EXE=C:\Program Files\ANSYS Inc\v195\commonfiles\CPython\2_7_15\winx64\Release\python\python.exe

77 11/10/2019 1:58 0 pm RSM_TASK_WORKING_DIRECTORY=\\MainCAD\RSMStaging\4w4eooau.l0x

78 11/10/2019 1:58 0 pm RSM_USE_SSH_LINUX=False

79 11/10/2019 1:58 0 pm RSM_QUEUE_NAME=default

80 11/10/2019 1:58 0 pm RSM_CONFIGUREDQUEUE_NAME=Default

81 11/10/2019 1:58 0 pm RSM_COMPUTE_SERVER_MACHINE_NAME=Maincad

82 11/10/2019 1:58 0 pm RSM_HPC_JOBNAME=Mechanical

83 11/10/2019 1:58 0 pm RSM_HPC_DISPLAYNAME=Annulus_Thread_MAX_Sludge-SidWalls=No Separation,rhoSludge=2,3,t=0-DP0-Model (B4)-Static Structural (B5)-Solution (B6)

84 11/10/2019 1:58 0 pm RSM_HPC_CORES=12

85 11/10/2019 1:58 0 pm RSM_HPC_DISTRIBUTED=TRUE

86 11/10/2019 1:58 0 pm RSM_HPC_NODE_EXCLUSIVE=FALSE

87 11/10/2019 1:58 0 pm RSM_HPC_QUEUE=default

88 11/10/2019 1:58 0 pm RSM_HPC_USER=MAINCAD\User

89 11/10/2019 1:58 0 pm RSM_HPC_WORKDIR=\\MainCAD\RSMStaging\4w4eooau.l0x

90 11/10/2019 1:58 0 pm RSM_HPC_JOBTYPE=Mechanical_ANSYS

91 11/10/2019 1:58 0 pm RSM_HPC_ANSYS_LOCAL_INSTALL_DIRECTORY=C:\Program Files\ANSYS Inc\v195

92 11/10/2019 1:58 0 pm RSM_HPC_VERSION=195

93 11/10/2019 1:58 0 pm RSM_HPC_STAGING=\\MainCAD\RSMStaging\4w4eooau.l0x

94 11/10/2019 1:58 0 pm RSM_HPC_LOCAL_PLATFORM=Windows

95 11/10/2019 1:58 0 pm RSM_HPC_CLUSTER_TARGET_PLATFORM=Windows

96 11/10/2019 1:58 0 pm RSM_HPC_STDOUTFILE=stdout_3a6b4620-8d29-4665-88a3-df770a04d30d.rsmout

97 11/10/2019 1:58 0 pm RSM_HPC_STDERRFILE=stderr_3a6b4620-8d29-4665-88a3-df770a04d30d.rsmout

98 11/10/2019 1:58 0 pm RSM_HPC_STDOUTLIVE=stdout_3a6b4620-8d29-4665-88a3-df770a04d30d.live

99 11/10/2019 1:58 0 pm RSM_HPC_STDERRLIVE=stderr_3a6b4620-8d29-4665-88a3-df770a04d30d.live

100 11/10/2019 1:58 0 pm RSM_HPC_SCRIPTS_DIRECTORY_LOCAL=C:\Program Files\ANSYS Inc\v195\RSM\Config\scripts

101 11/10/2019 1:58 0 pm RSM_HPC_SCRIPTS_DIRECTORY=%AWP_ROOT195%\RSM\Config\scripts

102 11/10/2019 1:58 0 pm RSM_HPC_SUBMITHOST=localhost

103 11/10/2019 1:58 0 pm RSM_HPC_STORAGEID=dc1790b9-6290-4019-9b18-38acc7465469 Mechanical=LocalOS#localhost$\\MainCAD\RSMStaging\4w4eooau.l0x Friday, October 11, 2019 12:58 0.393 AM True

104 11/10/2019 1:58 0 pm RSM_HPC_PLATFORMSTORAGEID=\\MainCAD\RSMStaging\4w4eooau.l0x

105 11/10/2019 1:58 0 pm RSM_HPC_NATIVEOPTIONS=

106 11/10/2019 1:58 0 pm ARC_ROOT=C:\Program Files\ANSYS Inc\v195\RSM\Config\scripts\..\..\ARC

107 11/10/2019 1:58 0 pm RSM_HPC_KEYWORD=ARC

108 11/10/2019 1:58 0 pm JobType is: Mechanical_ANSYS

109 11/10/2019 1:58 0 pm Final command platform: Windows

110 11/10/2019 1:58 0 pm RSM_PYTHON_HOME=C:\Program Files\ANSYS Inc\v195\commonfiles\CPython\2_7_15\winx64\Release\python

111 11/10/2019 1:58 0 pm RSM_HPC_JOBNAME=Mechanical

112 11/10/2019 1:58 0 pm Distributed mode requested: True

113 11/10/2019 1:58 0 pm RSM_HPC_DISTRIBUTED=TRUE

114 11/10/2019 1:58 0 pm Running 1 commands

115 11/10/2019 1:58 0 pm Job working directory: \\MainCAD\RSMStaging\4w4eooau.l0x

116 11/10/2019 1:58 0 pm Number of CPU requested: 12

117 11/10/2019 1:58 0 pm AWP_ROOT195=C:\Program Files\ANSYS Inc\v195

118 11/10/2019 1:58 0 pm Checking queue default exists ...

119 11/10/2019 1:58 0 pm External cluster command: queryQueues

120 11/10/2019 1:58 0 pm Cluster command before expansion: %ARC_ROOT%\bin\ArcQueues.exe

121 11/10/2019 1:58 0 pm Final cluster command: C:\Program Files\ANSYS Inc\v195\RSM\Config\scripts\..\..\ARC\bin\ArcQueues.exe

122 11/10/2019 1:58 0 pm Working directory : \\MainCAD\RSMStaging\4w4eooau.l0x

123 11/10/2019 1:58 0 pm Variables:

124 11/10/2019 1:58 0 pm RSM_PYTHON_HOME=C:\Program Files\ANSYS Inc\v195\commonfiles\CPython\2_7_15\winx64\Release\python

125 11/10/2019 1:58 0 pm RSM_IRON_PYTHON_HOME=C:\Program Files\ANSYS Inc\v195\commonfiles\IronPython

126 11/10/2019 1:58 0 pm RSM_PYTHON_EXE=C:\Program Files\ANSYS Inc\v195\commonfiles\CPython\2_7_15\winx64\Release\python\python.exe

127 11/10/2019 1:58 0 pm RSM_TASK_WORKING_DIRECTORY=\\MainCAD\RSMStaging\4w4eooau.l0x

128 11/10/2019 1:58 0 pm RSM_USE_SSH_LINUX=False

129 11/10/2019 1:58 0 pm RSM_QUEUE_NAME=default

130 11/10/2019 1:58 0 pm RSM_CONFIGUREDQUEUE_NAME=Default

131 11/10/2019 1:58 0 pm RSM_COMPUTE_SERVER_MACHINE_NAME=Maincad

132 11/10/2019 1:58 0 pm RSM_HPC_JOBNAME=Mechanical

133 11/10/2019 1:58 0 pm RSM_HPC_DISPLAYNAME=Annulus_Thread_MAX_Sludge-SidWalls=No Separation,rhoSludge=2,3,t=0-DP0-Model (B4)-Static Structural (B5)-Solution (B6)

134 11/10/2019 1:58 0 pm RSM_HPC_CORES=12

135 11/10/2019 1:58 0 pm RSM_HPC_DISTRIBUTED=TRUE

136 11/10/2019 1:58 0 pm RSM_HPC_NODE_EXCLUSIVE=FALSE

137 11/10/2019 1:58 0 pm RSM_HPC_QUEUE=default

138 11/10/2019 1:58 0 pm RSM_HPC_USER=MAINCAD\User

139 11/10/2019 1:58 0 pm RSM_HPC_WORKDIR=\\MainCAD\RSMStaging\4w4eooau.l0x

140 11/10/2019 1:58 0 pm RSM_HPC_JOBTYPE=Mechanical_ANSYS

141 11/10/2019 1:58 0 pm RSM_HPC_ANSYS_LOCAL_INSTALL_DIRECTORY=C:\Program Files\ANSYS Inc\v195

142 11/10/2019 1:58 0 pm RSM_HPC_VERSION=195

143 11/10/2019 1:58 0 pm RSM_HPC_STAGING=\\MainCAD\RSMStaging\4w4eooau.l0x

144 11/10/2019 1:58 0 pm RSM_HPC_LOCAL_PLATFORM=Windows

145 11/10/2019 1:58 0 pm RSM_HPC_CLUSTER_TARGET_PLATFORM=Windows

146 11/10/2019 1:58 0 pm RSM_HPC_STDOUTFILE=stdout_3a6b4620-8d29-4665-88a3-df770a04d30d.rsmout

147 11/10/2019 1:58 0 pm RSM_HPC_STDERRFILE=stderr_3a6b4620-8d29-4665-88a3-df770a04d30d.rsmout

148 11/10/2019 1:58 0 pm RSM_HPC_STDOUTLIVE=stdout_3a6b4620-8d29-4665-88a3-df770a04d30d.live

149 11/10/2019 1:58 0 pm RSM_HPC_STDERRLIVE=stderr_3a6b4620-8d29-4665-88a3-df770a04d30d.live

150 11/10/2019 1:58 0 pm RSM_HPC_SCRIPTS_DIRECTORY_LOCAL=C:\Program Files\ANSYS Inc\v195\RSM\Config\scripts

151 11/10/2019 1:58 0 pm RSM_HPC_SCRIPTS_DIRECTORY=%AWP_ROOT195%\RSM\Config\scripts

152 11/10/2019 1:58 0 pm RSM_HPC_SUBMITHOST=localhost

153 11/10/2019 1:58 0 pm RSM_HPC_STORAGEID=dc1790b9-6290-4019-9b18-38acc7465469 Mechanical=LocalOS#localhost$\\MainCAD\RSMStaging\4w4eooau.l0x Friday, October 11, 2019 12:58 0.393 AM True

154 11/10/2019 1:58 0 pm RSM_HPC_PLATFORMSTORAGEID=\\MainCAD\RSMStaging\4w4eooau.l0x

155 11/10/2019 1:58 0 pm RSM_HPC_NATIVEOPTIONS=

156 11/10/2019 1:58 0 pm ARC_ROOT=C:\Program Files\ANSYS Inc\v195\RSM\Config\scripts\..\..\ARC

157 11/10/2019 1:58 0 pm RSM_HPC_KEYWORD=ARC

158 11/10/2019 1:58 0 pm RSM_HPC_PARSE_MARKER=START

159 11/10/2019 1:58 0 pm

160 11/10/2019 1:58 1 pm ArcMaster process running as NT AUTHORITY\SYSTEM

161 11/10/2019 1:58 1 pm ArcNode process running as NT AUTHORITY\SYSTEM

162 11/10/2019 1:58 1 pm Skipping autostart because Master processes is started as a service.

163 11/10/2019 1:58 1 pm Name Status Priority Start Time End Time Max Jobs Allowed Machines Allowed Users

164 11/10/2019 1:58 1 pm ================================================================================================================================

165 11/10/2019 1:58 1 pm default Active 0 00:00:00 23:59:59 * maincad:cluster01:clust... all

166 11/10/2019 1:58 1 pm local Active 0 00:00:00 23:59:59 * maincad all

167 11/10/2019 1:58 1 pm * Indicates that resources have not been set up. Any resource request will be accepted.

168 11/10/2019 1:58 1 pm External cluster command: checkQueueExists

169 11/10/2019 1:58 1 pm Cluster command before expansion: %RSM_PYTHON_EXE% -B -E "%RSM_HPC_SCRIPTS_DIRECTORY_LOCAL%/arcParsing.py" -queues %RSM_HPC_PARSE_MARKER%

170 11/10/2019 1:58 1 pm Final cluster command: C:\Program Files\ANSYS Inc\v195\commonfiles\CPython\2_7_15\winx64\Release\python\python.exe -B -E "C:\Program Files\ANSYS Inc\v195\RSM\Config\scripts/arcParsing.py" -queues START

171 11/10/2019 1:58 1 pm Working directory : \\MainCAD\RSMStaging\4w4eooau.l0x

172 11/10/2019 1:58 1 pm Variables:

173 11/10/2019 1:58 1 pm RSM_PYTHON_HOME=C:\Program Files\ANSYS Inc\v195\commonfiles\CPython\2_7_15\winx64\Release\python

174 11/10/2019 1:58 1 pm RSM_IRON_PYTHON_HOME=C:\Program Files\ANSYS Inc\v195\commonfiles\IronPython

175 11/10/2019 1:58 1 pm RSM_PYTHON_EXE=C:\Program Files\ANSYS Inc\v195\commonfiles\CPython\2_7_15\winx64\Release\python\python.exe

176 11/10/2019 1:58 1 pm RSM_TASK_WORKING_DIRECTORY=\\MainCAD\RSMStaging\4w4eooau.l0x

177 11/10/2019 1:58 1 pm RSM_USE_SSH_LINUX=False

178 11/10/2019 1:58 1 pm RSM_QUEUE_NAME=default

179 11/10/2019 1:58 1 pm RSM_CONFIGUREDQUEUE_NAME=Default

180 11/10/2019 1:58 1 pm RSM_COMPUTE_SERVER_MACHINE_NAME=Maincad

181 11/10/2019 1:58 1 pm RSM_HPC_JOBNAME=Mechanical

182 11/10/2019 1:58 1 pm RSM_HPC_DISPLAYNAME=Annulus_Thread_MAX_Sludge-SidWalls=No Separation,rhoSludge=2,3,t=0-DP0-Model (B4)-Static Structural (B5)-Solution (B6)

183 11/10/2019 1:58 1 pm RSM_HPC_CORES=12

184 11/10/2019 1:58 1 pm RSM_HPC_DISTRIBUTED=TRUE

185 11/10/2019 1:58 1 pm RSM_HPC_NODE_EXCLUSIVE=FALSE

186 11/10/2019 1:58 1 pm RSM_HPC_QUEUE=default

187 11/10/2019 1:58 1 pm RSM_HPC_USER=MAINCAD\User

188 11/10/2019 1:58 1 pm RSM_HPC_WORKDIR=\\MainCAD\RSMStaging\4w4eooau.l0x

189 11/10/2019 1:58 1 pm RSM_HPC_JOBTYPE=Mechanical_ANSYS

190 11/10/2019 1:58 1 pm RSM_HPC_ANSYS_LOCAL_INSTALL_DIRECTORY=C:\Program Files\ANSYS Inc\v195

191 11/10/2019 1:58 1 pm RSM_HPC_VERSION=195

192 11/10/2019 1:58 1 pm RSM_HPC_STAGING=\\MainCAD\RSMStaging\4w4eooau.l0x

193 11/10/2019 1:58 1 pm RSM_HPC_LOCAL_PLATFORM=Windows

194 11/10/2019 1:58 1 pm RSM_HPC_CLUSTER_TARGET_PLATFORM=Windows

195 11/10/2019 1:58 1 pm RSM_HPC_STDOUTFILE=stdout_3a6b4620-8d29-4665-88a3-df770a04d30d.rsmout

196 11/10/2019 1:58 1 pm RSM_HPC_STDERRFILE=stderr_3a6b4620-8d29-4665-88a3-df770a04d30d.rsmout

197 11/10/2019 1:58 1 pm RSM_HPC_STDOUTLIVE=stdout_3a6b4620-8d29-4665-88a3-df770a04d30d.live

198 11/10/2019 1:58 1 pm RSM_HPC_STDERRLIVE=stderr_3a6b4620-8d29-4665-88a3-df770a04d30d.live

199 11/10/2019 1:58 1 pm RSM_HPC_SCRIPTS_DIRECTORY_LOCAL=C:\Program Files\ANSYS Inc\v195\RSM\Config\scripts

200 11/10/2019 1:58 1 pm RSM_HPC_SCRIPTS_DIRECTORY=%AWP_ROOT195%\RSM\Config\scripts

201 11/10/2019 1:58 1 pm RSM_HPC_SUBMITHOST=localhost

202 11/10/2019 1:58 1 pm RSM_HPC_STORAGEID=dc1790b9-6290-4019-9b18-38acc7465469 Mechanical=LocalOS#localhost$\\MainCAD\RSMStaging\4w4eooau.l0x Friday, October 11, 2019 12:58 0.393 AM True

203 11/10/2019 1:58 1 pm RSM_HPC_PLATFORMSTORAGEID=\\MainCAD\RSMStaging\4w4eooau.l0x

204 11/10/2019 1:58 1 pm RSM_HPC_NATIVEOPTIONS=

205 11/10/2019 1:58 1 pm ARC_ROOT=C:\Program Files\ANSYS Inc\v195\RSM\Config\scripts\..\..\ARC

206 11/10/2019 1:58 1 pm RSM_HPC_KEYWORD=ARC

207 11/10/2019 1:58 1 pm RSM_HPC_PARSE_MARKER=START

208 11/10/2019 1:58 1 pm RSM_HPC_PRIMARY_STDOUT=ArcMaster process running as NT AUTHORITY\SYSTEM

209 11/10/2019 1:58 1 pm ArcNode process running as NT AUTHORITY\SYSTEM

210 11/10/2019 1:58 1 pm Skipping autostart because Master processes is started as a service.

211 11/10/2019 1:58 1 pm Name Status Priority Start Time End Time Max Jobs Allowed Machines Allowed Users

212 11/10/2019 1:58 1 pm ================================================================================================================================

213 11/10/2019 1:58 1 pm default Active 0 00:00:00 23:59:59 * maincad:cluster01:clust... all

214 11/10/2019 1:58 1 pm local Active 0 00:00:00 23:59:59 * maincad all

215 11/10/2019 1:58 1 pm * Indicates that resources have not been set up. Any resource request will be accepted.

216 11/10/2019 1:58 1 pm RSM_HPC_PRIMARY_STDERR=

217 11/10/2019 1:58 1 pm

218 11/10/2019 1:58 1 pm Executing command: %AWP_ROOT195%\SEC\SolverExecutionController\runsec.bat (Ansys Solver)

219 11/10/2019 1:58 1 pm commands.xml file: \\MainCAD\RSMStaging\4w4eooau.l0x\commands.xml

220 11/10/2019 1:58 1 pm

221 11/10/2019 1:58 1 pm

222 11/10/2019 1:58 1 pm

223 11/10/2019 1:58 1 pm true

224 11/10/2019 1:58 1 pm

225 11/10/2019 1:58 1 pm 12

226 11/10/2019 1:58 1 pm

227 11/10/2019 1:58 1 pm

228 11/10/2019 1:58

-

October 11, 2019 at 1:32 pm

JakeC

Ansys Employee

From this log, it appears that running CMD from a UNC path is blocked.

To unblock it, do the following on each machine:

1. Start a registry editor (e.g., regedit.exe).

2. Navigate to the HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Command Processor registry subkey.

3. From the Edit menu, select New, DWORD Value; enter the name DisableUNCCheck, then press Enter.

4. Double-click the new value, set it to 1, then click OK.

5. Close the registry editor.

6. Reboot the machine.
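With several machines, the same registry change can be scripted. The Python sketch below uses the standard-library `winreg` module to write `DisableUNCCheck = 1` (a DWORD) under the key named in the steps above. It is an untested convenience, not an Ansys-provided tool: it must run from an elevated (administrator) prompt on each machine, it writes nothing unless `--apply` is passed, and the reboot from the last step is still needed.

```python
import sys

KEY_PATH = r"SOFTWARE\Microsoft\Command Processor"
VALUE_NAME = "DisableUNCCheck"


def disable_unc_check(apply: bool = False) -> str:
    """Describe, and optionally set, the DisableUNCCheck DWORD under HKEY_LOCAL_MACHINE.

    With apply=False (the default) the registry is not touched; only the target
    is returned, so the function can be inspected on any platform.
    """
    target = "\\".join(["HKEY_LOCAL_MACHINE", KEY_PATH, VALUE_NAME])
    if apply:
        if sys.platform != "win32":
            raise OSError("winreg is only available on Windows")
        import winreg  # Windows-only standard-library module

        # Open (or create) the key with write access; needs administrator rights.
        with winreg.CreateKeyEx(
            winreg.HKEY_LOCAL_MACHINE, KEY_PATH, 0, winreg.KEY_SET_VALUE
        ) as key:
            winreg.SetValueEx(key, VALUE_NAME, 0, winreg.REG_DWORD, 1)
    return target + " = 1 (REG_DWORD)"


if __name__ == "__main__":
    # Run with --apply to actually write the value.
    print(disable_unc_check(apply="--apply" in sys.argv))
```

Keeping the write behind an explicit flag makes it easy to check the target key on each machine before changing anything.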

Thank you,

Jake

-

October 12, 2019 at 11:56 am

kolyan007

Subscriber

Thank you, Jake. The UNC check has been disabled on all machines using the steps above.

Below are my RSM configuration settings for localhost on the submit host and the most recent error log. Please advise what else is wrong in my configuration.

1 13/10/2019 12:01:15 am RSM Version: 19.5.420.0, Build Date: 30/07/2019 5 7:06 am

2 13/10/2019 12:01:15 am RSM File Version: 19.5.0-beta.3+Branch.release-19.5.Sha.9c15e0a3e0a608e8efe8c568e494d2160788d025

3 13/10/2019 12:01:15 am RSM Library Version: 19.5.0.0

4 13/10/2019 12:01:15 am Job Name: Annulus_Thread_MAX_Sludge-SidWalls=No Separation,rhoSludge=2,3,t=0-DP0-Model (B4)-Static Structural (B5)-Solution (B6)

5 13/10/2019 12:01:15 am Type: Mechanical_ANSYS

6 13/10/2019 12:01:15 am Client Directory: C:TestProject - Copy - Copy_ProjectScratchScr1E08

7 13/10/2019 12:01:15 am Client Machine: Maincad

8 13/10/2019 12:01:15 am Queue: Default [localhost, default]

9 13/10/2019 12:01:15 am Template: Mechanical_ANSYSJob.xml

10 13/10/2019 12:01:15 am Cluster Configuration: localhost [localhost]

11 13/10/2019 12:01:15 am Cluster Type: ARC

12 13/10/2019 12:01:15 am Custom Keyword: blank

13 13/10/2019 12:01:15 am Transfer Option: NativeOS

14 13/10/2019 12:01:15 am Staging Directory: \\MainCAD\RSMStaging

15 13/10/2019 12:01:15 am Delete Staging Directory: True

16 13/10/2019 12:01:15 am Local Scratch Directory: blank

17 13/10/2019 12:01:15 am Platform: Windows

18 13/10/2019 12:01:15 am Cluster Submit Options: blank

19 13/10/2019 12:01:15 am Normal Inputs: [*.dat,file*.*,*.mac,thermal.build,commands.xml,SecInput.txt]

20 13/10/2019 12:01:15 am Cancel Inputs: [file.abt,sec.interrupt]

21 13/10/2019 12:01:15 am Excluded Inputs: [-]

22 13/10/2019 12:01:15 am Normal Outputs: [*.xml,*.NR*,CAERepOutput.xml,cyclic_map.json,exit.topo,file*.dsub,file*.ldhi,file*.png,file*.r0*,file*.r1*,file*.r2*,file*.r3*,file*.r4*,file*.r5*,file*.r6*,file*.r7*,file*.r8*,file*.r9*,file*.rd*,file*.rst,file.BCS,file.ce,file.cm,file.cnd,file.cnm,file.DSP,file.err,file.gst,file.json,file.nd*,file.nlh,file.nr*,file.PCS,file.rdb,file.rfl,file.spm,file0.BCS,file0.ce,file0.cnd,file0.err,file0.gst,file0.nd*,file0.nlh,file0.nr*,file0.PCS,frequencies_*.out,input.x17,intermediate*.topo,Mode_mapping_*.txt,NotSupportedElems.dat,ObjectiveHistory.out,post.out,PostImage*.png,record.txt,solve*.out,topo.err,top ut,vars.topo,SecDebugLog.txt,secStart.log,sec.validation.executed,sec.envvarvalidation.executed,sec.failure]

23 13/10/2019 12:01:15 am Failure Outputs: [*.xml,*.NR*,CAERepOutput.xml,cyclic_map.json,exit.topo,file*.dsub,file*.ldhi,file*.png,file*.r0*,file*.r1*,file*.r2*,file*.r3*,file*.r4*,file*.r5*,file*.r6*,file*.r7*,file*.r8*,file*.r9*,file*.rd*,file*.rst,file.BCS,file.ce,file.cm,file.cnd,file.cnm,file.DSP,file.err,file.gst,file.json,file.nd*,file.nlh,file.nr*,file.PCS,file.rdb,file.rfl,file.spm,file0.BCS,file0.ce,file0.cnd,file0.err,file0.gst,file0.nd*,file0.nlh,file0.nr*,file0.PCS,frequencies_*.out,input.x17,intermediate*.topo,Mode_mapping_*.txt,NotSupportedElems.dat,ObjectiveHistory.out,post.out,PostImage*.png,record.txt,solve*.out,topo.err,top ut,vars.topo,SecDebugLog.txt,sec.solverexitcode,secStart.log,sec.failure,sec.envvarvalidation.executed]

24 13/10/2019 12:01:15 am Cancel Outputs: [file*.err,solve.out,secStart.log,SecDebugLog.txt]

25 13/10/2019 12:01:15 am Excluded Outputs: [-]

26 13/10/2019 12:01:15 am Inquire Files:

27 13/10/2019 12:01:15 am normal: [*.xml,*.NR*,CAERepOutput.xml,cyclic_map.json,exit.topo,file*.dsub,file*.ldhi,file*.png,file*.r0*,file*.r1*,file*.r2*,file*.r3*,file*.r4*,file*.r5*,file*.r6*,file*.r7*,file*.r8*,file*.r9*,file*.rd*,file*.rst,file.BCS,file.ce,file.cm,file.cnd,file.cnm,file.DSP,file.err,file.gst,file.json,file.nd*,file.nlh,file.nr*,file.PCS,file.rdb,file.rfl,file.spm,file0.BCS,file0.ce,file0.cnd,file0.err,file0.gst,file0.nd*,file0.nlh,file0.nr*,file0.PCS,frequencies_*.out,input.x17,intermediate*.topo,Mode_mapping_*.txt,NotSupportedElems.dat,ObjectiveHistory.out,post.out,PostImage*.png,record.txt,solve*.out,topo.err,top ut,vars.topo,SecDebugLog.txt,secStart.log,sec.validation.executed,sec.envvarvalidation.executed,sec.failure]

28 13/10/2019 12:01:15 am cancel: [file*.err,solve.out,secStart.log,SecDebugLog.txt]

29 13/10/2019 12:01:15 am failure: [*.xml,*.NR*,CAERepOutput.xml,cyclic_map.json,exit.topo,file*.dsub,file*.ldhi,file*.png,file*.r0*,file*.r1*,file*.r2*,file*.r3*,file*.r4*,file*.r5*,file*.r6*,file*.r7*,file*.r8*,file*.r9*,file*.rd*,file*.rst,file.BCS,file.ce,file.cm,file.cnd,file.cnm,file.DSP,file.err,file.gst,file.json,file.nd*,file.nlh,file.nr*,file.PCS,file.rdb,file.rfl,file.spm,file0.BCS,file0.ce,file0.cnd,file0.err,file0.gst,file0.nd*,file0.nlh,file0.nr*,file0.PCS,frequencies_*.out,input.x17,intermediate*.topo,Mode_mapping_*.txt,NotSupportedElems.dat,ObjectiveHistory.out,post.out,PostImage*.png,record.txt,solve*.out,topo.err,top ut,vars.topo,SecDebugLog.txt,sec.solverexitcode,secStart.log,sec.failure,sec.envvarvalidation.executed]

30 13/10/2019 12:01:15 am RemotePostInformation: [RemotePostInformation.txt]

31 13/10/2019 12:01:15 am SolutionInformation: [solve.out,file.gst,file.nlh,file0.gst,file0.nlh,file.cnd]

32 13/10/2019 12:01:15 am PostDuringSolve: [file.rcn,file.redm,file.rfl,file.rfrq,file.rmg,file.rdsp,file.rsx,file.rst,file.rth,solve.out,file.gst,file.nlh,file0.gst,file0.nlh,file.cnd]

33 13/10/2019 12:01:15 am transcript: [solve.out,monitor.json]

34 13/10/2019 12:01:15 am Submission in progress...

35 13/10/2019 12:01:15 am Created storage directory \\MainCAD\RSMStaging\eubgp21n.ezb

36 13/10/2019 12:01:15 am Runtime Settings:

37 13/10/2019 12:01:15 am Job Owner: MAINCAD\User

38 13/10/2019 12:01:15 am Submit Time: Sunday, 13 October 2019 12:01 am

39 13/10/2019 12:01:15 am Directory: \\MainCAD\RSMStaging\eubgp21n.ezb

40 13/10/2019 12:01:15 am File Transfer Package: Upload to localhost:0

41 13/10/2019 12:01:15 am Name: RSM_Upload_During_Submit

42 13/10/2019 12:01:15 am Local Directory: C:TestProject - Copy - Copy_ProjectScratchScr1E08

43 13/10/2019 12:01:15 am Storage Path: \\MainCAD\RSMStaging\eubgp21n.ezb

44 13/10/2019 12:01:15 am Storage Location:

45 13/10/2019 12:01:15 am Files: [*.dat,file*.*,*.mac,thermal.build,commands.xml,SecInput.txt]

46 13/10/2019 12:01:15 am Exclude: [-]

47 13/10/2019 12:01:15 am Storage contents:

48 13/10/2019 12:01:15 am Uploading file: C:TestProject - Copy - Copy_ProjectScratchScr1E08ds.dat

49 13/10/2019 12:01:15 am Copying C:TestProject - Copy - Copy_ProjectScratchScr1E08ds.dat to //MainCAD/RSMStaging/eubgp21n.ezb/ds.dat

50 13/10/2019 12:01:15 am File size: 39897206 bytes, transferred: 39897206 bytes.

51 13/10/2019 12:01:15 am Uploading file: C:TestProject - Copy - Copy_ProjectScratchScr1E08remote.dat

52 13/10/2019 12:01:15 am Copying C:TestProject - Copy - Copy_ProjectScratchScr1E08remote.dat to //MainCAD/RSMStaging/eubgp21n.ezb/remote.dat

53 13/10/2019 12:01:15 am File size: 179 bytes, transferred: 179 bytes.

54 13/10/2019 12:01:15 am Uploading file: C:TestProject - Copy - Copy_ProjectScratchScr1E08commands.xml

55 13/10/2019 12:01:15 am Copying C:TestProject - Copy - Copy_ProjectScratchScr1E08commands.xml to //MainCAD/RSMStaging/eubgp21n.ezb/commands.xml

56 13/10/2019 12:01:15 am File size: 477 bytes, transferred: 477 bytes.

57 13/10/2019 12:01:15 am Uploading file: C:TestProject - Copy - Copy_ProjectScratchScr1E08SecInput.txt

58 13/10/2019 12:01:15 am Copying C:TestProject - Copy - Copy_ProjectScratchScr1E08SecInput.txt to //MainCAD/RSMStaging/eubgp21n.ezb/SecInput.txt

59 13/10/2019 12:01:15 am File size: 899 bytes, transferred: 899 bytes.

60 13/10/2019 12:01:15 am Storage contents:

61 13/10/2019 12:01:15 am ds.dat

62 13/10/2019 12:01:15 am remote.dat

63 13/10/2019 12:01:15 am commands.xml

64 13/10/2019 12:01:15 am SecInput.txt

65 13/10/2019 12:01:15 am 39898.76 KB, .11 sec (364870.74 KB/sec)

66 13/10/2019 12:01:15 am Submission in progress...

67 13/10/2019 12:01:15 am Commands from commands.xml file

68 13/10/2019 12:01:15 am Behavior code: Ansys.Rsm.ClusterOperations.Behaviors.MechanicalAnsysBehaviors

69 13/10/2019 12:01:15 am Loading Cluster Customization file C:\Program Files\ANSYS Inc\v195\RSM\Config\xml\ClusterJobCustomization.xml.

70 13/10/2019 12:01:15 am No customization found for cluster machineName Maincad, type ARC

71 13/10/2019 12:01:15 am HPC Commands Files Used:

72 13/10/2019 12:01:15 am hpc_commands_ARC.xml

73 13/10/2019 12:01:15 am Environment variables:

74 13/10/2019 12:01:15 am RSM_PYTHON_HOME=C:\Program Files\ANSYS Inc\v195\commonfiles\CPython\2_7_15\winx64\Release\python

75 13/10/2019 12:01:15 am RSM_IRON_PYTHON_HOME=C:\Program Files\ANSYS Inc\v195\commonfiles\IronPython

76 13/10/2019 12:01:15 am RSM_PYTHON_EXE=C:\Program Files\ANSYS Inc\v195\commonfiles\CPython\2_7_15\winx64\Release\python\python.exe

77 13/10/2019 12:01:15 am RSM_TASK_WORKING_DIRECTORY=\\MainCAD\RSMStaging\eubgp21n.ezb

78 13/10/2019 12:01:15 am RSM_USE_SSH_LINUX=False

79 13/10/2019 12:01:15 am RSM_QUEUE_NAME=default

80 13/10/2019 12:01:15 am RSM_CONFIGUREDQUEUE_NAME=Default

81 13/10/2019 12:01:15 am RSM_COMPUTE_SERVER_MACHINE_NAME=Maincad

82 13/10/2019 12:01:15 am RSM_HPC_JOBNAME=Mechanical

83 13/10/2019 12:01:15 am RSM_HPC_DISPLAYNAME=Annulus_Thread_MAX_Sludge-SidWalls=No Separation,rhoSludge=2,3,t=0-DP0-Model (B4)-Static Structural (B5)-Solution (B6)

84 13/10/2019 12:01:15 am RSM_HPC_CORES=12

85 13/10/2019 12:01:15 am RSM_HPC_DISTRIBUTED=TRUE

86 13/10/2019 12:01:15 am RSM_HPC_NODE_EXCLUSIVE=FALSE

87 13/10/2019 12:01:15 am RSM_HPC_QUEUE=default

88 13/10/2019 12:01:15 am RSM_HPC_USER=MAINCAD\User

89 13/10/2019 12:01:15 am RSM_HPC_WORKDIR=\\MainCAD\RSMStaging\eubgp21n.ezb

90 13/10/2019 12:01:15 am RSM_HPC_JOBTYPE=Mechanical_ANSYS

91 13/10/2019 12:01:15 am RSM_HPC_ANSYS_LOCAL_INSTALL_DIRECTORY=C:\Program Files\ANSYS Inc\v195

92 13/10/2019 12:01:15 am RSM_HPC_VERSION=195

93 13/10/2019 12:01:15 am RSM_HPC_STAGING=\\MainCAD\RSMStaging\eubgp21n.ezb

94 13/10/2019 12:01:15 am RSM_HPC_LOCAL_PLATFORM=Windows

95 13/10/2019 12:01:15 am RSM_HPC_CLUSTER_TARGET_PLATFORM=Windows

96 13/10/2019 12:01:15 am RSM_HPC_STDOUTFILE=stdout_9f9da426-822a-4234-a55a-2a8fc2bef7c8.rsmout

97 13/10/2019 12:01:15 am RSM_HPC_STDERRFILE=stderr_9f9da426-822a-4234-a55a-2a8fc2bef7c8.rsmout

98 13/10/2019 12:01:15 am RSM_HPC_STDOUTLIVE=stdout_9f9da426-822a-4234-a55a-2a8fc2bef7c8.live

99 13/10/2019 12:01:15 am RSM_HPC_STDERRLIVE=stderr_9f9da426-822a-4234-a55a-2a8fc2bef7c8.live

100 13/10/2019 12:01:15 am RSM_HPC_SCRIPTS_DIRECTORY_LOCAL=C:\Program Files\ANSYS Inc\v195\RSM\Config\scripts

101 13/10/2019 12:01:15 am RSM_HPC_SCRIPTS_DIRECTORY=%AWP_ROOT195%\RSM\Config\scripts

102 13/10/2019 12:01:15 am RSM_HPC_SUBMITHOST=localhost

103 13/10/2019 12:01:15 am RSM_HPC_STORAGEID=2e240152-9c98-4c8b-b806-5ccbbda6db14 Mechanical=LocalOS#localhost$\\MainCAD\RSMStaging\eubgp21n.ezb Saturday, October 12, 2019 11:01:15.288 AM True

104 13/10/2019 12:01:15 am RSM_HPC_PLATFORMSTORAGEID=\\MainCAD\RSMStaging\eubgp21n.ezb

105 13/10/2019 12:01:15 am RSM_HPC_NATIVEOPTIONS=

106 13/10/2019 12:01:15 am ARC_ROOT=C:\Program Files\ANSYS Inc\v195\RSM\Config\scripts\..\..\ARC

107 13/10/2019 12:01:15 am RSM_HPC_KEYWORD=ARC

108 13/10/2019 12:01:15 am JobType is: Mechanical_ANSYS

109 13/10/2019 12:01:15 am Final command platform: Windows

110 13/10/2019 12:01:15 am RSM_PYTHON_HOME=C:\Program Files\ANSYS Inc\v195\commonfiles\CPython\2_7_15\winx64\Release\python

111 13/10/2019 12:01:15 am RSM_HPC_JOBNAME=Mechanical

112 13/10/2019 12:01:15 am Distributed mode requested: True

113 13/10/2019 12:01:15 am RSM_HPC_DISTRIBUTED=TRUE

114 13/10/2019 12:01:15 am Running 1 commands

115 13/10/2019 12:01:15 am Job working directory: \\MainCAD\RSMStaging\eubgp21n.ezb

116 13/10/2019 12:01:15 am Number of CPU requested: 12

117 13/10/2019 12:01:15 am AWP_ROOT195=C:\Program Files\ANSYS Inc\v195

118 13/10/2019 12:01:15 am Checking queue default exists ...

119 13/10/2019 12:01:15 am External cluster command: queryQueues

120 13/10/2019 12:01:15 am Cluster command before expansion: %ARC_ROOT%\bin\ArcQueues.exe

121 13/10/2019 12:01:15 am Final cluster command: C:\Program Files\ANSYS Inc\v195\RSM\Config\scripts\..\..\ARC\bin\ArcQueues.exe

122 13/10/2019 12:01:15 am Working directory : \\MainCAD\RSMStaging\eubgp21n.ezb

123 13/10/2019 12:01:15 am Variables:

124 13/10/2019 12:01:15 am RSM_PYTHON_HOME=C:\Program Files\ANSYS Inc\v195\commonfiles\CPython\2_7_15\winx64\Release\python

125 13/10/2019 12:01:15 am RSM_IRON_PYTHON_HOME=C:\Program Files\ANSYS Inc\v195\commonfiles\IronPython

126 13/10/2019 12:01:15 am RSM_PYTHON_EXE=C:\Program Files\ANSYS Inc\v195\commonfiles\CPython\2_7_15\winx64\Release\python\python.exe

127 13/10/2019 12:01:15 am RSM_TASK_WORKING_DIRECTORY=\\MainCAD\RSMStaging\eubgp21n.ezb

128 13/10/2019 12:01:15 am RSM_USE_SSH_LINUX=False

129 13/10/2019 12:01:15 am RSM_QUEUE_NAME=default

130 13/10/2019 12:01:15 am RSM_CONFIGUREDQUEUE_NAME=Default

131 13/10/2019 12:01:15 am RSM_COMPUTE_SERVER_MACHINE_NAME=Maincad

132 13/10/2019 12:01:15 am RSM_HPC_JOBNAME=Mechanical

133 13/10/2019 12:01:15 am RSM_HPC_DISPLAYNAME=Annulus_Thread_MAX_Sludge-SidWalls=No Separation,rhoSludge=2,3,t=0-DP0-Model (B4)-Static Structural (B5)-Solution (B6)

134 13/10/2019 12:01:15 am RSM_HPC_CORES=12

135 13/10/2019 12:01:15 am RSM_HPC_DISTRIBUTED=TRUE

136 13/10/2019 12:01:15 am RSM_HPC_NODE_EXCLUSIVE=FALSE

137 13/10/2019 12:01:15 am RSM_HPC_QUEUE=default

138 13/10/2019 12:01:15 am RSM_HPC_USER=MAINCAD\User

139 13/10/2019 12:01:15 am RSM_HPC_WORKDIR=\\MainCAD\RSMStaging\eubgp21n.ezb

140 13/10/2019 12:01:15 am RSM_HPC_JOBTYPE=Mechanical_ANSYS

141 13/10/2019 12:01:15 am RSM_HPC_ANSYS_LOCAL_INSTALL_DIRECTORY=C:\Program Files\ANSYS Inc\v195

142 13/10/2019 12:01:15 am RSM_HPC_VERSION=195

143 13/10/2019 12:01:15 am RSM_HPC_STAGING=\\MainCAD\RSMStaging\eubgp21n.ezb

144 13/10/2019 12:01:15 am RSM_HPC_LOCAL_PLATFORM=Windows

145 13/10/2019 12:01:15 am RSM_HPC_CLUSTER_TARGET_PLATFORM=Windows

146 13/10/2019 12:01:15 am RSM_HPC_STDOUTFILE=stdout_9f9da426-822a-4234-a55a-2a8fc2bef7c8.rsmout

147 13/10/2019 12:01:15 am RSM_HPC_STDERRFILE=stderr_9f9da426-822a-4234-a55a-2a8fc2bef7c8.rsmout

148 13/10/2019 12:01:15 am RSM_HPC_STDOUTLIVE=stdout_9f9da426-822a-4234-a55a-2a8fc2bef7c8.live

149 13/10/2019 12:01:15 am RSM_HPC_STDERRLIVE=stderr_9f9da426-822a-4234-a55a-2a8fc2bef7c8.live

150 13/10/2019 12:01:15 am RSM_HPC_SCRIPTS_DIRECTORY_LOCAL=C:\Program Files\ANSYS Inc\v195\RSM\Config\scripts

151 13/10/2019 12:01:15 am RSM_HPC_SCRIPTS_DIRECTORY=%AWP_ROOT195%\RSM\Config\scripts

152 13/10/2019 12:01:15 am RSM_HPC_SUBMITHOST=localhost