TAGGED: mpp


November 23, 2022 at 9:42 pm

Santiago Ruiz

Subscriber

Hi,

I am trying to run LS-DYNA on an HPC system. The computing center provided the following symbolic links for running the software in double precision and single precision:

- ls-dyna_mpp_d_R13_1_0_x64_centos78_ifort190_avx2_openmpi2.1.3.l2a

- ls-dyna_mpp_s_R13_1_0_x64_centos78_ifort190_avx2_openmpi2.1.3.l2a

I haven't been able to run LS-DYNA using these commands. From the online documentation (https://ftp.lstc.com/anonymous/outgoing/support/FAQ/ASCII_output_for_MPP_via_binout and https://lstc.com/download/ls-dyna), I can see that l2a, which appears at the end of these names, is the utility used to extract ASCII files from a binout file. Therefore, I am not sure whether these are the commands that should be used to run an analysis.

Could you please help me confirm whether the provided commands are the correct ones to run LS-DYNA, or whether I am missing something in the execution?

November 28, 2022 at 3:55 pm

Ben_Ben

Subscriber

I think you need to specify the input file after the command. I use i=input_file to define the input file.

Here is the command I use to run it on my HPC:

ls-dyna_smp_d_R13_1_0_x64_centos79_ifort190 i=input_file.k NCPU=12


November 28, 2022 at 6:40 pm

Reno Genest

Ansys Employee

Hello Santiago,

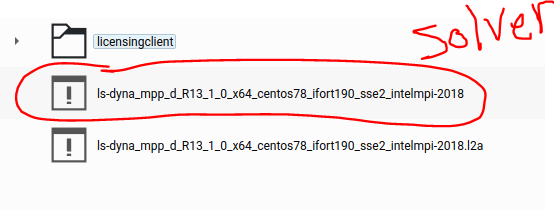

The file ending with .I2a is not the LS-DYNA solver. Please take the other file in the folder:
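Because the solver and the l2a utility share the same name prefix, it is easy to pick the wrong one. A minimal shell sketch that lists the candidate solver executables in a folder and skips the `.l2a` utility, assuming all the executables (or the symbolic links to them) start with `ls-dyna_`; the function name `list_solvers` and the folder path are hypothetical placeholders:

```shell
#!/usr/bin/env bash
# Hypothetical helper: list LS-DYNA executables in a folder, skipping the
# *.l2a utility (it converts binout files to ASCII and is not the solver).
# Assumes every executable or symbolic link name starts with "ls-dyna_".
list_solvers() {
  local dir="$1"
  local f
  for f in "$dir"/ls-dyna_*; do
    [ -e "$f" ] || continue          # glob matched nothing (or broken link)
    case "$f" in
      *.l2a) ;;                      # binout-to-ASCII utility: skip it
      *)
        if [ -x "$f" ]; then         # -x follows symbolic links
          echo "$f"
        fi
        ;;
    esac
  done
}

# Placeholder path; point it at the folder your computing center provided.
list_solvers /path/to/lsdyna
```

Whatever this prints is the file to pass the i= input option to (directly for SMP, or after mpirun/mpiexec for MPP).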

Here are the commands I use to run LS-DYNA on Linux:

SMP:

/data2/rgenest/lsdyna/ls-dyna_smp_d_R13_0_0_x64_centos610_ifort190/ls-dyna_smp_d_R13_0_0_x64_centos610_ifort190 i=/data2/rgenest/runs/Test/input.k ncpu=-4 memory=20m

Note that for SMP, we recommend setting ncpu=-4 (that is, a negative number of CPUs). The negative sign tells the solver to use consistency checking, so that results are consistent across different numbers of cores.
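Since only the core count and its sign change between runs, a small helper can build the SMP command line. This is a dry-run sketch that prints the command rather than running it; the helper name `smp_command` and the paths are hypothetical, and memory=20m is copied from the example above rather than a recommendation for your model:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (not run) an SMP LS-DYNA command with a negative
# ncpu value, which per the reply above enables consistency checking.
smp_command() {
  local solver="$1"          # full path to the SMP solver executable
  local input="$2"           # keyword input deck, e.g. input.k
  local cores="$3"           # positive number of cores to use
  local memory="${4:-20m}"   # memory value copied from the example above
  # Prefix the core count with "-" to request consistent results.
  echo "$solver i=$input ncpu=-$cores memory=$memory"
}

# Placeholder paths; replace them with your own.
smp_command /path/to/ls-dyna_smp_d_R13_0_0_x64_centos610_ifort190 /path/to/input.k 4
```

The printed line can then be pasted into a job script. Printing instead of executing makes it easy to check the paths before submitting.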

MPP:

To run MPP, we specify the path to the mpiexec or mpirun executable first, followed by the number of processes (-np) and then the solver.

Intel MPI:

/data2/rgenest/intel/oneapi/mpi/2021.2.0/bin/mpiexec -np 4 /data2/rgenest/lsdyna/ls-dyna_mpp_d_R13_0_0_x64_centos610_ifort190_sse2_intelmpi-2018/ls-dyna_mpp_d_R13_0_0_x64_centos610_ifort190_sse2_intelmpi-2018 i=/data2/rgenest/runs/Test/input.k memory=20m

Platform MPI:

/data2/rgenest/bin/ibm/platform_mpi/bin/mpirun -np 4 /data2/rgenest/lsdyna/ls-dyna_mpp_d_R13_0_0_x64_centos610_ifort190_sse2_platformmpi/ls-dyna_mpp_d_R13_0_0_x64_centos610_ifort190_sse2_platformmpi i=/data2/rgenest/runs/Test/input.k memory=20m

Open MPI:

/opt/openmpi-4.0.0/bin/mpirun -np 4 /data2/rgenest/lsdyna/ls-dyna_mpp_d_R13_0_0_x64_centos610_ifort190_sse2_openmpi4/ls-dyna_mpp_d_R13_0_0_x64_centos610_ifort190_sse2_openmpi4.0.0 i=/data2/rgenest/runs/Test/input.k memory=20m

You will have to modify the above commands with your own paths to the LS-DYNA solver, the MPI mpiexec or mpirun executable, and the input file.
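The three MPP examples share one pattern: launcher, -np, solver, input, memory. Each example pairs a solver build with the launcher from the same MPI family (an intelmpi solver with Intel MPI's mpiexec, an openmpi solver with Open MPI's mpirun, and so on). A dry-run sketch of that pattern follows. The helper name `mpp_command` and the paths are hypothetical. The `.l2a` check is an added safeguard against the mix-up in the original question:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (not run) an MPP LS-DYNA command line.
# The launcher must come from the same MPI family the solver was built for
# (e.g. an *_openmpi* solver with Open MPI's mpirun).
mpp_command() {
  local launcher="$1"        # full path to mpirun or mpiexec
  local nproc="$2"           # number of MPI processes (-np)
  local solver="$3"          # full path to the MPP solver executable
  local input="$4"           # keyword input deck
  local memory="${5:-20m}"   # memory value copied from the examples above
  case "$solver" in
    *.l2a)
      echo "error: $solver is the l2a utility, not the solver" >&2
      return 1
      ;;
  esac
  echo "$launcher -np $nproc $solver i=$input memory=$memory"
}

# Placeholder paths; replace them with your own.
mpp_command /opt/openmpi-4.0.0/bin/mpirun 4 /path/to/ls-dyna_mpp_d_R13_0_0_x64_centos610_ifort190_sse2_openmpi4.0.0 /path/to/input.k
```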

Let me know how it goes.

Reno.


November 30, 2022 at 10:35 am

Ben_Ben

Subscriber

Hi Reno,

For an SMP run on 32 cores, should I still set NCPU=-4?

Ben


November 30, 2022 at 6:21 pm

Reno Genest

Ansys Employee

Hello Ben,

To run SMP on 32 cores, you need to set NCPU=-32. However, note that SMP does not scale well beyond 8 cores, so you won't see much speedup running on 32 cores versus 8. To make full use of all 32 cores, you should run MPP. Also note that the model must be large enough to run on 32 cores. We recommend at least 10,000 elements per core; with fewer elements per core, communication between the cores becomes the bottleneck. So, to run efficiently on 32 cores, you would want a model with 320,000 or more elements. This is a rule of thumb, and the best core count is model specific. You can benchmark your model with different numbers of cores (for example 8, 16, 24, and 32), compare the calculation times, and see what is fastest for you.
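The rule of thumb is a simple division: elements ÷ 10,000 gives the largest core count worth trying. For example, 320,000 ÷ 10,000 = 32. Here is a dry-run sketch that does this arithmetic and prints one MPP command per candidate core count, so each can be timed. The function names and the SOLVER/INPUT placeholders are hypothetical. The 10,000 threshold is the rule of thumb from the reply, not a hard limit:

```shell
#!/usr/bin/env bash
# Largest core count that keeps at least $2 (default 10000) elements per core.
max_cores_for() {
  local elements="$1"
  local per_core="${2:-10000}"
  echo $(( elements / per_core ))   # integer division rounds down
}

# Print (not run) one MPP command per candidate core count that satisfies the
# rule of thumb. SOLVER and INPUT are placeholders for your own paths.
benchmark_plan() {
  local elements="$1"; shift
  local limit np
  limit=$(max_cores_for "$elements")
  for np in "$@"; do
    if (( np <= limit )); then
      echo "mpirun -np $np SOLVER i=INPUT memory=20m"
    fi
  done
}

max_cores_for 320000               # prints 32
benchmark_plan 200000 8 16 24 32   # limit is 20, so only the 8- and 16-core commands print
```

You can run each printed command (for example under `time`) and keep the core count that finishes fastest for later comparisons.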

You will find more information on MPP here:

https://ftp.lstc.com/anonymous/outgoing/support/PRESENTATIONS/mpp_201305.pdf

https://ftp.lstc.com/anonymous/outgoing/support/FAQ/mpp.getting_started

Note that with MPP you should expect slightly different results with different numbers of cores. This is because the FEM domain is decomposed into as many subdomains as the number of cores requested. So, once you find the number of cores that gives you the best performance, please use that same number of MPP cores when comparing results between different runs.

Let me know how it goes.

Reno.


December 1, 2022 at 5:06 pm

Ben_Ben

Subscriber

I'm going to give it a go. I just need to set up MPP on my HPC.

Will let you know how it goes.

Ben

The topic ‘Run LS-DYNA in HPC’ is closed to new replies.


© 2026 Copyright ANSYS, Inc. All rights reserved.